AI-generated character dialogue in games

AI is changing how game characters interact with players. This article explains how AI powers dynamic NPC dialogue, highlights leading tools such as Inworld AI, GPT-4, and Convai, and looks at real examples of generative conversation in games.

Video games have traditionally relied on pre-written dialogue trees, in which NPCs (non-player characters) deliver fixed lines in response to player actions. Today, AI-driven dialogue uses machine learning models, especially large language models (LLMs), to generate character responses dynamically. As the Associated Press reports, studios are now experimenting with "generative AI to help create NPC dialogue" and building worlds that are "more responsive" to player creativity.

In practice, this means NPCs can remember earlier interactions, respond with new lines, and take part in open-ended conversations instead of repeating predetermined answers. Game studios and researchers note that LLMs' strong grasp of context produces "natural-sounding responses" that can replace traditional dialogue scripts.

Why AI dialogue matters

Immersion & replay value

NPCs gain vivid personalities with depth and nuance, which leads to richer conversations and stronger player engagement.

Context awareness

Characters remember earlier encounters and adapt to the player's choices, making worlds feel more responsive and alive.

Emergent gameplay

Players can interact freely and drive emergent stories instead of following predetermined quest paths.

AI as a creative tool, not a replacement

AI-driven dialogue is designed to assist developers, not to replace human creativity. Ubisoft emphasizes that writers and artists still define each character's core identity.

Developers "shape [an NPC's] character, backstory and conversation style," and then use AI "only if it has value to them"; AI "must not replace" human creativity.

Source: Ubisoft, NEO NPC project

In Ubisoft's prototype "NEO NPC" project, designers first create an NPC's background and voice, then guide the AI to stay within that character. Generative tools act as "co-pilots" for storytelling, helping writers explore ideas quickly and efficiently.

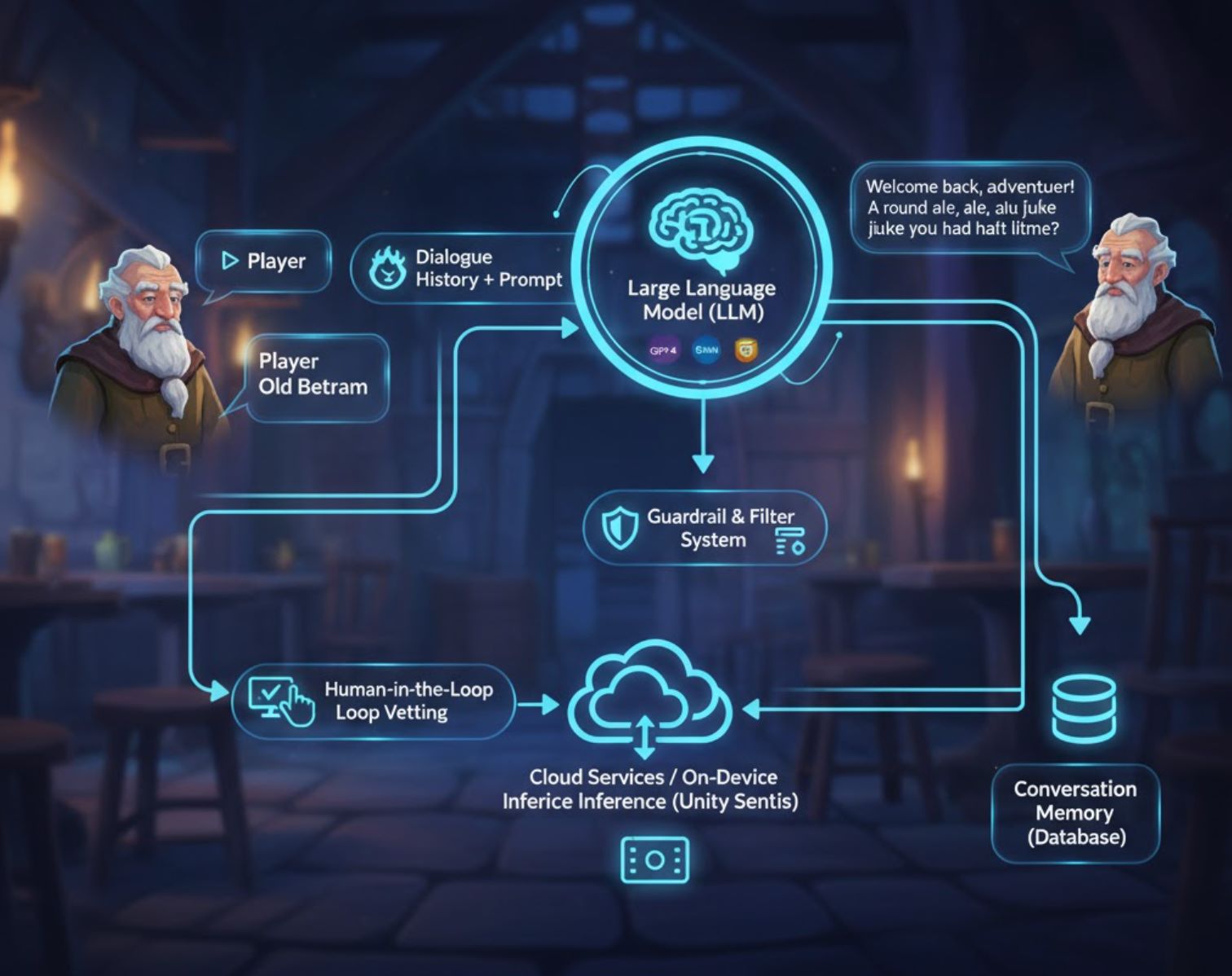

How AI dialogue systems work

Most AI dialogue systems use large language models (LLMs) such as GPT-4, Google Gemini, or Claude: neural networks trained on vast amounts of text to generate coherent responses.

Character definition

Developers write a prompt describing the NPC's personality and context (e.g. "You are an elderly innkeeper named Old Bertram who speaks kindly and remembers the player's previous orders").

Real-time generation

When a player talks to an AI NPC, the game sends the prompt and the dialogue history to the language model via an API.

Response delivery

The AI returns a line of dialogue, which the game displays or voices in real time or near real time.

Memory retention

Conversation logs are saved so the AI knows what has been said before and can maintain context across sessions.
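The four steps above can be sketched as a small loop. This is a minimal Python illustration, not any vendor's SDK: `NpcDialogue` and `fake_model` are hypothetical names, and `fake_model` stands in for a real LLM API call. The role/content message format mirrors what many chat-style APIs accept, but check your provider's documentation.

```python
from dataclasses import dataclass, field
from typing import Callable

# Chat messages in the role/content shape that many chat-style LLM APIs accept.
Message = dict[str, str]


@dataclass
class NpcDialogue:
    persona: str                                          # character definition (system prompt)
    generate: Callable[[list[Message]], str]              # real-time generation: the model call
    history: list[Message] = field(default_factory=list)  # memory retention

    def build_messages(self, player_line: str) -> list[Message]:
        # Persona first, then every earlier turn, then the new player line.
        return (
            [{"role": "system", "content": self.persona}]
            + self.history
            + [{"role": "user", "content": player_line}]
        )

    def talk(self, player_line: str) -> str:
        reply = self.generate(self.build_messages(player_line))
        # Store both turns so the next prompt carries the conversation so far.
        self.history.append({"role": "user", "content": player_line})
        self.history.append({"role": "assistant", "content": reply})
        return reply  # response delivery: the game displays or voices this line


def fake_model(messages: list[Message]) -> str:
    # Hypothetical stand-in for a real LLM API call; it just echoes the last line.
    return f"Bertram replies to: {messages[-1]['content']}"


if __name__ == "__main__":
    bertram = NpcDialogue("You are Old Bertram, a kindly elderly innkeeper.", fake_model)
    print(bertram.talk("One ale, please."))
    print(bertram.talk("Same as last time."))
```

Because the full history is resent on every turn, the prompt grows as the conversation goes on; saving `history` to disk (for example with `json.dump`) is one way to carry memory across sessions.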

Safeguards & quality control

Teams build in several safeguards to keep characters consistent and prevent inappropriate responses:

- Guardrail systems and toxicity filters keep NPCs in character

- Human-in-the-loop iteration: if an NPC "responded like the character we had in mind," the developers keep it; otherwise they adjust the model prompts

- High-quality prompts ensure high-quality dialogue ("garbage in, garbage out")

- Teams choose between cloud services and on-device inference (e.g. Unity Sentis) to optimize performance and reduce latency
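A post-generation check is one simple layer of the guardrails described above. The sketch below is an illustration under stated assumptions: the blocklist, the fallback line, and the 300-character limit are all hypothetical, and word matching like this complements, rather than replaces, a real moderation service.

```python
import re

# Hypothetical blocklist: out-of-world words and assistant-style phrases.
BLOCKED_TERMS = ["smartphone", "internet", "as an ai"]

# Hypothetical in-character line used whenever a reply is rejected.
FALLBACK_LINE = "Hmm, I'd rather not talk about that. Can I get you anything else?"


def guard_reply(reply: str, max_chars: int = 300) -> str:
    """Return the model's reply, or an in-character fallback if it fails a check."""
    # Lowercase and collapse whitespace so "As an  AI" still matches "as an ai".
    normalized = re.sub(r"\s+", " ", reply.lower())
    for term in BLOCKED_TERMS:
        # Word boundaries avoid false hits inside longer words.
        if re.search(rf"\b{re.escape(term)}\b", normalized):
            return FALLBACK_LINE
    # Reject empty replies and rambling ones that would overflow a dialogue box.
    if not reply.strip() or len(reply) > max_chars:
        return FALLBACK_LINE
    return reply.strip()
```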

Benefits and challenges

Benefits for developers & players

- Time savings: Quickly draft conversations instead of writing every line by hand

- Creative brainstorming: Use AI as a starting point for exploring new dialogue directions

- Scalability: Generate long chat sessions and personalized story branches

- Player engagement: NPCs that remember earlier encounters feel more alive and adaptive

- Emergent storytelling: Players can drive open-ended interactions in sandbox or multiplayer games

Pitfalls to manage

- Meaningless chatter: Unconstrained, random dialogue is "just endless noise" and breaks immersion

- Hallucination: The AI can generate irrelevant lines unless it is carefully constrained with context

- Compute cost: LLM API calls become expensive at scale; usage fees can strain budgets

- Ethical concerns: Voice actors and writers worry about losing their jobs

- Transparency: Some studios are considering telling players which lines are AI-generated
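The cost pitfall is easiest to see with a back-of-the-envelope estimate. The function below simply multiplies turns by tokens by price; every number in the example call is a hypothetical placeholder, not any provider's actual rate.

```python
def monthly_llm_cost(
    players: int,
    conversations_per_player: int,
    turns_per_conversation: int,
    input_tokens_per_turn: int,    # persona + history + new line, resent every turn
    output_tokens_per_turn: int,   # the NPC's reply
    usd_per_million_input: float,
    usd_per_million_output: float,
) -> float:
    """Estimate one month of LLM API spend for NPC dialogue."""
    turns = players * conversations_per_player * turns_per_conversation
    input_cost = turns * input_tokens_per_turn / 1_000_000 * usd_per_million_input
    output_cost = turns * output_tokens_per_turn / 1_000_000 * usd_per_million_output
    return input_cost + output_cost


# Hypothetical inputs: 10,000 players, 30 conversations each, 10 turns per conversation,
# 1,500 input and 60 output tokens per turn, at $1 / $4 per million tokens.
print(monthly_llm_cost(10_000, 30, 10, 1_500, 60, 1.00, 4.00))  # 5220.0
```

In this example the input side accounts for $4,500 of the $5,220, because the persona and conversation history are resent with every turn. Trimming history or caching common replies attacks the larger term.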

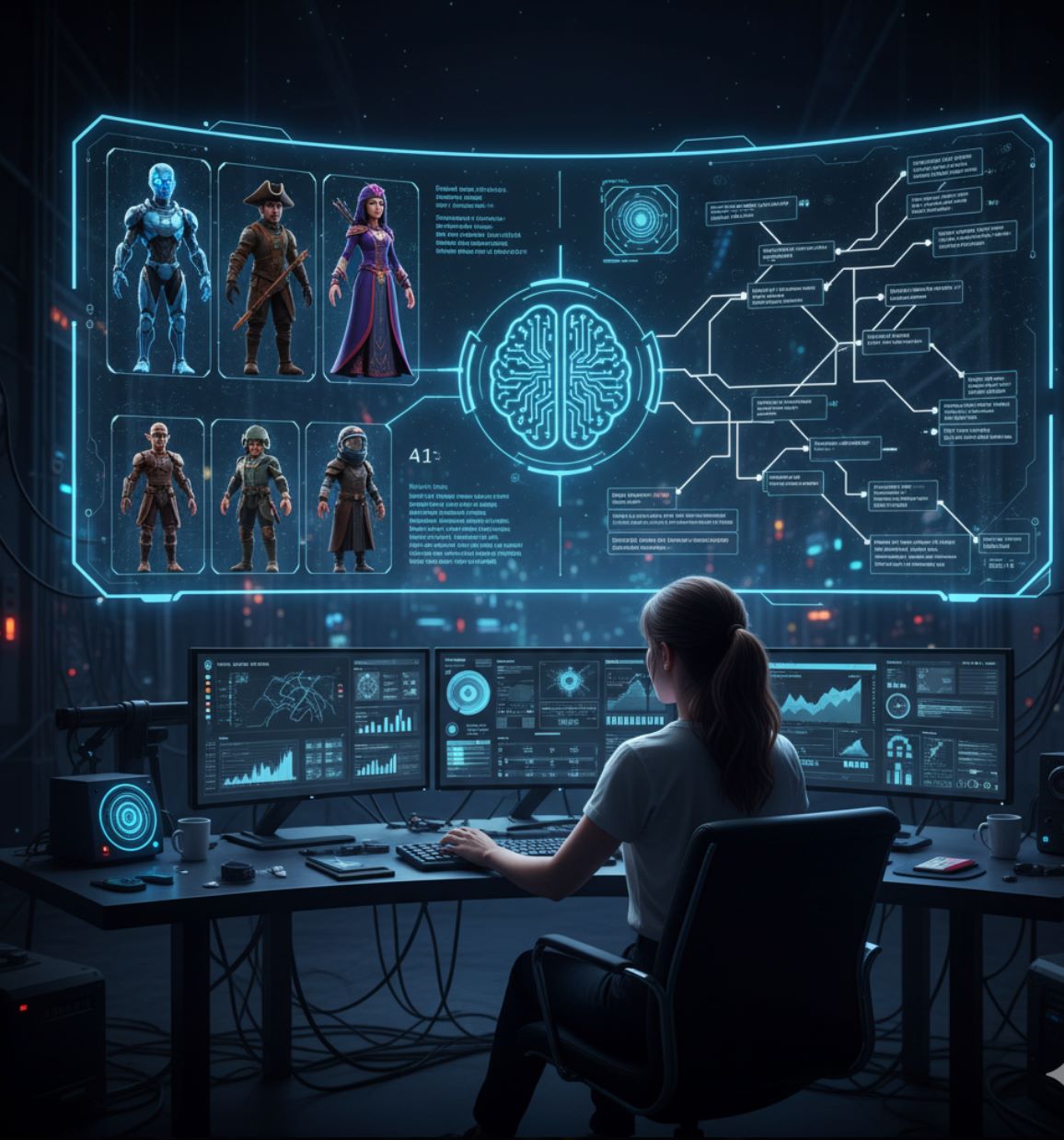

Tools & platforms for AI dialogue in games

Game creators have many options for AI dialogue. Here are some notable tools and technologies:

Inworld AI

Application information

| Field | Details |
|---|---|
| Developer | Inworld AI, Inc. |
| Language support | Primarily English; multilingual voice generation and localization features in development. |
| Pricing model | Freemium: free credits, then pay-as-you-go usage for LLM dialogue and text-to-speech. |

Overview

Inworld AI is a generative AI platform for creating highly realistic, emotionally intelligent non-player characters (NPCs) for games. By combining memory, goals, personality, and voice synthesis, it enables dynamic, context-aware conversations that evolve with player behavior and the state of the world. Game developers can build AI-driven characters with visual tools and then integrate them with game engines such as Unreal, or via an API.

Key features

Characters with memory, goals, and emotional dynamics that respond naturally to player interactions.

A no-code, graph-based Studio interface for defining personality, knowledge, relationships, and dialogue style.

Low-latency TTS with built-in voice archetypes tuned for games and emotional nuance.

NPCs remember past interactions and develop relationships with players over time.

Filter character knowledge and moderate responses to keep NPC behavior realistic and safe.

SDKs and plugins for Unreal Engine, Unity (early access), and Node.js agent templates.

Getting started

Sign up for an Inworld Studio account on the Inworld website to access the character creator.

Use Studio to define your NPC's persona, memory, emotional graphs, and knowledge base.

Download the Unreal Runtime SDK or the Unity plugin, then import the character template components into your project.

Set up player input (speech or text), connect it to the dialogue graph, and map the output to text-to-speech and lip-sync.

Define what your NPC knows and how its knowledge evolves in response to the player's actions over time.

Prototype interactions in Studio, review the generated dialogue, adjust character goals and emotional weights, then redeploy.

Use the API or the integrated SDK to launch your AI-driven NPCs in your game or interactive experience.

Important considerations

Configuration & optimization

- Tuning memory and safety filtering requires careful configuration to prevent unrealistic or unsafe NPC responses

- Voice localization is expanding, but not all languages are available yet

- Test character behavior thoroughly before shipping to production to ensure high-quality interactions

Frequently asked questions

Yes, Inworld Studio offers a no-code, graph-based interface for designing character personality, dialogue, and behavior without programming skills.

Yes, Inworld includes an expressive text-to-speech API with game-optimized voices and built-in character archetypes. TTS is integrated into the Inworld Engine.

Inworld uses usage-based pricing: you pay per million characters for text-to-speech, plus compute costs for LLM dialogue generation. Free credits are available to get started.

Yes, Inworld supports long-term memory, letting NPCs remember past interactions and maintain evolving relationships with players across multiple sessions.

Yes, the Inworld AI NPC Engine plugin is available on the Epic Games Marketplace for Unreal Engine integration.

HammerAI

Application information

| Field | Details |
|---|---|
| Developer | HammerAI (solo developer / small team) |
| Language support | Primarily English; character creation supports a variety of styles with no geographic restrictions |
| Pricing model | Free tier with unlimited conversations and characters; paid plans (Starter, Advanced, Ultimate) offer larger context sizes and advanced features |

Overview

HammerAI is an AI platform for creating realistic, expressive character dialogue. It lets writers, game developers, and role-players interact with AI-driven personalities through an intuitive chat interface, building rich lore, backstories, and engaging conversations. The platform supports both local language models and cloud-hosted options, so users can choose between privacy and scalability.

Key features

The free tier supports unlimited chats and character creation without restrictions.

Run capable LLMs locally through the desktop app for privacy, or use cloud-hosted models for convenience.

Build detailed lore, backstories, and character settings to enrich dialogue and keep it consistent.

A dedicated mode for writing dialogue for game cutscenes and interactive story elements.

The desktop app supports image generation during chats with built-in models such as Flux.

Invite up to 10 characters into a single group chat for complex multi-character interactions.

Detailed introduction

HammerAI offers a distinctive environment for creating and chatting with AI characters. With the desktop application, users can run language models locally on their own hardware using Ollama or llama.cpp, which keeps data private and works offline. For those who prefer the cloud, HammerAI offers secure remote hosting for unlimited AI chat with no account required.
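For the local route, the desktop app relies on runners such as Ollama. The sketch below does not show HammerAI's internals; it talks to Ollama's HTTP chat endpoint directly, assuming Ollama is running on its default port (11434) and that a model tagged `mistral-nemo` has already been pulled. `build_chat_request` and `send` are hypothetical helper names.

```python
import json
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # Ollama's default local endpoint


def build_chat_request(model: str, persona: str, history: list[dict[str, str]],
                       player_line: str) -> urllib.request.Request:
    payload = {
        "model": model,
        "messages": [{"role": "system", "content": persona}, *history,
                     {"role": "user", "content": player_line}],
        "stream": False,  # ask for one JSON object instead of a token stream
    }
    return urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> str:
    # Requires a running Ollama server with the model already downloaded.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["message"]["content"]
```

With a server running, `send(build_chat_request("mistral-nemo", persona, history, "Hello!"))` returns the NPC's reply as a string. Turning streaming off trades token-by-token display for simpler parsing.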

The character system supports lorebooks, personal backstories, and dialogue-style tuning, which makes it well suited to story development for games, screenwriting, and interactive fiction. The platform includes dedicated tools for generating cutscene dialogue, enabling fast creation of cinematic and narrative sequences with correct formatting for spoken dialogue, thoughts, and narration.
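Lorebooks are often implemented as keyword-triggered context: an entry is added to the prompt only when one of its keywords appears in the recent conversation, which keeps the prompt short. The following sketch assumes that design; it is not HammerAI's actual lorebook format, and `LoreEntry`, `select_lore`, and `build_system_prompt` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoreEntry:
    keywords: tuple[str, ...]  # trigger words (hypothetical format)
    text: str                  # lore injected when any keyword appears


def select_lore(entries: list[LoreEntry], recent_text: str, max_entries: int = 3) -> list[str]:
    """Return lore texts whose keywords appear in the recent conversation."""
    lowered = recent_text.lower()
    # Substring match, so "dragon" also fires on "dragons".
    hits = [e.text for e in entries if any(k.lower() in lowered for k in e.keywords)]
    return hits[:max_entries]


def build_system_prompt(persona: str, lore: list[str]) -> str:
    if not lore:
        return persona
    return persona + "\n\nRelevant lore:\n" + "\n".join(f"- {line}" for line in lore)
```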

Getting started guide

Download HammerAI from its itch.io page for Windows, macOS, or Linux.

Use the "Models" tab in the desktop app to download language models such as Mistral-Nemo or Smart Lemon Cookie.

Choose from existing AI character cards or create your own custom character in author mode.

Write dialogue or actions in plain text for speech, or in italics for narration and thoughts.

Click "Regenerate" if you are not satisfied with the AI's response, or edit your input to steer it toward better answers.

Create and store character backstories and world lore to keep context consistent across conversations.

Switch to cutscene dialogue mode to write cinematic or interactive story exchanges for games and stories.

Limitations & important notes

- Offline use requires downloading character and model files in advance

- Cloud models are limited to 4,096 tokens of context on the free plan; higher tiers offer more context

- Chats and characters are stored locally; syncing across devices is not available because there is no login system

- Cloud-hosted models use content filters; local models are less restricted

- Local model performance depends on available RAM and GPU resources
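With a 4,096-token context, long chats must drop older turns. One common approach is a sliding window that keeps the newest messages that fit. This is a sketch under two assumptions: tokens are estimated at roughly four characters each (a real implementation would use the model's own tokenizer), and 512 tokens are reserved for the reply. It does not describe HammerAI's own truncation strategy.

```python
def estimate_tokens(text: str) -> int:
    # Rough heuristic (about 4 characters per token for English text).
    return max(1, len(text) // 4)


def trim_history(history: list[dict[str, str]], system_prompt: str,
                 context_limit: int = 4096, reserve_for_reply: int = 512) -> list[dict[str, str]]:
    """Keep the most recent messages that fit in the context window."""
    budget = context_limit - reserve_for_reply - estimate_tokens(system_prompt)
    kept: list[dict[str, str]] = []
    for message in reversed(history):  # walk from newest to oldest
        cost = estimate_tokens(message["content"])
        if cost > budget:
            break                      # everything older is dropped too
        kept.append(message)
        budget -= cost
    kept.reverse()                     # restore chronological order
    return kept
```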

Frequently asked questions

Yes. HammerAI offers a free tier that supports unlimited conversations and character creation. Paid plans (Starter, Advanced, Ultimate) provide larger context sizes and extra features for advanced users.

Yes, via the desktop app, which runs local language models. You need to download character and model files in advance to enable offline use.

Yes. The desktop app supports image generation during chat with built-in models such as Flux, so you can create visual content alongside your conversations.

Use the lorebook feature to build and manage character backstories, personality traits, and world knowledge. This keeps context consistent throughout your conversations.

You can regenerate the response, edit your input to give better guidance, or adjust your role-play instructions to steer the AI toward better responses.

Large Language Models (LLMs)

Application information

| Field | Details |
|---|---|
| Developer | Multiple providers: OpenAI (GPT series), Meta (LLaMA), Anthropic (Claude), and others |
| Language support | Primarily English; multilingual support varies by model (Spanish, French, Chinese, and more available) |
| Pricing model | Freemium or paid; free tiers exist for some APIs, while larger models or high volume require a subscription or pay-per-use |

Overview

Large language models (LLMs) are AI systems that generate coherent, context-aware text, which makes them suitable for dynamic game experiences. In game development, LLMs power intelligent NPCs with real-time dialogue, adaptive storytelling, and interactive role-play. Unlike static scripts, LLM-driven characters respond to player input, maintain conversational memory when the game feeds the history back into each prompt, and create unique story experiences that evolve with the player's choices.

How LLMs work in games

LLMs are trained on large amounts of text to predict and generate natural language, and developers adapt that output to the game's context. They use prompt design and fine-tuning to shape NPC responses while keeping the story coherent. Techniques such as retrieval-augmented generation (RAG) let characters recall past interactions and lore, producing believable, engaging NPCs for role-playing, adventure, and story-driven games.
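Retrieval-augmented generation can be illustrated without any external service. Production RAG normally uses an embedding model and a vector store; the sketch below substitutes plain word-count cosine similarity so it runs with the standard library alone. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def _vector(text: str) -> Counter:
    # Bag-of-words term counts; a stand-in for a learned embedding.
    return Counter(re.findall(r"[a-z']+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k documents that share vocabulary with the query, best first."""
    q = _vector(query)
    scored = sorted(((_cosine(q, _vector(d)), d) for d in documents),
                    key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]


def augment_prompt(persona: str, query: str, documents: list[str]) -> str:
    # Only the retrieved lore goes into the prompt; the model never sees the rest.
    facts = "\n".join(f"- {d}" for d in retrieve(query, documents))
    return f"{persona}\n\nFacts you know:\n{facts}\n\nPlayer: {query}"
```

Because only retrieved facts reach the model, an NPC can draw on a large lore base while each prompt stays small. Swapping `_vector` for real embeddings is what lets matching work on meaning rather than shared words.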

Creates context-sensitive NPC conversations in real time that respond naturally to player input.

Generates quests, events, and story branches that adapt to game state and player decisions.

Maintains character consistency with defined backstories, goals, and personality traits.

Recalls past interactions and game-world facts for coherent multi-turn dialogue and persistent character knowledge.

Getting started

Choose a model (OpenAI GPT, Meta LLaMA, Anthropic Claude) that fits your game's requirements and performance needs.

Use cloud APIs for convenience, or set up local instances on compatible hardware for more control and privacy.

Write detailed NPC backstories, personality traits, and knowledge bases to guide the LLM's responses.

Craft prompts that steer LLM responses according to game context, player input, and narrative goals.

Connect the LLM output to your game's dialogue system using SDKs, APIs, or custom middleware.

Evaluate NPC dialogue quality, refine prompts, and tune memory handling to ensure consistency and immersion.
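One way to connect LLM output to a dialogue system is to ask the model for structured output and validate it before the game uses it. The sketch assumes a hypothetical JSON schema with `text`, `emotion`, and `action` fields, and a hypothetical set of emotions the animation system supports; anything malformed degrades to a safe default instead of crashing.

```python
import json
from dataclasses import dataclass
from typing import Optional

# Hypothetical set of emotions the game's animation/voice system can play.
ALLOWED_EMOTIONS = {"neutral", "happy", "angry", "sad", "afraid"}


@dataclass
class NpcLine:
    text: str
    emotion: str = "neutral"
    action: Optional[str] = None


def parse_npc_output(raw: str, fallback_text: str = "...") -> NpcLine:
    """Parse a JSON reply like {"text": ..., "emotion": ..., "action": ...}."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        # The model ignored the format: treat the output as plain dialogue.
        return NpcLine(text=raw.strip() or fallback_text)
    if not isinstance(data, dict) or not isinstance(data.get("text"), str):
        return NpcLine(text=fallback_text)
    emotion = data.get("emotion")
    if not isinstance(emotion, str) or emotion not in ALLOWED_EMOTIONS:
        emotion = "neutral"  # unknown emotions fall back to a safe default
    action = data.get("action")
    return NpcLine(text=data["text"], emotion=emotion,
                   action=action if isinstance(action, str) else None)
```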

Important considerations

- Hallucinations: LLMs can produce incoherent or factually wrong dialogue when prompts are vague; use clear, specific instructions

- Hardware & latency: Real-time integration requires powerful hardware or cloud infrastructure to keep gameplay responsive

- Ethical & bias risks: LLM output can contain unintended biases; implement moderation and careful prompt design

- Subscription costs: High-volume use or fine-tuning usually requires paid API access

Frequently asked questions

Yes. With careful persona design, memory integration, and prompt engineering, LLMs can keep a character consistent across multiple interactions and conversations.

Yes, although performance depends on hardware or cloud latency. Smaller local models may be preferable for real-time responses, while cloud APIs work well for turn-based or asynchronous gameplay.

Many models support multilingual dialogue, but quality varies by language and by model. Test thoroughly in your target languages.

Implement moderation filters, constrain prompts with clear guidelines, and use the safety layers provided by the model platform. Regular testing and community feedback help identify and fix problems.

Some free tiers exist for basic use, but larger-context models or high-volume scenarios usually require a subscription or pay-per-use. Estimate costs based on your game's scope and player base.

Convai

Application information

| Field | Details |
|---|---|
| Developer | Convai Technologies Inc. |
| Language support | 65+ languages supported worldwide via web and engine integrations. |
| Pricing model | Free access to the Convai Playground; enterprise and large-scale deployments require paid plans or a licensing inquiry. |

What is Convai?

Convai is a conversational AI platform that lets developers create highly interactive, embodied AI characters (NPCs) for games, XR worlds, and virtual experiences. These agents perceive their environment, listen and speak naturally, and respond in real time. With integrations for Unity, Unreal Engine, and the web, Convai brings virtual humans to life, adding narrative depth and realistic dialogue to interactive worlds.

Key features

NPCs respond intelligently to voice, text, and environmental stimuli for dynamic interactions.

Low-latency voice chat with AI characters for natural, immersive dialogue.

Upload documents and lore to shape a character's knowledge and keep conversations consistent and context-aware.

Graph-based tools for defining triggers, goals, and dialogue flows while still allowing flexible, open-ended interactions.

A native Unity SDK and Unreal Engine plugin for embedding AI NPCs in your projects.

AI characters can converse autonomously with each other in shared scenes for dynamic storytelling.
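Convai handles NPC-to-NPC conversation inside the engine. To show the underlying idea, here is a generic turn-taking loop, not Convai's API: each NPC sees the shared transcript and speaks in turn. `npc_conversation` and the `[END]` stop marker are hypothetical, and `generate` would wrap a real model call.

```python
from typing import Callable

# (speaker's persona, shared transcript) -> that speaker's next line.
LineGenerator = Callable[[str, list[tuple[str, str]]], str]


def npc_conversation(npcs: dict[str, str], generate: LineGenerator,
                     opening: str, max_turns: int = 6) -> list[tuple[str, str]]:
    """Let NPCs take turns speaking; each one sees the whole transcript so far."""
    names = list(npcs)
    transcript: list[tuple[str, str]] = [(names[0], opening)]
    for turn in range(1, max_turns):
        speaker = names[turn % len(names)]  # simple round-robin
        line = generate(npcs[speaker], transcript)
        transcript.append((speaker, line))
        if line.endswith("[END]"):          # hypothetical marker the prompt asks for
            break
    return transcript
```

A hard `max_turns` cap matters here: without it, two models can keep talking indefinitely and run up costs.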

Getting started guide

Create a Convai account on the website to access the Playground and start building AI characters.

In the Playground, define your character's personality, backstory, knowledge base, and voice settings.

Use Convai's Narrative Design graph to set up the triggers, decision points, and goals that steer the character's behavior.

Unity: Download the Convai Unity SDK from the Asset Store, import it, and configure your API key.

Unreal Engine: Install the Convai Unreal Engine plugin (Beta) to enable voice, perception, and real-time conversations.

Enable Convai's NPC2NPC system to let AI characters converse autonomously with each other.

Playtest your scenes thoroughly, and refine machine learning parameters, dialogue triggers, and character behaviors based on feedback.
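A narrative design graph constrains open-ended dialogue with explicit story state. The minimal state machine below is a hypothetical illustration, not Convai's data format: triggers move the NPC between states, and each state carries a goal that can be injected into the prompt so the model improvises within it.

```python
from dataclasses import dataclass, field


@dataclass
class NarrativeGraph:
    """Tiny trigger-driven state machine (hypothetical; not Convai's format)."""
    state: str
    # (current state, trigger) -> next state
    transitions: dict[tuple[str, str], str]
    # Per-state goal text to inject into the NPC's prompt.
    goals: dict[str, str] = field(default_factory=dict)

    def fire(self, trigger: str) -> bool:
        next_state = self.transitions.get((self.state, trigger))
        if next_state is None:
            return False  # trigger is not relevant in this state; ignore it
        self.state = next_state
        return True

    def current_goal(self) -> str:
        return self.goals.get(self.state, "")
```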

Key limitations & considerations

- Character avatars created in Convai's web tool may require external models for export to a game engine.

- Managing narrative flow across multiple AI agents requires careful design and planning.

- Real-time voice conversations may experience latency depending on backend performance and network conditions.

- Complex or large-scale deployments usually require enterprise licenses; the free tier is mainly available through the Playground.

Frequently asked questions

Yes. Convai supports NPC-to-NPC conversations through its NPC2NPC feature in both Unity and Unreal Engine, enabling autonomous character interactions.

Basic character creation is no-code via the Playground, but integrating with game engines (Unity, Unreal) requires development skills and technical expertise.

Yes. You can define a knowledge base and memory system for each character, keeping dialogue consistent and context-aware across interactions.

Yes. Real-time voice conversations are fully supported, including speech-to-text and text-to-speech for natural interactions.

Yes. Convai offers enterprise options, including on-premises deployment and security certifications such as ISO 27001, for commercial and large-scale projects.

NVIDIA ACE

Application information

| Field | Details |
|---|---|
| Developer | NVIDIA Corporation |
| Language support | Multiple languages for text and speech; available to developers worldwide |
| Pricing model | Enterprise/developer access through NVIDIA programs; a commercial license is required |

What is NVIDIA ACE?

NVIDIA ACE (Avatar Cloud Engine) is a generative AI platform that lets developers create intelligent, lifelike NPCs for games and virtual worlds. It combines language models, speech recognition, voice synthesis, and real-time facial animation to deliver natural, interactive dialogue and autonomous character behavior. With ACE, developers can build NPCs that respond to context, converse naturally, and act according to their personalities, which noticeably increases immersion.

How it works

NVIDIA ACE uses a set of specialized AI components that work together:

- NeMo: language understanding and dialogue modeling

- Riva: real-time speech-to-text and text-to-speech conversion

- Audio2Face: real-time facial animation, lip-sync, and emotional expression

NPCs powered by ACE perceive audio and visual cues, plan actions autonomously, and interact with players through realistic dialogue and expressions. Developers can fine-tune NPC personalities, memories, and conversational context for consistent, engaging interactions. The platform supports integration with popular game engines and cloud deployment, enabling scalable AI character implementations for complex game scenarios.
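The data flow between these components can be sketched as a chain of stages. This is not ACE code: each stage is a plain callable standing in for the role NeMo (or another LLM), Riva, or Audio2Face would play, and the face stage is simplified to return a list of animation weights. Timing each stage shows where latency accumulates.

```python
import time
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class NpcSpeechPipeline:
    # Each stage is a hypothetical callable standing in for one component's role.
    speech_to_text: Callable[[bytes], str]        # role of Riva speech recognition
    generate_reply: Callable[[str], str]          # role of the language model (e.g. NeMo)
    text_to_speech: Callable[[str], bytes]        # role of Riva speech synthesis
    animate_face: Callable[[bytes], list[float]]  # role of Audio2Face (audio in, weights out)

    def respond(self, player_audio: bytes) -> tuple[bytes, list[float], dict[str, float]]:
        timings: dict[str, float] = {}

        def timed(stage: str, fn: Callable[[Any], Any], arg: Any) -> Any:
            # Record wall-clock seconds per stage.
            start = time.perf_counter()
            result = fn(arg)
            timings[stage] = time.perf_counter() - start
            return result

        text = timed("asr", self.speech_to_text, player_audio)
        reply = timed("llm", self.generate_reply, text)
        audio = timed("tts", self.text_to_speech, reply)
        weights = timed("face", self.animate_face, audio)
        return audio, weights, timings
```

Because the stages run strictly in sequence, their latencies add up; real systems often stream partial results between stages to start speaking sooner.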

Key features

Fine-tune NPC dialogue with character backstories, personalities, and conversational context.

Speech-to-text and text-to-speech powered by NVIDIA Riva for natural voice interactions.

Real-time facial expressions and lip-sync with Audio2Face in NVIDIA Omniverse.

NPCs perceive audio and visual input, act autonomously, and make intelligent decisions.

Cloud or on-device deployment via a flexible SDK for scalable, efficient integration.

Getting started

Installation & setup guide

Join the NVIDIA Developer Program to get the ACE SDK, API references, and documentation.

Make sure you have an NVIDIA GPU (RTX series recommended) or a cloud instance for real-time AI inference and processing.

Install and configure the three core components:

- NeMo: deploy for dialogue modeling and language understanding

- Riva: configure for speech-to-text and text-to-speech services

- Audio2Face: enable for real-time facial animation and expressions

Configure personality traits, memory systems, behavior parameters, and conversation rules for each NPC.

Connect the ACE components to Unity, Unreal Engine, or your own game engine to enable NPC interactions in your game world.

Evaluate dialogue quality, animation smoothness, and response latency. Fine-tune AI parameters and hardware allocation for the best player experience.

Frequently asked questions

Yes. NVIDIA Riva provides real-time speech-to-text and text-to-speech, so NPCs can hold natural, voice-based conversations with players.

Yes. Audio2Face provides real-time facial animation, lip-sync, and emotional expression, making NPCs visually expressive and emotionally engaging.

Yes. With RTX GPUs or optimized cloud deployment, ACE supports the low latency that real-time games need.

Yes. Engine integration and setting up multiple components require solid programming skills and experience with game development frameworks.

No. Access is through NVIDIA's developer program, and enterprise licensing or a subscription is required for commercial use.

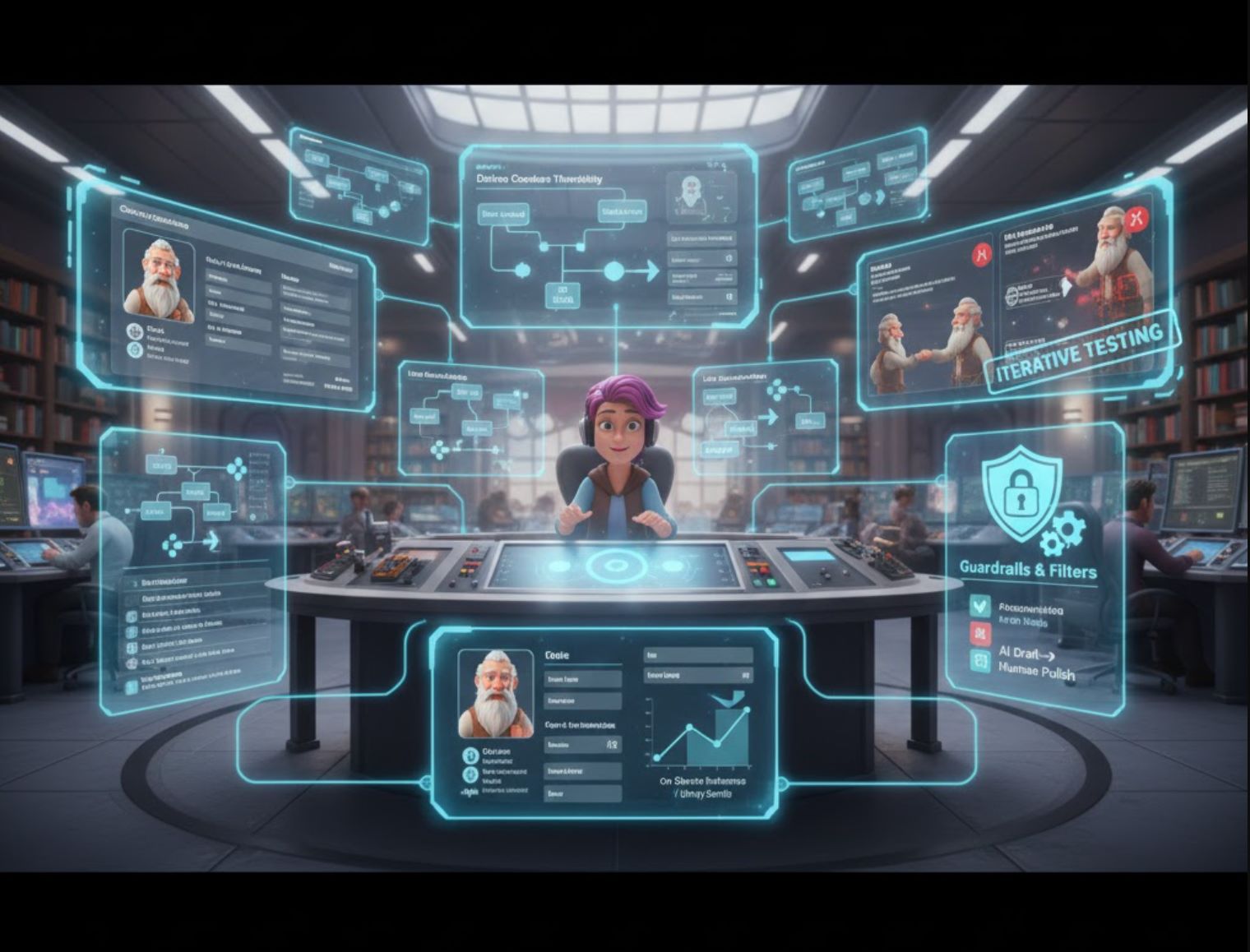

Best practices for developers

Define characters carefully

Write a clear background and style for each NPC, and use it as the AI's "system prompt" so it knows how to speak. Ubisoft's experiment had writers create detailed character notes before the AI was involved.

Maintain context

Include relevant game context in every prompt. Send the player's latest messages and key events (completed quests, relationships) so the AI's responses stay on topic. Many systems store conversation history to simulate memory.
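Here is a minimal sketch of packaging game context for a prompt, assuming a hypothetical `GameState` with completed quests and relationship scores from -100 to 100. Scores are turned into plain phrases and only the last few chat lines are included; whether words work better than raw numbers for a given model is worth testing.

```python
from dataclasses import dataclass, field


@dataclass
class GameState:
    """Hypothetical snapshot of what the NPC should know about the world."""
    completed_quests: list[str] = field(default_factory=list)
    relationships: dict[str, int] = field(default_factory=dict)  # -100 (hostile) .. 100 (close)


def describe_relationship(score: int) -> str:
    if score >= 50:
        return "trusts"
    if score <= -50:
        return "distrusts"
    return "is neutral toward"


def build_context(npc_name: str, state: GameState, recent_chat: list[str],
                  max_chat_lines: int = 4) -> str:
    lines = [f"Completed quests: {', '.join(state.completed_quests) or 'none'}."]
    for other, score in sorted(state.relationships.items()):  # stable order for testing
        lines.append(f"{npc_name} {describe_relationship(score)} {other}.")
    lines.append("Recent conversation:")
    lines.extend(recent_chat[-max_chat_lines:])  # only the latest exchanges
    return "\n".join(lines)
```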

Use safeguards

Add filters and constraints. Define word lists for the AI to avoid, or program triggers that hand off to special dialogue trees. Ubisoft used safeguards so the NPC never strayed from its personality.
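Triggers can route story-critical topics to authored dialogue before the model is ever called. In this sketch the trigger patterns, tree names, and lines are hypothetical, and `llm` stands in for a real model call.

```python
import re
from typing import Callable

# Hypothetical scripted dialogue trees keyed by trigger name.
SCRIPTED_TREES: dict[str, list[str]] = {
    "main_quest": ["The mill burned the night the stranger arrived.",
                   "Find Ragna; she saw everything."],
    "shop": ["Ale is two coppers, stew is three."],
}

# Hypothetical regex triggers, checked in order before any model call.
TRIGGERS = [
    (re.compile(r"\b(mill|fire|stranger)\b", re.IGNORECASE), "main_quest"),
    (re.compile(r"\b(buy|price|cost)\b", re.IGNORECASE), "shop"),
]


def route(player_line: str, llm: Callable[[str], str]) -> list[str]:
    """Use authored dialogue for story-critical topics, the LLM for everything else."""
    for pattern, tree in TRIGGERS:
        if pattern.search(player_line):
            return SCRIPTED_TREES[tree]
    return [llm(player_line)]
```

Keeping plot-critical lines hand-written means the model cannot contradict the main story, while small talk still gets generated responses.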

Test iteratively

Playtest conversations and refine prompts. If an NPC response feels out of character, adjust the input or add example dialogue. If the answer still isn't quite your character, go back and review the prompt, context, and settings that produced it.

Manage cost and performance

Use AI strategically: not every one-off line needs it. Consider pre-generating common responses or combining AI with traditional dialogue trees. Unity's Sentis engine can run optimized models on-device to reduce server calls.
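Pre-generating common responses can be as simple as a cache in front of the model. The sketch below is hypothetical (`CachedDialogue`, the normalization rule, and the example lines are illustrations): authored or pre-generated replies are served directly, and the model is called at most once per distinct normalized line.

```python
from typing import Callable, Optional


def normalize(line: str) -> str:
    # Ignore case, punctuation, and extra spaces so trivial variants share an entry.
    kept = "".join(ch for ch in line.lower() if ch.isalnum() or ch.isspace())
    return " ".join(kept.split())


class CachedDialogue:
    def __init__(self, llm: Callable[[str], str], pregenerated: Optional[dict[str, str]] = None):
        self.llm = llm
        self.cache = {normalize(k): v for k, v in (pregenerated or {}).items()}
        self.llm_calls = 0  # counts paid model calls

    def reply(self, player_line: str) -> str:
        key = normalize(player_line)
        if key not in self.cache:
            self.llm_calls += 1
            self.cache[key] = self.llm(player_line)
        return self.cache[key]
```

A cache trades variety for cost: the NPC will repeat itself for identical questions, and if replies depend on game state, the cache key must include that state too.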

Blend AI with hand-written dialogue

Remember that human writers should review the AI's output. Use AI as inspiration, not as the final voice; the story arc must come from people. Many teams use AI to draft or expand dialogue, then review and polish the results.

The future of game dialogue

AI is opening a new era of video game dialogue. From indie mods to AAA research labs, developers are using generative models to make NPCs talk, react, and remember like never before. Official initiatives such as Microsoft's Project Explora and Ubisoft's NEO NPC show the industry embracing this technology, with a consistent focus on ethics and writer oversight.

Today's tools (GPT-4, Inworld AI, Convai, Unity assets, and others) let creators prototype rich dialogue quickly. In the future, we may see fully procedural, personally generated stories told in real time. For now, AI dialogue means more creative flexibility and immersion, as long as we use it responsibly alongside human artistry.
