What is a Large Language Model?

A Large Language Model (LLM) is a type of artificial intelligence trained on massive amounts of text data to understand, generate, and process human language. LLMs power many modern AI applications such as chatbots, translation tools, and content creation systems. By learning patterns from billions of words, large language models can often provide accurate answers, create human-like text, and support tasks across industries.

In simple terms, an LLM has been fed billions of words or more (often from the Internet) so it can predict and produce text in context. These models are built on deep neural networks, most commonly the transformer architecture. Because of their scale, LLMs can perform many language tasks (chatting, translation, writing) without being explicitly programmed for each one.

Core Features of Large Language Models

Key features of large language models include:

Massive Training Data

LLMs are trained on vast text corpora (billions of pages). This "large" training set gives them broad knowledge of grammar and facts.

Transformer Architecture

They use transformer neural networks with self-attention, which means every word in a sentence is compared to every other word in parallel. This lets the model learn context efficiently.

Billions of Parameters

The models contain billions of weights (parameters), the numerical values that are adjusted during training. These parameters capture complex patterns in language. For example, GPT-3 has 175 billion parameters.
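To get a feel for what 175 billion parameters means in practice, the sketch below estimates the memory needed just to store the weights. The function name `weight_memory_gb` and the bytes-per-parameter values (4 for 32-bit floats, 2 for 16-bit floats) are assumptions chosen for illustration; a real deployment also needs memory for activations and other overhead.

```python
def weight_memory_gb(num_parameters: int, bytes_per_parameter: int) -> float:
    """Return the memory needed to store the weights, in gigabytes (10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


GPT3_PARAMETERS = 175_000_000_000  # the GPT-3 figure quoted above

fp32_gb = weight_memory_gb(GPT3_PARAMETERS, 4)  # assuming 32-bit floats
fp16_gb = weight_memory_gb(GPT3_PARAMETERS, 2)  # assuming 16-bit floats

print(f"32-bit weights: {fp32_gb:.0f} GB")  # 32-bit weights: 700 GB
print(f"16-bit weights: {fp16_gb:.0f} GB")  # 16-bit weights: 350 GB
```

Even at 16 bits per parameter, the weights alone would not fit in the memory of a single typical GPU, which is one reason such models are usually split across many devices.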

Self-Supervised Learning

LLMs learn by predicting missing words in text without human labels. For instance, during training the model tries to guess the next word in a sentence. By doing this over and over on huge amounts of data, the model internalizes grammar, facts, and even some reasoning patterns.
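The idea that raw text supplies its own labels can be sketched with a tiny word-counting model. This is a deliberately simplified stand-in (a bigram counter, not a neural network), and the function names and the toy corpus are made up for illustration.

```python
from collections import Counter, defaultdict
from typing import Optional


def next_word_pairs(text: str) -> list[tuple[str, str]]:
    """Turn raw text into (current word, next word) training pairs.

    No human labels are needed: the target is simply the word that follows.
    """
    words = text.lower().split()
    return list(zip(words, words[1:]))


def train_bigram_counts(text: str) -> dict[str, Counter]:
    """Count how often each word follows each other word."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for current, following in next_word_pairs(text):
        counts[current][following] += 1
    return counts


def predict_next(counts: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Toy corpus, invented for this example.
corpus = "the cat sat on the mat . the cat ate the fish ."
counts = train_bigram_counts(corpus)
print(predict_next(counts, "the"))  # cat
```

An LLM replaces the count table with a neural network that looks at much more context than one word, but the training signal is the same: the next word in the text.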

Fine-tuning and Prompting

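After pre-training, LLMs can be fine-tuned on a specific task or guided by prompts. This means the same model can adapt to new tasks like medical Q&A or creative writing in two ways: by further training on a smaller, task-specific dataset (fine-tuning), or by supplying carefully written instructions and examples at inference time (prompting), which leaves the model's weights unchanged.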
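As a rough illustration of prompting, the sketch below assembles a "few-shot" prompt: an instruction, a couple of worked examples, and a new input for the model to complete. The function name, the `Input:`/`Output:` layout, and the sentiment task are assumptions made for this example, not a format required by any particular model.

```python
def build_few_shot_prompt(
    instruction: str, examples: list[tuple[str, str]], query: str
) -> str:
    """Assemble an instruction, worked examples, and a new query into one prompt."""
    lines = [instruction, ""]
    for question, answer in examples:
        lines.append(f"Input: {question}")
        lines.append(f"Output: {answer}")
        lines.append("")
    # The prompt ends with an open "Output:" for the model to fill in.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    instruction="Classify the sentiment of each review as positive or negative.",
    examples=[
        ("The battery lasts all day.", "positive"),
        ("The screen cracked within a week.", "negative"),
    ],
    query="Setup was quick and painless.",
)
print(prompt)
```

The resulting string would be sent to a model as-is. Changing the instruction and examples retargets the same model to a different task without any retraining.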

Together, these features let an LLM understand and generate text like a human. In practice, a well-trained LLM can infer context, complete sentences, and produce fluent responses on many topics (from casual chat to technical subjects) without task-specific engineering.

How LLMs Work: The Transformer Architecture

LLMs typically use the transformer network architecture. This architecture is a deep neural network with many layers of connected nodes. A key component is self-attention, which lets the model weigh the importance of each word relative to all others in a sentence at once.
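A minimal sketch of self-attention, in plain Python, may make this concrete. It is simplified on purpose: real transformers first pass each embedding through learned query, key, and value projections and use many attention heads, while here the raw embeddings play all three roles. The two-dimensional embeddings are hypothetical values picked for the example.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Convert raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: list[float], b: list[float]) -> float:
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def self_attention(
    embeddings: list[list[float]],
) -> tuple[list[list[float]], list[list[float]]]:
    """Scaled dot-product self-attention without learned projections.

    Returns (outputs, weights); weights[i][j] is how much token i attends to token j.
    """
    dim = len(embeddings[0])
    scale = math.sqrt(dim)
    # Every token is scored against every token, including itself.
    weights = [
        softmax([dot(query, key) / scale for key in embeddings])
        for query in embeddings
    ]
    # Each output is a weighted average of all token embeddings.
    outputs = [
        [sum(w * value[d] for w, value in zip(row, embeddings)) for d in range(dim)]
        for row in weights
    ]
    return outputs, weights


# Hypothetical 2-dimensional embeddings for a three-token input.
tokens = ["the", "cat", "sat"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

outputs, weights = self_attention(embeddings)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each row of `weights` depends only on the input embeddings, not on the other rows, so all rows can be computed at the same time. That independence is what makes the operation well suited to parallel hardware.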

Sequential Processing (older models such as RNNs)

- Process words one by one
- Slower training on GPUs
- Limited context understanding

Parallel Processing (transformers)

- Process entire input simultaneously
- Much faster training on GPUs
- Superior context comprehension

Unlike older sequential models (like RNNs), transformers process the whole input in parallel, allowing much faster training on GPUs. During training, the LLM adjusts its billions of parameters by trying to predict each next word in its massive text corpus.

Over time, this process teaches the model grammar and semantic relationships. The result is a model that, given a prompt, can generate coherent, contextually relevant language on its own.
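The training signal described above can be sketched as a loss function. A common choice for next-word prediction is cross-entropy: the model outputs a score (logit) for every word in its vocabulary, and the loss is the negative log of the probability it gave to the word that actually came next. The three-word vocabulary and the logit values below are made up for illustration.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - highest) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def next_word_loss(logits: list[float], target_index: int) -> float:
    """Cross-entropy loss: negative log probability of the true next word."""
    probabilities = softmax(logits)
    return -math.log(probabilities[target_index])


# Hypothetical vocabulary and context "the cat sat on the ...".
vocabulary = ["mat", "moon", "fish"]
target = vocabulary.index("mat")  # the word that actually came next

# Untrained model: every word is equally likely.
loss_before = next_word_loss([0.0, 0.0, 0.0], target)
# After some training: the model strongly prefers "mat".
loss_after = next_word_loss([3.0, 0.0, 0.0], target)

print(f"loss before: {loss_before:.3f}")
print(f"loss after:  {loss_after:.3f}")
```

Training repeatedly nudges the parameters in whatever direction lowers this loss, averaged over every position in the corpus.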

Applications of LLMs

Because they understand and generate natural language, LLMs have many applications across industries. Some common uses are:

Conversational AI

Content Generation

Translation and Summarization

Question Answering

Code Generation

Research and Analysis

Well-known examples include GPT-3.5 and GPT-4, the models behind ChatGPT, and Google's PaLM and Gemini. OpenAI has not published parameter counts for GPT-3.5 or GPT-4, so size estimates for them are unofficial. Developers often interact with these LLMs through cloud services or libraries, customizing them for specific tasks like document summarization or coding help.

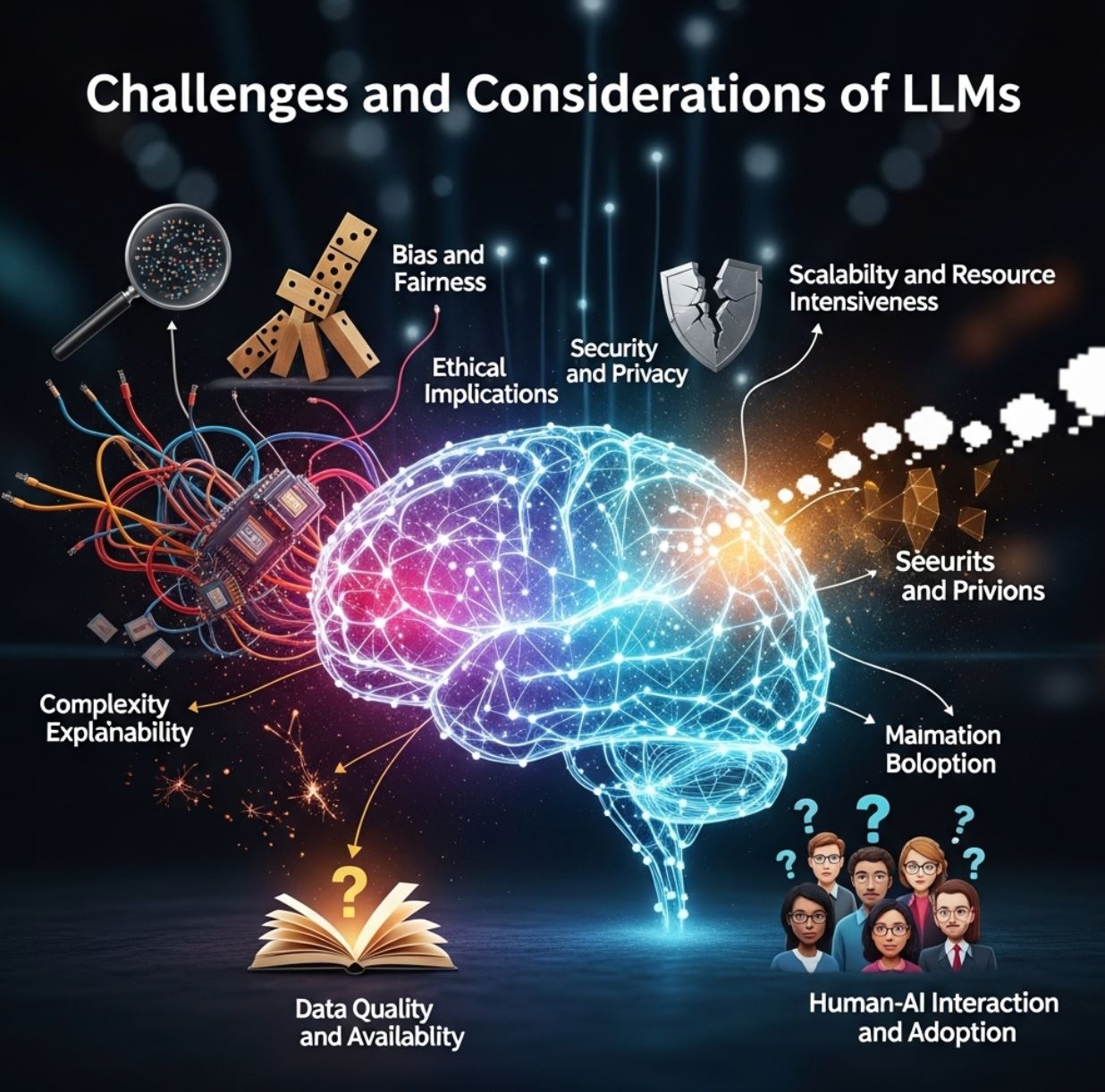

Challenges and Considerations

LLMs are powerful, but they are not perfect. Because they learn from real-world text, they can reproduce biases present in their training data. An LLM might generate content that is culturally biased, or it might output offensive or stereotypical language if not carefully filtered.

Bias Issues

Hallucinations

Resource Requirements

Accuracy Verification

Another issue is hallucinations: the model can produce fluent-sounding answers that are completely incorrect or fabricated. For example, an LLM might confidently invent a false fact or name. These errors occur because the model is essentially guessing the most plausible continuation of text, not verifying facts.
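The toy example below illustrates why plausibility and truth can diverge. The probabilities are invented for illustration (they are not measurements from any model), and `most_plausible` is a hypothetical helper that mimics greedy decoding, where the highest-probability continuation is always chosen.

```python
def most_plausible(continuations: dict[str, float]) -> str:
    """Pick the continuation with the highest probability (greedy decoding)."""
    return max(continuations, key=continuations.get)


# Made-up probabilities a model might assign after the prompt
# "The capital of Australia is". Illustrative only, not measured.
continuations = {"Sydney": 0.55, "Canberra": 0.40, "Melbourne": 0.05}

answer = most_plausible(continuations)
print(answer)  # Sydney
```

Nothing in the selection step checks the answer against a source of truth. If a wrong continuation (here "Sydney" instead of the correct "Canberra") happens to look more likely given the training text, it is what gets produced.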

For these reasons, users of LLMs should check results for accuracy and bias. Additionally, training and running LLMs require huge compute resources (powerful GPUs/TPUs and lots of data), which can be costly.

Summary and Future Outlook

In summary, a large language model is a transformer-based AI system trained on vast amounts of text data. It has learned patterns of language through self-supervised training, giving it the ability to generate fluent, contextually relevant text. Because of their scale, LLMs can handle a wide range of language tasks, from chatting and writing to translating and coding, often with fluency comparable to human writing.

These models are poised to reshape how we interact with technology and access information.

— Leading AI researchers

As of 2025, LLMs continue to advance (including multimodal extensions that handle images or audio) and remain at the forefront of AI innovation, making them a central component of modern AI applications.
