What is Edge AI?

Edge AI (Edge Artificial Intelligence) is the combination of artificial intelligence (AI) and edge computing. Instead of sending data to the cloud for processing, Edge AI enables smart devices such as smartphones, cameras, robots, or IoT machines to analyze and make decisions directly on the device. This approach helps reduce latency, save bandwidth, enhance security, and provide real-time responsiveness.

Edge AI (sometimes called "AI at the edge") means running artificial intelligence and machine-learning models on local devices (sensors, cameras, smartphones, industrial gateways, etc.) rather than in remote data centers. In other words, the "edge" of the network – where data is generated – handles the computing. This lets devices analyze data immediately as it's collected, instead of constantly sending raw data to the cloud.

Edge AI enables real-time, on-device processing without relying on a central server. For example, a camera with Edge AI can detect and classify objects on the fly, giving instant feedback. By processing data locally, Edge AI can work even with intermittent or no internet connection.

— IBM Research

In summary, Edge AI simply brings computation closer to the data source – deploying intelligence on devices or nearby nodes, which speeds up responses and reduces the need to transmit everything to the cloud.

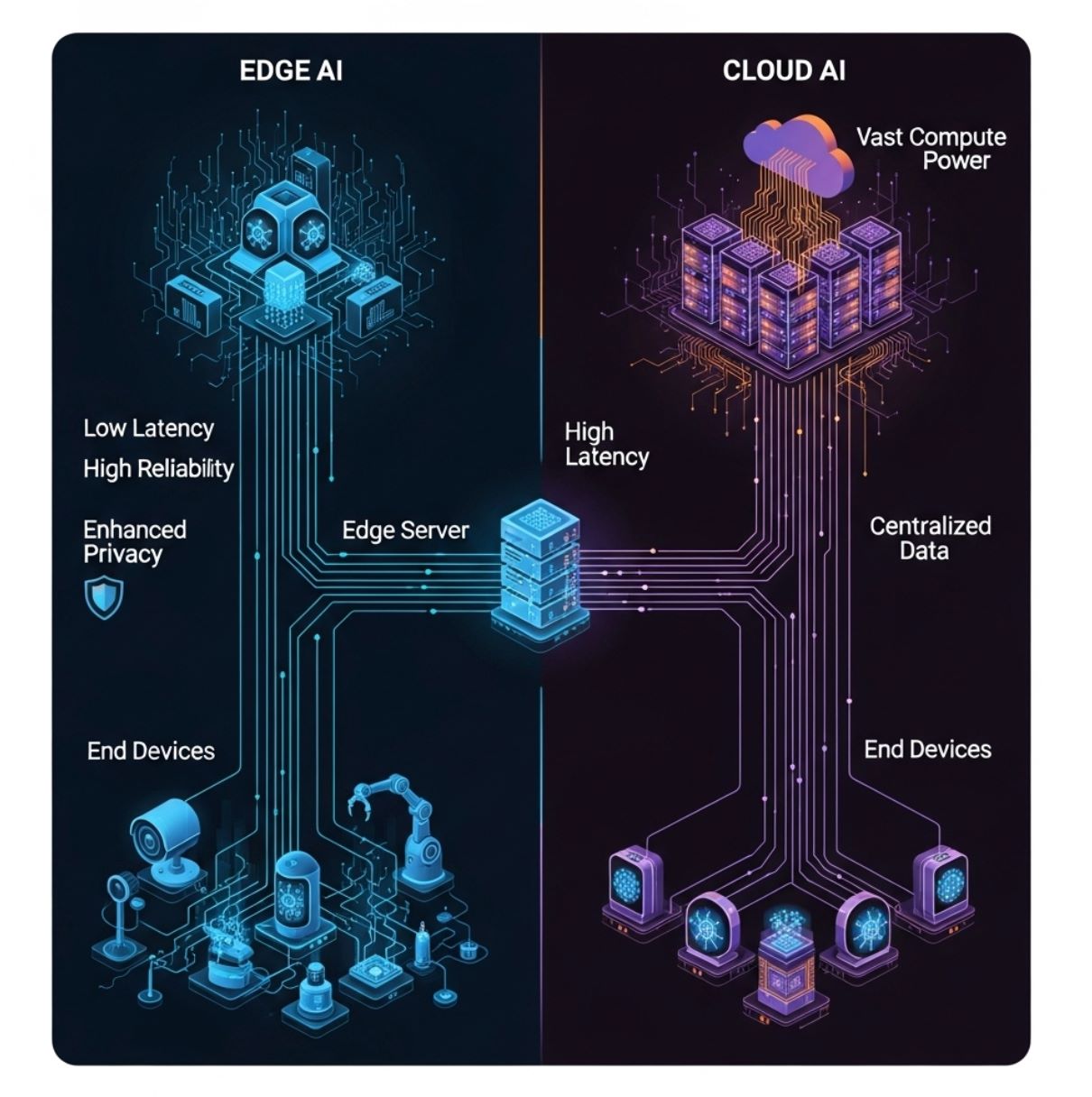

Edge AI vs Cloud AI: Key Differences

Unlike traditional cloud-based AI (which sends all data to centralized servers), Edge AI distributes computing among on-site hardware. The diagram below illustrates a simple edge-computing model: end devices (bottom layer) feed data to an edge server or gateway (middle layer) instead of only to the distant cloud (top layer).

In this setup, AI inference can happen on the device or the local edge node, greatly reducing communication delays.

Traditional Approach (Cloud AI)

- Data sent to remote servers

- Higher latency due to network delays

- Requires continuous connectivity

- Virtually unlimited, scalable compute resources

- Privacy concerns with data transmission

Modern Approach (Edge AI)

- Local processing on devices

- Millisecond response times

- Works offline when needed

- Resource-constrained but efficient

- Enhanced privacy protection

Latency

Edge AI minimizes lag. Because processing is local, decisions can occur in milliseconds.

- Critical for time-sensitive tasks

- Avoiding car accidents

- Controlling robots in real-time

Bandwidth

Edge AI cuts network load by analyzing or filtering data on-site.

- Far less information sent upstream

- More efficient and cost-effective

- Reduces network congestion
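
The on-site filtering idea above can be sketched in a few lines. This is a minimal illustration, not a production pipeline: the `Reading` type, the sensor name, the sample values, and the threshold of 60.0 are all assumptions made up for the example.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Reading:
    """Hypothetical sensor sample used only for this illustration."""
    sensor_id: str
    value: float


def filter_for_upload(readings: Iterable[Reading], threshold: float) -> List[Reading]:
    """Keep only readings whose value exceeds the threshold.

    Everything else is handled or discarded locally and never leaves the device.
    """
    return [r for r in readings if r.value > threshold]


def upload_ratio(total: int, uploaded: int) -> float:
    """Fraction of readings sent upstream (0.0 when there were no readings)."""
    return uploaded / total if total else 0.0


readings = [
    Reading("temp-1", 21.5),
    Reading("temp-1", 22.0),
    Reading("temp-1", 85.3),  # made-up out-of-range spike
    Reading("temp-1", 21.8),
]
to_upload = filter_for_upload(readings, threshold=60.0)
print(len(to_upload), upload_ratio(len(readings), len(to_upload)))
```

In this toy run only one of four readings is forwarded. A real deployment would likely replace the fixed threshold with a model's decision, but the bandwidth saving comes from the same pattern: decide locally, transmit selectively.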

Privacy/Security

Sensitive data can be processed and stored on-device without ever being transmitted to the cloud.

- Voice, images, health readings stay local

- Reduces exposure to third-party breaches

- Face recognition without photo uploads

Compute Resources

Edge devices have limited processing power, so they run models that have been optimized for size and speed.

- Compact, quantized models

- Training still occurs in cloud

- Size-constrained but efficient

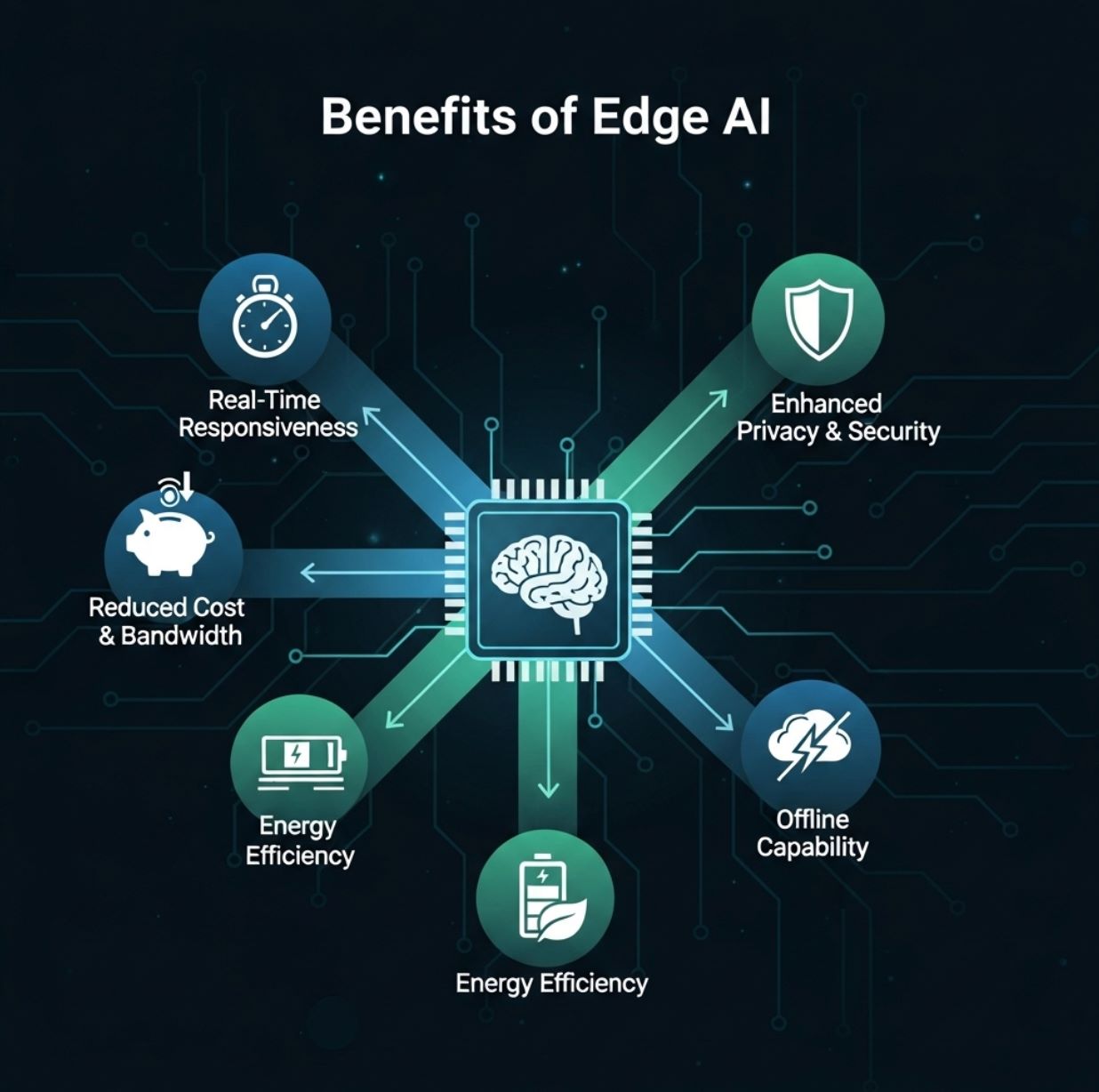

Benefits of Edge AI

Edge AI offers several practical advantages for users and organizations:

Real-time Responsiveness

- Live object detection

- Voice response systems

- Anomaly alerts

- Augmented reality applications

Reduced Bandwidth and Cost

- Security cameras upload only threat clips

- Reduced continuous streaming

- Lower cloud hosting expenses

Enhanced Privacy

- Critical for healthcare and finance

- Data stays within country/facility

- Compliance with privacy regulations

Energy and Cost Efficiency

- Lower power consumption

- Reduced server costs

- Optimized for mobile devices

Edge AI brings high-performance computing capabilities to the edge, enabling real-time analysis and improved efficiency.

— Red Hat & IBM Joint Report

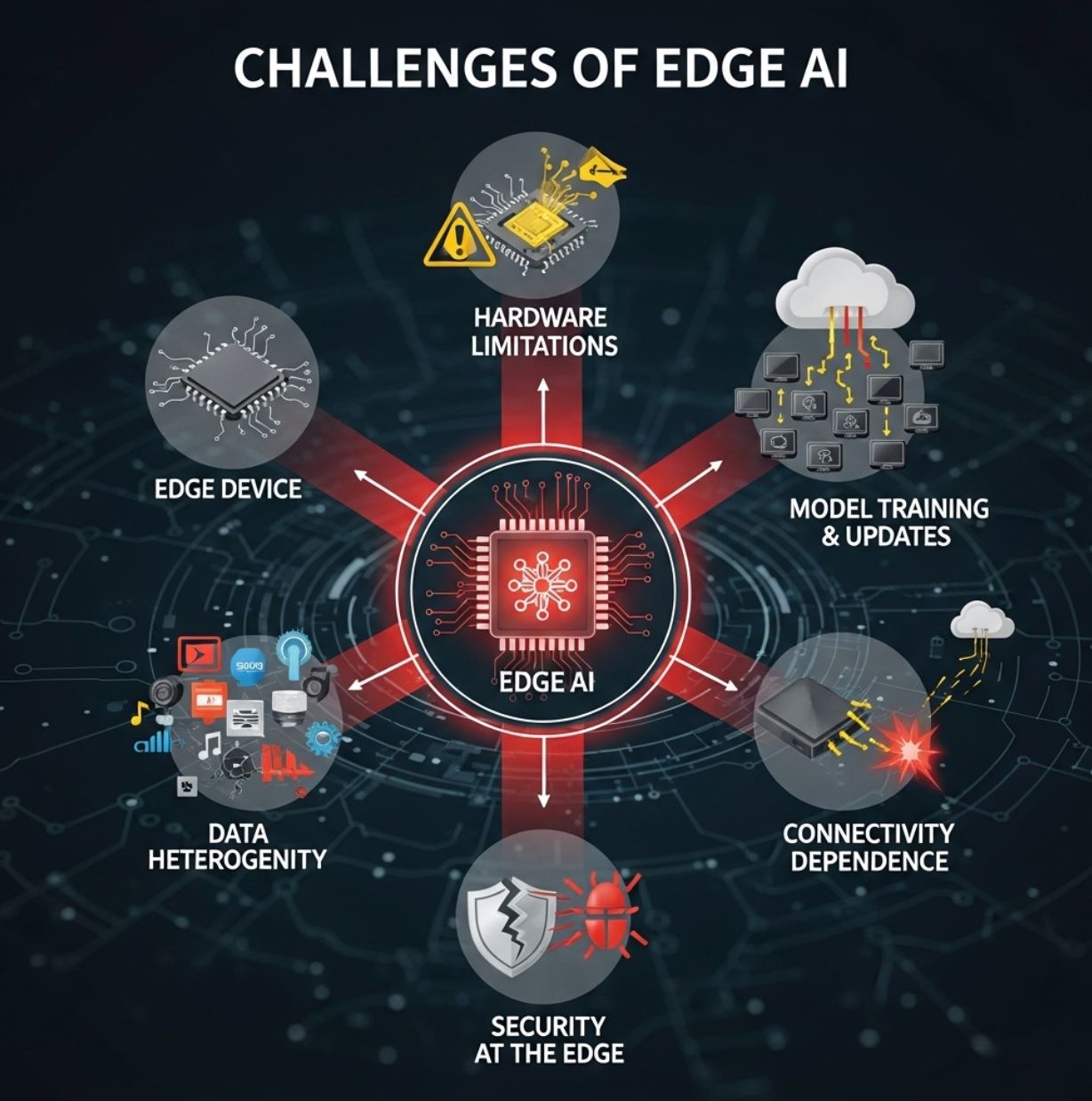

Challenges of Edge AI

Despite its advantages, Edge AI also faces significant hurdles:

Hardware Limitations

Edge devices are typically small and resource-constrained. They may only have modest CPUs or specialized low-power NPUs, and limited memory.

- Forces use of model compression and pruning

- TinyML techniques required for microcontrollers

- Complex models cannot run at full scale

- Some accuracy may be sacrificed
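
To make the pruning idea concrete, here is a minimal sketch of magnitude pruning, one common compression approach: the weights with the smallest absolute values are set to zero. The function name `magnitude_prune`, the `sparsity` parameter, and the sample weights are assumptions for illustration. Real frameworks prune whole tensors and usually fine-tune afterwards to recover accuracy.

```python
from typing import List


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the smallest-magnitude weights.

    sparsity is the fraction of weights to remove, between 0.0 and 1.0.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0.0 and 1.0")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
```

The zeroed entries can then be stored and computed sparsely, which is where the memory and speed savings come from. The accuracy cost depends on the model and on how aggressively it is pruned.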

Model Training and Updates

Training sophisticated AI models usually still happens in the cloud, where massive data and computing power are available.

- Models must be optimized and deployed to each device

- Keeping thousands of devices up-to-date is complex

- Firmware synchronization adds overhead

- Version control across distributed systems

Data Gravity and Heterogeneity

Edge environments are diverse. Different locations may collect different types of data, and policies may vary by region.

- Data tends to stay local

- Hard to gather a global view

- Devices come in all shapes and sizes

- Integration and standardization challenges

Security at the Edge

While Edge AI can improve privacy, it also introduces new security concerns. Each device or node is a potential target for hackers.

- Models must be tamper-resistant

- Firmware security requirements

- Distributed attack surface

- Strong safeguards needed
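
One simple safeguard is to check a model file's integrity before loading it. The sketch below uses an HMAC-SHA256 tag from Python's standard library. The key, the model bytes, and the function names are hypothetical. Many real deployments would prefer asymmetric signatures and hardware-backed key storage, so that a compromised device cannot forge tags.

```python
import hashlib
import hmac


def sign_model(model_bytes: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the model file contents."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()


def verify_model(model_bytes: bytes, key: bytes, expected_tag: str) -> bool:
    """Return True only if the model matches the tag issued at release time."""
    actual = sign_model(model_bytes, key)
    # compare_digest runs in constant time to avoid timing side channels.
    return hmac.compare_digest(actual, expected_tag)


key = b"hypothetical-device-key"  # placeholder; never hard-code real keys
model = b"\x00\x01fake-model-weights"  # stand-in for a real model file
tag = sign_model(model, key)

print(verify_model(model, key, tag))                # True
print(verify_model(model + b"tampered", key, tag))  # False
```

A device would run `verify_model` on every downloaded update and refuse to load anything that fails the check.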

Connectivity Dependencies

Although inference can be local, edge systems still often rely on cloud connectivity for heavy tasks.

- Retraining models requires cloud access

- Large-scale data analysis needs connectivity

- Aggregating distributed results

- Limited connectivity can bottleneck functions
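
A common way to cope with intermittent links is a store-and-forward buffer: results are queued locally and sent once the connection returns. The sketch below is one possible shape for that pattern. The `StoreAndForward` class, the `fake_send` callback, and the event payloads are invented for the example.

```python
from collections import deque
from typing import Callable, Deque, List


class StoreAndForward:
    """Buffer results locally and forward them when the uplink is available."""

    def __init__(self, send: Callable[[dict], bool], max_buffered: int = 1000):
        self._send = send
        # With maxlen set, the oldest item is dropped once the buffer is full.
        self._buffer: Deque[dict] = deque(maxlen=max_buffered)

    def submit(self, result: dict) -> None:
        self._buffer.append(result)
        self.flush()

    def flush(self) -> int:
        """Try to send buffered items in order; return how many were sent."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break  # still offline; keep the item for the next attempt
            self._buffer.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._buffer)


link = {"online": False}  # simulated connectivity state
delivered: List[dict] = []


def fake_send(item: dict) -> bool:
    """Hypothetical uplink: succeeds only while the simulated link is up."""
    if link["online"]:
        delivered.append(item)
    return link["online"]


queue = StoreAndForward(fake_send)
queue.submit({"event": "door_open"})
queue.submit({"event": "motion"})
pending_while_offline = queue.pending()

link["online"] = True
sent_after_reconnect = queue.flush()
print(pending_while_offline, sent_after_reconnect, queue.pending())
```

Local inference keeps working throughout. Only the upstream reporting is delayed until the link is restored.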

Use Cases of Edge AI

Edge AI is being applied across many industries with real-world impact:

Autonomous Vehicles

Self-driving cars use on-board Edge AI to instantly process camera and radar data for navigation and obstacle avoidance.

- Cannot afford the delay of sending video to a server

- Object detection happens locally

- Pedestrian recognition in real-time

- Lane tracking without connectivity

Manufacturing and Industry 4.0

Factories deploy smart cameras and sensors on production lines to detect defects or anomalies in real time.

Quality Control

Edge AI cameras spot faulty products on conveyor belts and trigger immediate action.

Predictive Maintenance

Industrial machines use on-site AI to predict equipment failures before breakdowns occur.
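
As a rough illustration of on-site anomaly detection, the sketch below flags sensor readings that deviate strongly from a rolling baseline (a z-score test). This is a deliberately simple stand-in for a trained model. The class name, window size, threshold, and vibration values are assumptions chosen for the example.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flag readings far from the recent average, measured in standard deviations."""

    def __init__(self, window: int = 20, z_threshold: float = 3.0):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold

    def update(self, value: float) -> bool:
        """Return True if value deviates strongly from recent history."""
        is_anomaly = False
        if len(self.history) >= 5:  # wait for a minimal baseline
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                is_anomaly = True
        if not is_anomaly:
            # Only normal readings extend the baseline.
            self.history.append(value)
        return is_anomaly


detector = RollingAnomalyDetector(window=10, z_threshold=3.0)
vibration = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 4.0, 1.0]  # made-up samples
flags = [detector.update(v) for v in vibration]
print(flags)  # only the 4.0 spike is flagged
```

Because the check needs only a small window of recent values, it fits comfortably on a gateway or microcontroller-class device and can raise an alert without a round trip to the cloud.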

Healthcare and Emergency Response

Portable medical devices and ambulances now use Edge AI to analyze patient data on the spot.

- Ambulance onboard ultrasound with AI analysis

- Vital-sign monitors detect abnormal readings

- Alert paramedics about internal injuries

- ICU patient monitoring with instant alarms

Smart Cities

Urban systems use edge AI for traffic management, surveillance, and environmental sensing.

Traffic Management

Surveillance

Environmental Monitoring

Retail and Consumer IoT

Edge AI enhances customer experience and convenience across retail and consumer applications.

In-Store Analytics

Smart cameras and shelf sensors track shopper behavior and inventory levels instantly.

Mobile Devices

Smartphones run voice and face recognition on-device, so features such as unlocking and gesture identification work without cloud access.

Fitness Tracking

Wearables analyze health data (heart rate, steps) locally to provide real-time feedback.

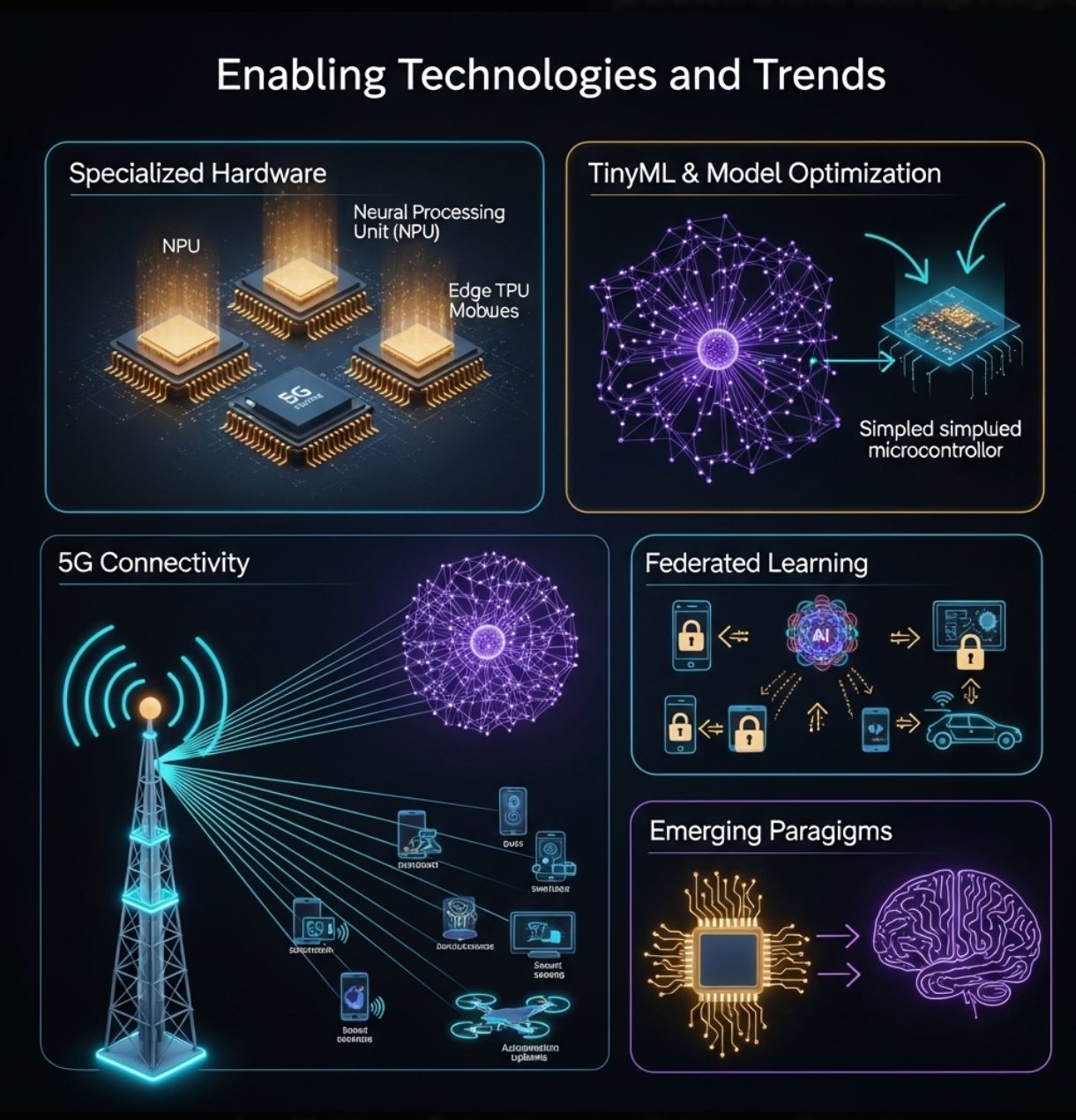

Enabling Technologies and Trends

The growth of Edge AI is fueled by advances in both hardware and software:

Specialized Hardware

Manufacturers are building chips designed specifically for edge inference.

- Low-power neural accelerators (NPUs)

- Google Coral Edge TPU

- NVIDIA Jetson Nano

- Arduino and Raspberry Pi with AI add-ons

TinyML and Model Optimization

Tools and techniques make it possible to shrink neural networks for tiny devices.

- TensorFlow Lite optimization

- Model pruning and quantization

- Knowledge distillation

- TinyML for microcontrollers
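
To show what quantization does to a model's weights, here is a minimal sketch of symmetric int8 quantization in plain Python. It is not how TensorFlow Lite implements it internally. The function names and sample weights are assumptions, and real toolchains add per-channel scales, zero points, and calibration data.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to the int8 range [-127, 127]."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float values."""
    return [x * scale for x in q]


weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q)  # [50, -127, 0, 100]
print(max_error <= scale / 2 + 1e-12)  # True
```

Each weight now fits in one byte instead of four, and the rounding error is bounded by half a quantization step. Note that the small weight 0.003 collapses to zero, which is the kind of precision loss that can cost some accuracy.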

5G and Connectivity

Next-generation wireless provides high-bandwidth, low-latency links that complement Edge AI.

- Fast local networks for device coordination

- Offload heavier tasks when needed

- Smart factories and V2X communication

- Enhanced edge device clusters

Federated Learning

Privacy-preserving methods allow multiple edge devices to jointly train models without sharing raw data.

- Local model improvement

- Only share model updates

- Distributed data utilization

- Enhanced privacy protection
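
The aggregation step can be sketched with federated averaging (FedAvg), a widely used scheme in which a coordinator combines client weights in proportion to each client's local sample count. The function name, the two-element weight vectors, and the sample counts below are illustrative assumptions. Production systems typically add secure aggregation, client sampling, and multiple training rounds.

```python
from typing import List, Sequence, Tuple


def federated_average(updates: Sequence[Tuple[List[float], int]]) -> List[float]:
    """Weighted average of client weights, weighted by local sample counts.

    Each update is a (weights, num_samples) pair; raw data is never shared.
    """
    total = sum(n for _, n in updates)
    if total == 0:
        raise ValueError("no samples reported")
    dim = len(updates[0][0])
    avg = [0.0] * dim
    for weights, n in updates:
        for i, w in enumerate(weights):
            avg[i] += w * n / total
    return avg


client_updates = [
    ([1.0, 2.0], 100),  # hypothetical device A: 100 local samples
    ([3.0, 4.0], 300),  # hypothetical device B: 300 local samples
]
global_weights = federated_average(client_updates)
print(global_weights)  # [2.5, 3.5]
```

Device B contributes three times as many samples, so the result sits closer to its weights. Only these weight vectors travel over the network, while the underlying data stays on each device.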

These technologies continue to push the boundary of what Edge AI can do. Together, they help deliver the "AI inference era" – shifting intelligence closer to users and sensors.

Conclusion

Edge AI is transforming how we use artificial intelligence by moving computation to the data source. It complements cloud AI, delivering faster, more efficient, and more private analytics on local devices.

This approach addresses real-time and bandwidth challenges inherent in cloud-centric architectures. In practice, Edge AI powers a wide range of modern technologies – from smart sensors and factories to drones and self-driving cars – by enabling on-the-spot intelligence.

As IoT devices proliferate and networks improve, Edge AI is set to keep growing. Advances in hardware (more powerful, low-power chips) and in techniques (TinyML, federated learning, model optimization) are making it easier to put AI everywhere.
