What is a Neural Network?

A neural network (more precisely, an artificial neural network) is a computational model inspired by the way the human brain works. It is widely used in Artificial Intelligence (AI) and Machine Learning.

A neural network is a method used in artificial intelligence (AI) to teach computers to process data in a way loosely modeled on the human brain. Specifically, it is a machine learning technique and the basis of deep learning: it uses interconnected nodes (analogous to neurons) arranged in layers, resembling the networks of neurons in the brain.

Such a system is adaptive: the computer can learn from its mistakes and improve its accuracy over time. The term "artificial neural network" comes from the network's structure, which simulates how neurons in the brain transmit signals to one another.

Today, artificial neural networks have exploded in popularity and become a core tool in many industries as well as advanced AI systems. They are the backbone of modern deep learning algorithms – most recent breakthroughs in AI bear the hallmark of deep neural networks.

Structure and Operating Mechanism of Neural Networks

Artificial neural networks are modeled on the biological brain. The human brain contains billions of neurons connected in complex ways, transmitting electrical signals to process information. Similarly, an artificial neural network consists of many artificial neurons (software units) connected so that they work together on a specific task.

Each artificial neuron is essentially a mathematical function that receives input signals, processes them, and generates output signals passed to the next neuron. The connections between these neurons simulate synapses in the human brain.
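To make the idea of a neuron as a mathematical function concrete, here is a minimal sketch in plain Python. It assumes one common formulation: a weighted sum of the inputs plus a bias, passed through a sigmoid activation. The function names and the input, weight, and bias values are hypothetical and chosen only for illustration.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of the inputs plus a bias,
    followed by an activation function (sigmoid is assumed here)."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Hypothetical values: the weighted sum is 0.8 - 0.2 - 0.6, roughly 0,
# so the output lands near the midpoint of the sigmoid.
output = neuron(inputs=[1.0, 0.5], weights=[0.8, -0.4], bias=-0.6)
print(output)
```

The output of one neuron would then serve as an input to neurons in the next layer.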

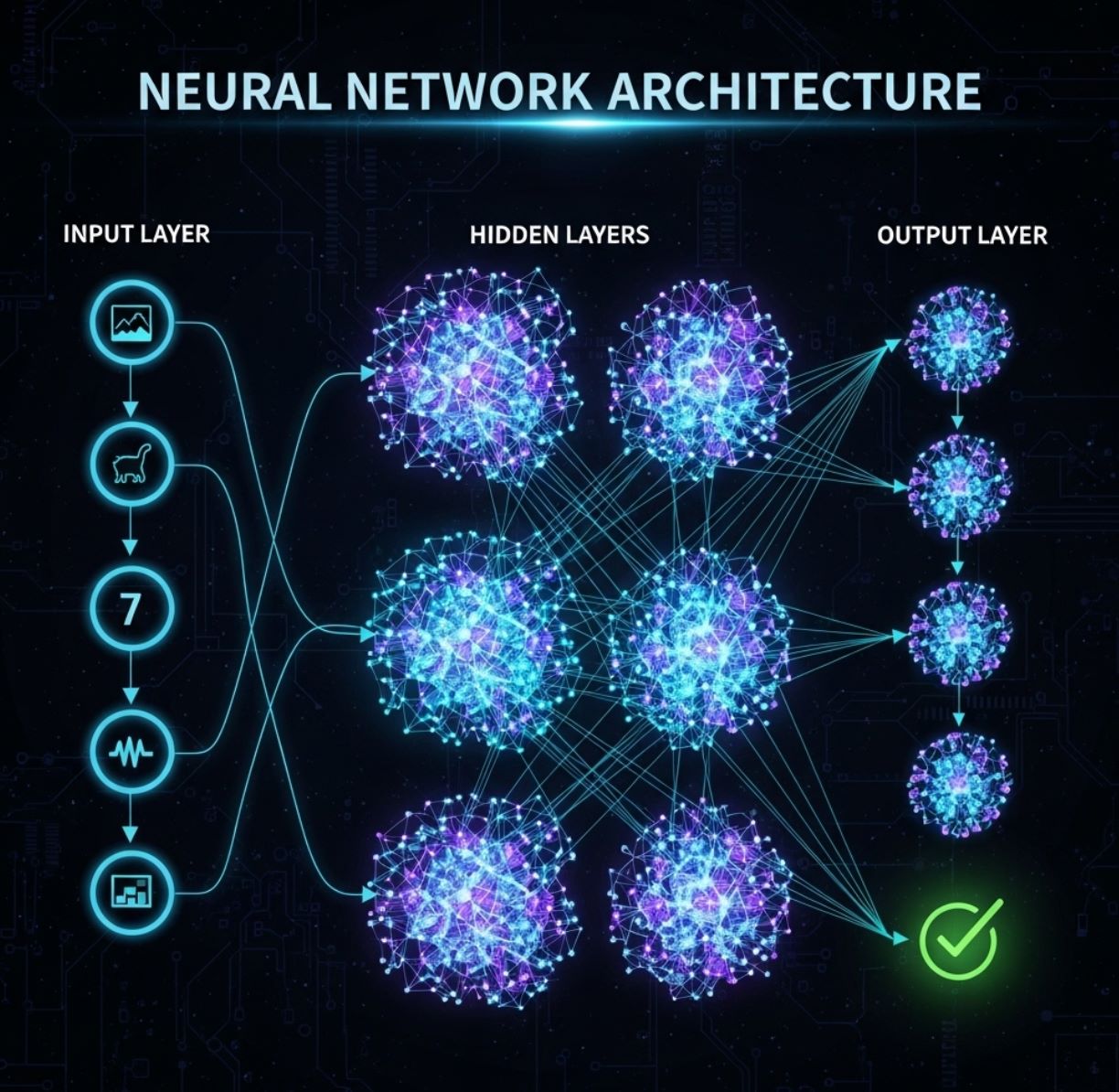


Input Layer

Hidden Layers

Output Layer

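Each connection carries a weight, and a neuron passes its signal on only when the weighted sum of its inputs is strong enough. Thanks to this mechanism, important signals (those with high weights) are propagated through the network, while noise and weak signals are suppressed.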
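The way signals travel from the input layer through a hidden layer to the output layer can be sketched as follows. This is an illustration under stated assumptions, not a reference implementation: it uses the ReLU activation (which outputs zero for any negative weighted sum) on every layer, and the layer sizes and weights are hypothetical.

```python
def relu(z):
    """Pass positive signals through unchanged; suppress negative ones to 0."""
    return max(0.0, z)

def dense_layer(inputs, weights, biases):
    """Compute one layer. weights[j] holds the incoming weights of neuron j."""
    return [
        relu(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Feed the inputs through each layer in order and return the final output."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations

# Hypothetical network: 2 inputs -> 2 hidden neurons -> 1 output neuron.
layers = [
    ([[1.0, 1.0], [-1.0, -1.0]], [0.0, 0.0]),  # hidden layer
    ([[1.0, 1.0]], [0.0]),                     # output layer
]
hidden = dense_layer([2.0, 3.0], *layers[0])
result = forward([2.0, 3.0], layers)
print(hidden)  # [5.0, 0.0]: the second hidden neuron's signal is suppressed
print(result)  # [5.0]
```

Here the first hidden neuron receives a strong positive signal and forwards it, while the second receives a negative weighted sum and contributes nothing to the output.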

Simple Neural Networks

- Few hidden layers (1-2)

- Limited parameters

- Basic pattern recognition

- Faster training time

Deep Neural Networks

- Multiple hidden layers (3+)

- Millions of parameters

- Complex nonlinear relationships

- Requires large datasets

When a neural network has multiple hidden layers (usually more than two), it is called a deep neural network. Deep neural networks are the foundation of current deep learning techniques. These networks can have millions of parameters (weights) and can learn extremely complex nonlinear relationships between inputs and outputs.
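To see how the parameter count grows with depth and width, the following sketch counts the weights and biases of a fully connected network. The layer sizes are hypothetical (784 inputs would correspond to, say, a 28x28 pixel image), and real architectures may count parameters differently.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of neurons per layer, input layer first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per connection between the layers
        total += n_out         # one bias per neuron in the receiving layer
    return total

# Hypothetical simple network: one small hidden layer.
shallow = count_parameters([784, 32, 10])
# Hypothetical deep network: three wide hidden layers.
deep = count_parameters([784, 1024, 1024, 1024, 10])

print(shallow)  # 25450
print(deep)     # 2913290
```

Under these assumptions the deep network has roughly a hundred times as many parameters as the simple one, which is one reason it typically needs more data and longer training.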

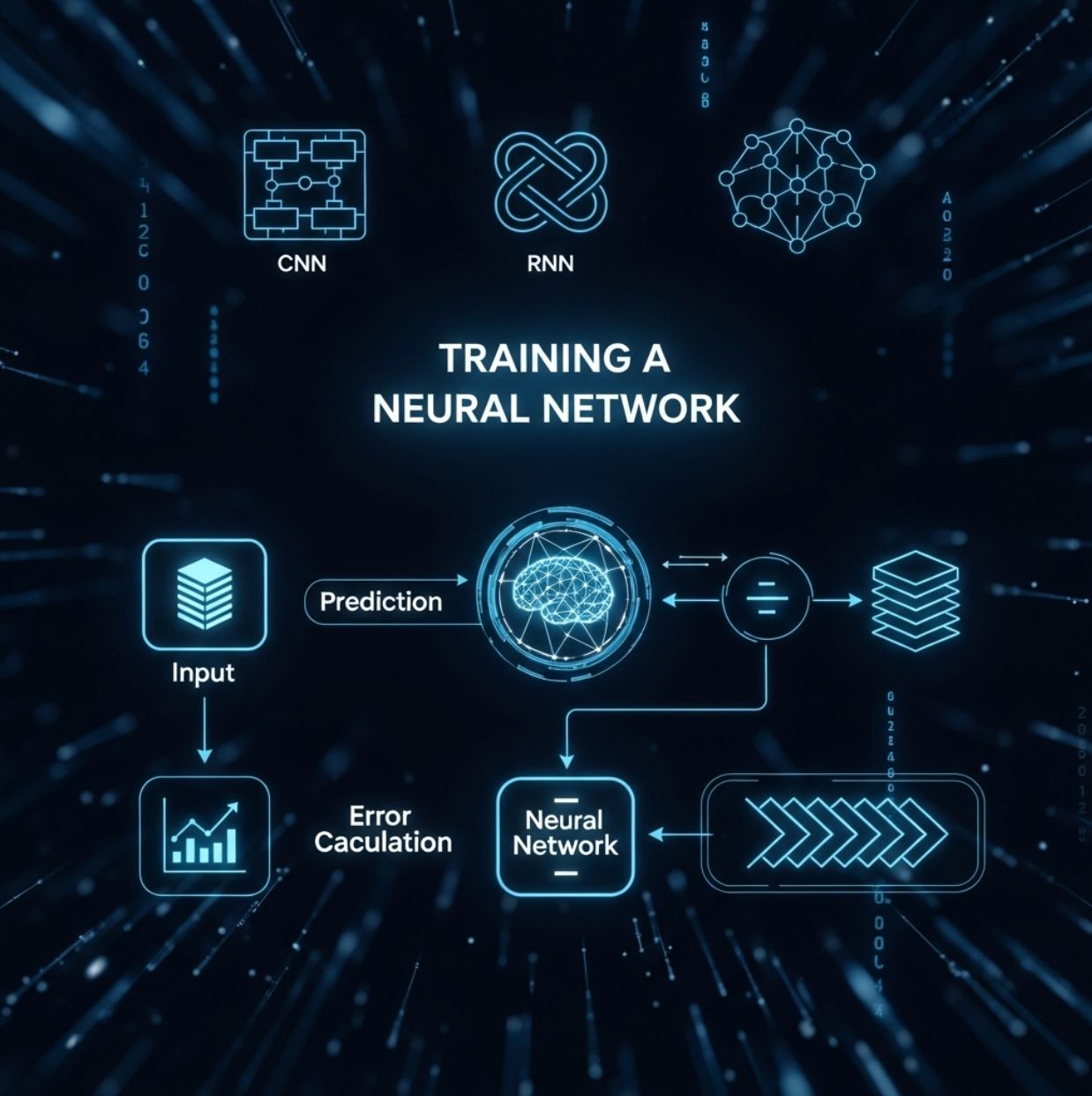

Training Process of Artificial Neural Networks

A neural network is not a rigid system programmed with fixed rules. Instead, it learns how to solve tasks from examples in data. The process of "teaching" a neural network is called training.

Data Input

During training, the network is provided with a large amount of input data and (usually) corresponding desired output information so it can adjust its internal parameters.

Prediction & Comparison

The network measures the difference between its predictions and the expected results. This error is the signal it uses to adjust its internal weights (parameters) and improve its performance.

Weight Adjustment

After each prediction, the network compares the prediction with the correct answer and adjusts the connection weights to improve accuracy for the next prediction.

Based on this error, the network updates its weights, strengthening connections that led to correct predictions and weakening those that led to errors. This process repeats thousands or millions of times until the network converges, meaning the prediction error falls within an acceptable range.
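The predict, compare, and adjust cycle described above can be sketched with the smallest possible model: a single linear neuron trained by gradient descent on squared error. This is a deliberately simplified illustration. Multi-layer networks use backpropagation to compute the same kind of update for every weight, and the data, learning rate, and epoch count here are hypothetical.

```python
def train(examples, learning_rate=0.02, epochs=1000):
    """Fit prediction = w * x + b to (x, target) pairs, one example at a time."""
    w, b = 0.0, 0.0  # start with no knowledge
    for _ in range(epochs):
        for x, target in examples:
            prediction = w * x + b       # 1. predict
            error = prediction - target  # 2. compare with the correct answer
            # 3. adjust: step each parameter against the gradient
            #    of the squared error (error ** 2)
            w -= learning_rate * 2 * error * x
            b -= learning_rate * 2 * error
    return w, b

# Hypothetical labeled data generated from target = 2 * x + 1.
examples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0), (4.0, 9.0)]
w, b = train(examples)
print(round(w, 3), round(b, 3))  # close to 2.0 and 1.0
```

After training, the model can be applied to an input it never saw, such as x = 10, which is the generalization that training aims for.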

Supervised Learning

Training with labeled data

- Clear input-output pairs

- Direct error calculation

Unsupervised Learning

Training with unlabeled data

- Pattern discovery

- Feature extraction

Reinforcement Learning

Training with rewards/punishments

- Trial and error approach

- Optimal strategy learning

After training, the neural network can generalize knowledge: it not only "memorizes" the training data but can also apply what it has learned to predict new, unseen data. Training can be supervised (with labeled data), unsupervised (with unlabeled data), or reinforcement learning (with rewards/punishments), depending on the specific task.

The goal is for the network to learn the hidden patterns in the data. Once well trained, artificial neural networks become powerful tools for fast and accurate classification, recognition, and prediction. Google Search, for example, relies on large-scale neural networks as part of its ranking systems.


Feedforward Networks

The simplest form, transmitting signals one-way from input to output. Information flows in a single direction without loops or cycles.

Recurrent Neural Networks (RNN)

Suitable for sequential data like text or audio. These networks have memory capabilities and can process sequences of varying lengths.
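The "memory" of a recurrent network comes from a hidden state that is carried from one step of the sequence to the next. The sketch below assumes the simplest possible case, a single recurrent unit with scalar weights and a tanh activation. The weight values are hypothetical, and practical RNNs use vectors and matrices instead of scalars.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """Combine the current input with the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Process a sequence of any length, returning the hidden state at each step."""
    h = 0.0  # initial memory is empty
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

short = run_rnn([1.0, 0.0])
longer = run_rnn([1.0, 0.0, 0.0, 0.0])
print(short)
print(longer)
```

In both runs the state stays above zero after the input drops to 0.0, because the first input is still remembered through the hidden state. The same function also handles sequences of different lengths without any change.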

Convolutional Neural Networks (CNN)

Specialized in processing image/video data. They use convolutional layers to detect local features and patterns in visual data.
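How a convolutional layer detects a local feature can be shown with a small filter sliding over an image. The sketch below implements the sliding-window operation without padding or stride (technically a cross-correlation, which is what deep learning libraries usually call convolution). The image and the vertical-edge filter are hypothetical. In a real CNN the filter values are learned during training.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = len(image) - k_h + 1
    out_w = len(image[0]) - k_w + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            row.append(sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(k_h)
                for dj in range(k_w)
            ))
        output.append(row)
    return output

# Hypothetical 3x4 image: dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical filter that responds where brightness increases left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0]]
```

The feature map is nonzero only in the middle column, exactly where the dark region meets the bright one.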

Autoencoders

Often used for data compression and feature learning. They learn to encode input data into a compressed representation and then decode it back.

Many neural network architectures have been developed to suit different data types and tasks. Each differs somewhat in structure and operation, but all follow the same general principle of neural networks: many interconnected neurons learning from data.

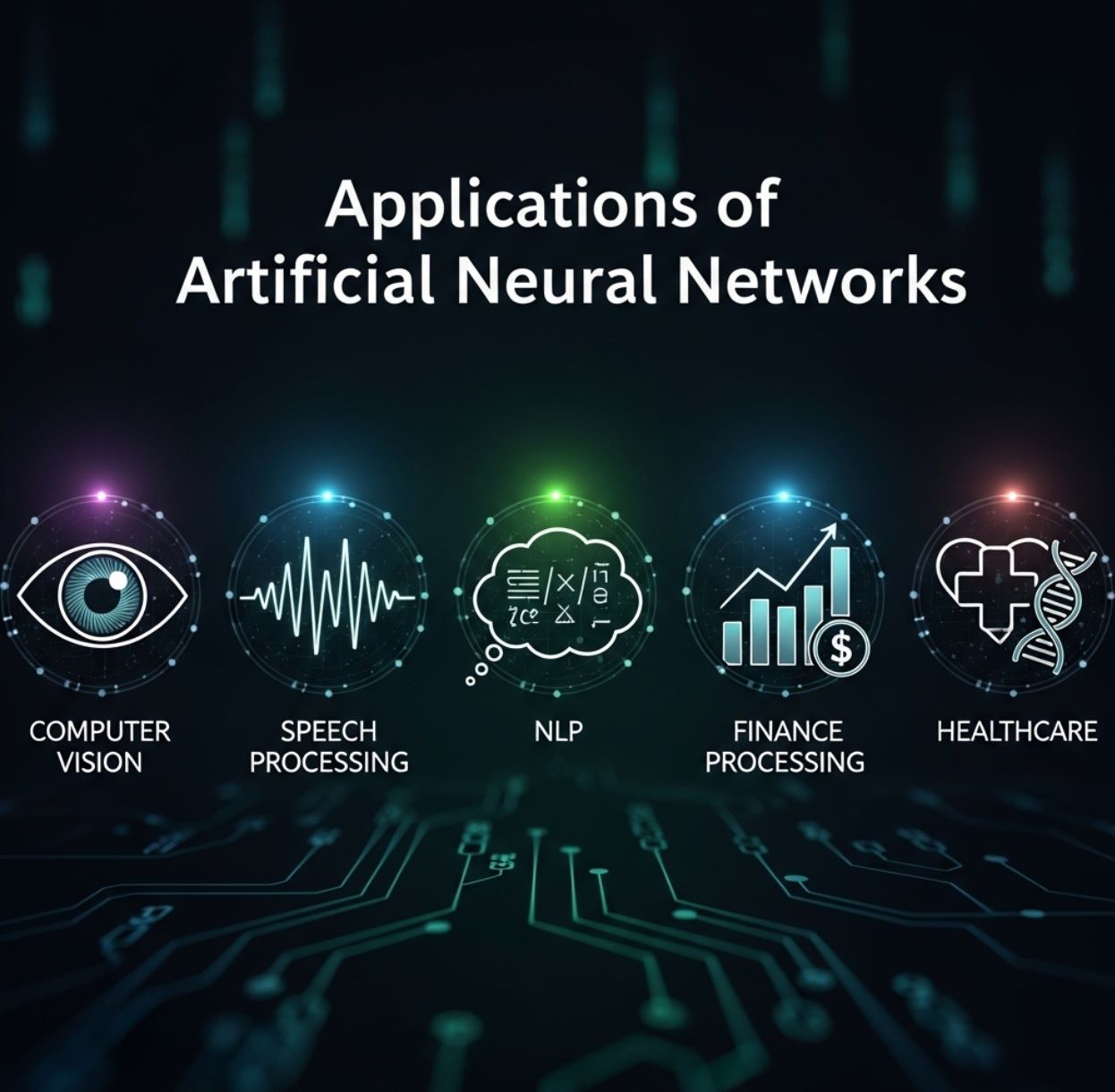

Practical Applications of Artificial Neural Networks

Thanks to their ability to learn and model complex patterns, artificial neural networks have been widely applied across many different fields. Below are some typical applications of neural networks in practice:

Computer Vision

Neural networks help computers "see" and understand image and video content similarly to humans. For example, in self-driving cars, neural networks are used to recognize traffic signs, pedestrians, vehicles, and more from camera images.

CNN models enable computers to automatically classify objects in images (face recognition, distinguishing cats from dogs, etc.) with increasing accuracy.

Speech Processing

Virtual assistants such as Amazon Alexa, Google Assistant, and Siri rely on neural networks to recognize speech and understand human language. This technology makes it possible to convert speech to text, trigger voice commands, and even mimic voices.

Thanks to neural networks, computers can analyze audio features (tone, intonation) and comprehend content across many regional accents and languages.

Natural Language Processing (NLP)

In the field of language, neural networks are used to analyze and generate natural language. Applications such as machine translation, chatbots, automated question-answering systems, or sentiment analysis on social media use neural network models (often RNNs or modern Transformer architectures) to understand and respond to human language.

Neural networks enable computers to learn grammar, semantics, and context for more natural communication.

Finance and Business

In finance, neural networks are applied to forecast market fluctuations such as stock prices, currency exchange rates, and interest rates, based on vast amounts of historical data. By recognizing patterns in past data, neural networks can support predicting future trends and detecting fraud (e.g., identifying unusual credit card transactions).

Many banks and insurance companies also use neural networks to assess risks and make decisions (such as loan approvals, portfolio management) more effectively.

Healthcare

In medicine, neural networks assist doctors in diagnosis and treatment decisions. A typical example is using CNNs to analyze medical images (X-rays, MRIs, cell images) to detect early signs of disease that may be missed by the naked eye.

Additionally, neural networks are used to predict disease outbreaks, analyze gene sequences, or personalize treatment plans for patients based on large genetic and medical record data. Neural networks help improve accuracy and speed in diagnosis, contributing to better healthcare quality.

Future Outlook and Conclusion

From analyzing images and audio to understanding language and forecasting trends, neural networks have opened up new possibilities never seen before. In the future, with the growth of big data and computing power, artificial neural networks promise to continue evolving and delivering more breakthrough applications, helping shape the next generation of intelligent technology.
