What is Natural Language Processing?

Natural Language Processing (NLP) is a field of artificial intelligence (AI) focused on enabling computers to understand and interact with human language. Simply put, NLP uses mainly machine learning methods to give computers the ability to interpret, respond to, and generate the natural language we use every day.

This is considered one of the most complex challenges in AI: language is a sophisticated, uniquely human tool for expressing thoughts and communicating, so machines must "understand" the meanings hidden behind sentences.

Natural language here refers to human languages such as Vietnamese, English, and Chinese, as opposed to computer languages. The goal of NLP is to program computers to automatically process and understand these languages, and even to generate sentences similar to those written by humans.

Why is natural language processing important?

In the digital age, the volume of language data (text, audio, conversations) has grown enormously from many sources such as emails, messages, social networks, videos, etc. Unlike structured data (numbers, tables), language data in text or audio form is unstructured data – very difficult to process automatically without NLP.

Natural language processing technology helps computers analyze this unstructured data effectively, understand intent, context, and emotions in human words. Thanks to this, NLP becomes the key for machines to communicate and serve humans more intelligently.

Natural Interaction

Enables natural communication between humans and computers without learning complex commands.

Time & Cost Savings

Automates complex language-related tasks, reducing manual effort and operational costs.

Enhanced Experience

Personalizes services and improves user experience across various applications.

Natural Language Processing is important because it enables natural interaction between humans and computers. Instead of learning computer languages, we can give commands or ask questions in our native language. NLP automates many complex language-related tasks, thereby saving time and costs, while enhancing user experience across almost every field.

For example, businesses can use NLP to automatically analyze thousands of customer comments on social media and extract valuable insights, while NLP-powered chatbots can respond to customers consistently, 24/7.

Proper application of NLP helps companies optimize processes, increase productivity, and even personalize services for each user.

Clearly, natural language processing has become a core technology driving many smart applications around us, helping machines "understand language" better than ever before.

Common applications of NLP

Thanks to its ability to "understand" language, NLP is widely applied across various fields. Below are some key applications of natural language processing:

Virtual Assistants & Chatbots

NLP enables the creation of virtual assistants like Siri, Alexa, or chatbots on websites, Facebook Messenger, etc., that can understand user questions and respond automatically.

- Answer frequently asked questions

- Assist with scheduling and shopping

- Resolve customer issues 24/7

Sentiment & Opinion Analysis

Companies use NLP to analyze customer feedback on social media, surveys, or product reviews.

- Detect sentiment (positive/negative)

- Identify attitudes and sarcasm

- Understand customer opinions and market trends

Machine Translation

Machine translation is a classic NLP application. Translation software (like Google Translate) uses NLP to convert text or speech from one language to another while preserving meaning and context.

Speech Processing

- Speech recognition: Converts spoken language into text

- Text-to-speech: Creates natural-sounding voices

- Voice-controlled systems in cars and smart homes

Classification & Information Extraction

NLP can automatically classify texts by topic and extract important information:

- Spam vs. non-spam email filtering

- News categorization

- Medical records data extraction

- Legal document filtering

Automated Content Generation

Modern language models (such as GPT-3, GPT-4) can generate natural language – creating human-like text:

- Write articles and compose emails

- Create poetry and write code

- Support content creation

- Automatic customer service responses

Overall, any task involving natural language (text or speech) can use NLP to automate work or improve efficiency. From information retrieval, question answering, and document analysis to educational support (e.g., automatic essay grading, virtual tutoring), natural language processing plays a crucial role.

How does NLP work?

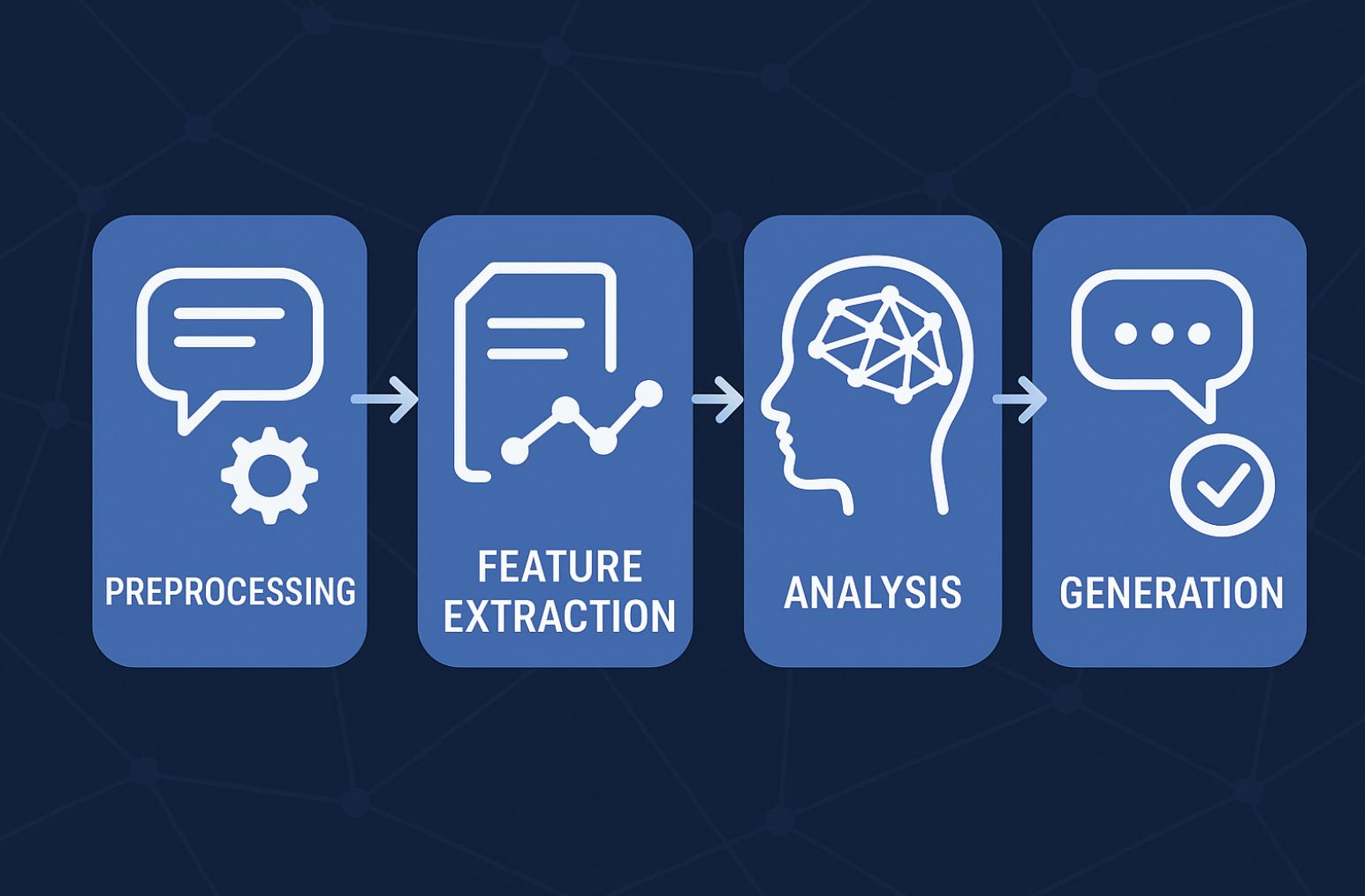

To enable computers to understand human language, NLP combines various techniques from computer science and linguistics. Essentially, an NLP system goes through the following main steps when processing language:

Preprocessing

First, text or speech is converted into a form the computer can work with. For text, NLP splits the input into sentences, tokenizes it, lowercases it, and removes punctuation and stop words (words like "the" and "is" that carry little meaning).

Then, stemming/lemmatization may be applied – reducing words to their root form (e.g., "running" to "run"). For speech, the initial step is speech recognition to obtain text. The result of preprocessing is cleaned and normalized language data ready for machine learning.
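The preprocessing steps above can be sketched in a few lines of Python. This is a minimal illustration that uses only the standard library; the stop-word list and the `naive_stem` helper are simplified assumptions made for this example, not a real stemmer such as Porter's.

```python
import re

# Tiny illustrative stop-word list (an assumption; real lists are much longer).
STOP_WORDS = {"the", "is", "a", "an", "and", "of", "to", "in", "are"}

def naive_stem(word: str) -> str:
    """Strip a few common English suffixes; a crude stand-in for a real stemmer."""
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            stem = word[: -len(suffix)]
            # Undo consonant doubling, e.g. "runn" -> "run".
            if len(stem) >= 2 and stem[-1] == stem[-2] and stem[-1] not in "aeioulsz":
                stem = stem[:-1]
            return stem
    return word

def preprocess(text: str) -> list[list[str]]:
    """Return one list of cleaned tokens per sentence."""
    # Sentence splitting: break after ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    result = []
    for sentence in sentences:
        # Lowercase, then keep only word-like runs (this drops punctuation).
        tokens = re.findall(r"[a-z0-9']+", sentence.lower())
        # Remove stop words and reduce the remaining words to a root form.
        tokens = [naive_stem(t) for t in tokens if t not in STOP_WORDS]
        if tokens:
            result.append(tokens)
    return result

print(preprocess("The dog is running. Cats jumped over the fences!"))
# [['dog', 'run'], ['cat', 'jump', 'over', 'fence']]
```

A suffix-stripping stemmer like this one makes mistakes on many words, which is why production systems usually rely on an established stemmer or a dictionary-based lemmatizer.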

Feature Extraction

Computers do not directly understand words, so NLP must represent language as numbers. This step converts text into numerical features or vectors.

Common techniques include Bag of Words, TF-IDF (term frequency-inverse document frequency), or more advanced word embeddings (like Word2Vec, GloVe) – assigning each word a vector representing its meaning. These vectors help algorithms understand semantic relationships between words (e.g., "king" is closer to "queen" than to "car" in vector space).
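As a rough sketch of how text becomes numbers, the code below builds Bag of Words counts and TF-IDF vectors for three tiny documents. It assumes the plain, unsmoothed formula tf × log(N / df); libraries typically use smoothed variants, so their numbers will differ. The function names and sample documents are made up for illustration.

```python
import math
from collections import Counter

def bag_of_words(tokens: list[str]) -> Counter:
    """Bag of Words: how often each word occurs, ignoring word order."""
    return Counter(tokens)

def tf_idf(docs: list[list[str]]) -> list[dict[str, float]]:
    """One sparse TF-IDF vector (word -> weight) per tokenized document."""
    n_docs = len(docs)
    # Document frequency: in how many documents each word appears.
    df = Counter(word for doc in docs for word in set(doc))
    vectors = []
    for doc in docs:
        counts = bag_of_words(doc)
        vectors.append({
            word: (count / len(doc)) * math.log(n_docs / df[word])
            for word, count in counts.items()
        })
    return vectors

def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(weight * v.get(word, 0.0) for word, weight in u.items())
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

docs = [
    ["cat", "sat", "mat"],
    ["dog", "sat", "log"],
    ["cat", "chased", "dog"],
]
vectors = tf_idf(docs)
print(vectors[0])                       # "mat" gets the largest weight in doc 0
print(cosine(vectors[0], vectors[1]))   # small but positive: they share "sat"
```

Words that appear in fewer documents receive higher weights, and a word that appears in every document gets a weight of zero under this formula. Word embeddings such as Word2Vec or GloVe go further by learning dense vectors from large corpora, which this sketch does not attempt.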

Context Analysis & Understanding

Once numerical data is available, the system uses machine learning models and algorithms to analyze syntax and semantics.

For example, syntactic analysis identifies the role of words in a sentence (which is the subject, verb, object, etc.), while semantic analysis helps understand the meaning of the sentence in context. Modern NLP uses deep learning models to perform these tasks, enabling computers to gradually comprehend sentence meaning almost like humans.

Language Generation or Action

Depending on the purpose, the final step may be to produce results for the user. For example, for a question, the NLP system will find an appropriate answer from data and respond (in text or speech). For a command, NLP will trigger an action on the machine (e.g., play music when hearing "Play music").

In machine translation, this step generates the translated sentence in the target language. For chatbots, this is when natural responses are generated based on understanding from previous steps.

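Real systems often blend or skip some of these steps, and modern deep learning models learn several of them jointly. Still, this breakdown helps us visualize how NLP transforms human language into a form computers can understand and respond to appropriately.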

Approaches in NLP

Throughout its development history, Natural Language Processing has gone through several generations of different approaches. From the 1950s to today, we can identify three main approaches in NLP:

Rule-based NLP (1950s-1980s)

This was the first approach. Programmers wrote sets of language rules in if-then format for machines to process sentences.

- Pre-programmed sentence patterns

- No machine learning involved

- Rigid rule-based responses

- Very limited understanding

- No self-learning capability

- Difficult to scale

- Requires linguistic experts
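A minimal sketch of the if-then style described above, assuming a hypothetical three-rule chatbot. The patterns and replies are invented for illustration and are not taken from any historical system.

```python
import re

# Hypothetical hand-written rules: (pattern, response template).
# Rules are tried in order, and the first match wins.
RULES = [
    (re.compile(r"\b(hello|hi)\b", re.I), "Hello! How can I help you?"),
    (re.compile(r"\bmy name is (\w+)", re.I), "Nice to meet you, {0}."),
    (re.compile(r"\bweather in (\w+)", re.I), "I cannot check the weather in {0} yet."),
]
FALLBACK = "Sorry, I do not understand."

def respond(sentence: str) -> str:
    """Return the reply of the first rule whose pattern matches the sentence."""
    for pattern, template in RULES:
        match = pattern.search(sentence)
        if match:
            # Captured groups fill the {0} placeholder when the template has one.
            return template.format(*match.groups())
    return FALLBACK

print(respond("My name is Lan"))   # Nice to meet you, Lan.
print(respond("Greetings!"))       # Sorry, I do not understand.
```

The second call shows the rigidity listed above: "Greetings!" means the same as "Hello", but no rule covers it, so someone has to write a new rule by hand.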

Statistical NLP (1990s-2000s)

Starting from the 1990s, NLP shifted to statistical machine learning. Instead of manually writing rules, algorithms were used to let machines learn language models from data.

Probability-based

Calculates probabilities to select appropriate word meanings based on context

Practical Applications

Enabled spell checking and word suggestion systems like T9 on old phones

This approach allows more flexible and accurate natural language processing, as machines can calculate probabilities to select the appropriate meaning of a word/sentence based on context.
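To make the probability-based idea concrete, here is a small sketch of a bigram model that suggests the next word from counts observed in data. The three-sentence corpus is a made-up toy, and the function names are assumptions for this example; real systems train on far larger corpora and smooth their estimates.

```python
from collections import Counter, defaultdict

def train_bigrams(sentences: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each other word."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def suggest(counts: dict[str, Counter], prev_word: str, k: int = 3) -> list[tuple[str, float]]:
    """Return up to k likely next words with their estimated probabilities."""
    followers = counts.get(prev_word.lower())
    if not followers:
        return []  # word never seen in training data
    total = sum(followers.values())
    return [(word, count / total) for word, count in followers.most_common(k)]

corpus = [
    "i love natural language processing",
    "i love machine learning",
    "i like natural language",
]
model = train_bigrams(corpus)
print(suggest(model, "i"))        # "love" ranks first, with probability 2/3
print(suggest(model, "natural"))  # [('language', 1.0)]
```

Nothing here is a hand-written rule: changing the training sentences changes the suggestions, which is the key difference from the rule-based approach.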

Deep Learning NLP (2010s-Present)

Since the 2010s, deep learning with neural network models has become the dominant method in NLP. Thanks to the massive amount of text data on the Internet and increased computing power, deep learning models can automatically learn highly abstract language representations.

Transformer Model

Major breakthrough with self-attention mechanism for better context understanding

BERT

Google's model significantly improved search quality

GPT Series

GPT-2, GPT-3, GPT-4 enabled fluent text generation
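The self-attention mechanism mentioned under the Transformer model can be sketched with plain Python lists. This is a simplified illustration of scaled dot-product attention that assumes the token vectors serve directly as queries, keys, and values; real Transformers apply learned projection matrices and use multiple attention heads, which are omitted here. The input vectors are arbitrary toy values.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors: list[list[float]]):
    """Scaled dot-product self-attention without learned projections."""
    dim = len(vectors[0])
    outputs, weights = [], []
    for query in vectors:
        # Similarity of this token to every token, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        attn = softmax(scores)
        weights.append(attn)
        # New representation: attention-weighted average of all token vectors.
        outputs.append([
            sum(a * value[i] for a, value in zip(attn, vectors))
            for i in range(dim)
        ])
    return outputs, weights

tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]  # toy 2-dimensional "embeddings"
outputs, weights = self_attention(tokens)
print(weights[0])  # the first token attends more to token 1 than to token 2
```

Each output vector mixes information from every position in the sequence, which is how the mechanism lets a word's representation depend on its context.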

Modern Trends: Foundation Models

A modern trend is the use of foundation models: large AI models pre-trained on billions of words. These models (e.g., OpenAI's GPT-4 or IBM's Granite) can be quickly fine-tuned for various NLP tasks, from text summarization to specialized information extraction.

Time Efficient

Saves training time with pre-trained models

High Performance

Achieves superior results across tasks

Enhanced Accuracy

Retrieval-augmented generation improves answer precision

This shows that NLP continues to evolve rapidly, with new techniques emerging regularly.

Challenges and new trends in NLP

Current Challenges

Despite many achievements, natural language processing still faces significant challenges. Human language is extremely rich and diverse: the same sentence can have multiple meanings depending on context, not to mention slang, idioms, wordplay, and sarcasm. Helping machines correctly understand human intent in all cases is not easy.

Context & Reasoning

To answer user questions accurately, NLP systems must have fairly broad background knowledge and some reasoning ability, not just understand isolated words.

Multilingual Complexity

Each language has unique characteristics:

- Vietnamese uses a Latin-based alphabet like English, but differs in its tone-marking diacritics and its structure

- Japanese and Chinese are written without spaces between words

- Regional dialects and cultural nuances
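To illustrate why missing word boundaries matter, here is a sketch of forward maximum matching, one simple dictionary-based way to segment Chinese text. The small vocabulary is a hypothetical example, and this greedy method is shown only for illustration; modern segmenters usually rely on statistical or neural models.

```python
def max_match(text: str, vocabulary: set[str], max_len: int = 4) -> list[str]:
    """Greedy segmentation: at each position, take the longest known word."""
    words = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, then shrink one character at a time.
        for size in range(min(max_len, len(text) - i), 0, -1):
            candidate = text[i:i + size]
            # A single character is always accepted so the loop makes progress.
            if size == 1 or candidate in vocabulary:
                words.append(candidate)
                i += size
                break
    return words

# Hypothetical mini-dictionary for this example.
VOCABULARY = {"我", "喜欢", "自然", "语言", "自然语言", "处理"}

print(max_match("我喜欢自然语言处理", VOCABULARY))
# ['我', '喜欢', '自然语言', '处理']
```

Because the method is greedy, it depends entirely on the dictionary and can pick the wrong boundary when several segmentations are possible, a problem that space-delimited text like English largely avoids at the tokenization stage.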

Emerging Trends

Regarding trends, modern NLP aims to create systems that are smarter and more "knowledgeable". Larger language models (with more parameters and training data) like GPT-4, GPT-5, etc., are expected to continue improving natural language understanding and generation.

Explainable NLP

Researchers are working to make NLP explainable, so that we can understand which language features led a machine to a particular decision, instead of treating it as a mysterious "black box."

Real-world Knowledge Integration

New models can combine language processing with knowledge bases or external data to better understand context.

Real-time Information

Question-answering systems can look up information from Wikipedia or the internet in real-time

Enhanced Accuracy

Provides accurate answers rather than relying solely on learned data
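The idea of looking up external knowledge before answering can be sketched as a tiny retrieval step. This is a toy illustration that assumes a hypothetical in-memory knowledge base and ranks passages by simple word overlap; real systems typically search large indexes with learned embeddings and then pass the retrieved text to a language model, which is not included here.

```python
import re

# Hypothetical in-memory knowledge base for this example.
KNOWLEDGE = [
    "BERT is a language model from Google that improved search quality.",
    "T9 was a word suggestion system on old phones.",
    "Word2Vec and GloVe are word embedding techniques.",
]

def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of word-like tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, passages: list[str], k: int = 1) -> list[str]:
    """Return the k passages that share the most words with the question."""
    query = tokenize(question)
    ranked = sorted(passages, key=lambda p: len(query & tokenize(p)), reverse=True)
    return ranked[:k]

def build_prompt(question: str, passages: list[str]) -> str:
    """Combine retrieved context and the question into one model input."""
    context = "\n".join(f"- {p}" for p in retrieve(question, passages))
    return f"Answer using only this context:\n{context}\nQuestion: {question}"

print(build_prompt("Which company built BERT?", KNOWLEDGE))
```

Grounding the answer in retrieved text means the system does not have to rely solely on what the model memorized during training.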

Multimodal NLP

Multimodal NLP processes text, images, and audio together so that machines can understand language in a broader context.

NLP is also moving closer to general AI with interdisciplinary research involving cognitive science and neuroscience, aiming to simulate how humans truly understand language.

Conclusion

In summary, Natural Language Processing has been, is, and will continue to be a core field in AI with vast potential. From helping computers understand human language to automating numerous language tasks, NLP is making a profound impact on all aspects of life and technology.

With the development of deep learning and big data, we can expect smarter machines with more natural communication in the near future. Natural language processing is the key to bridging the gap between humans and computers, bringing technology closer to human life in a natural and efficient way.
