What is Reinforcement Learning?

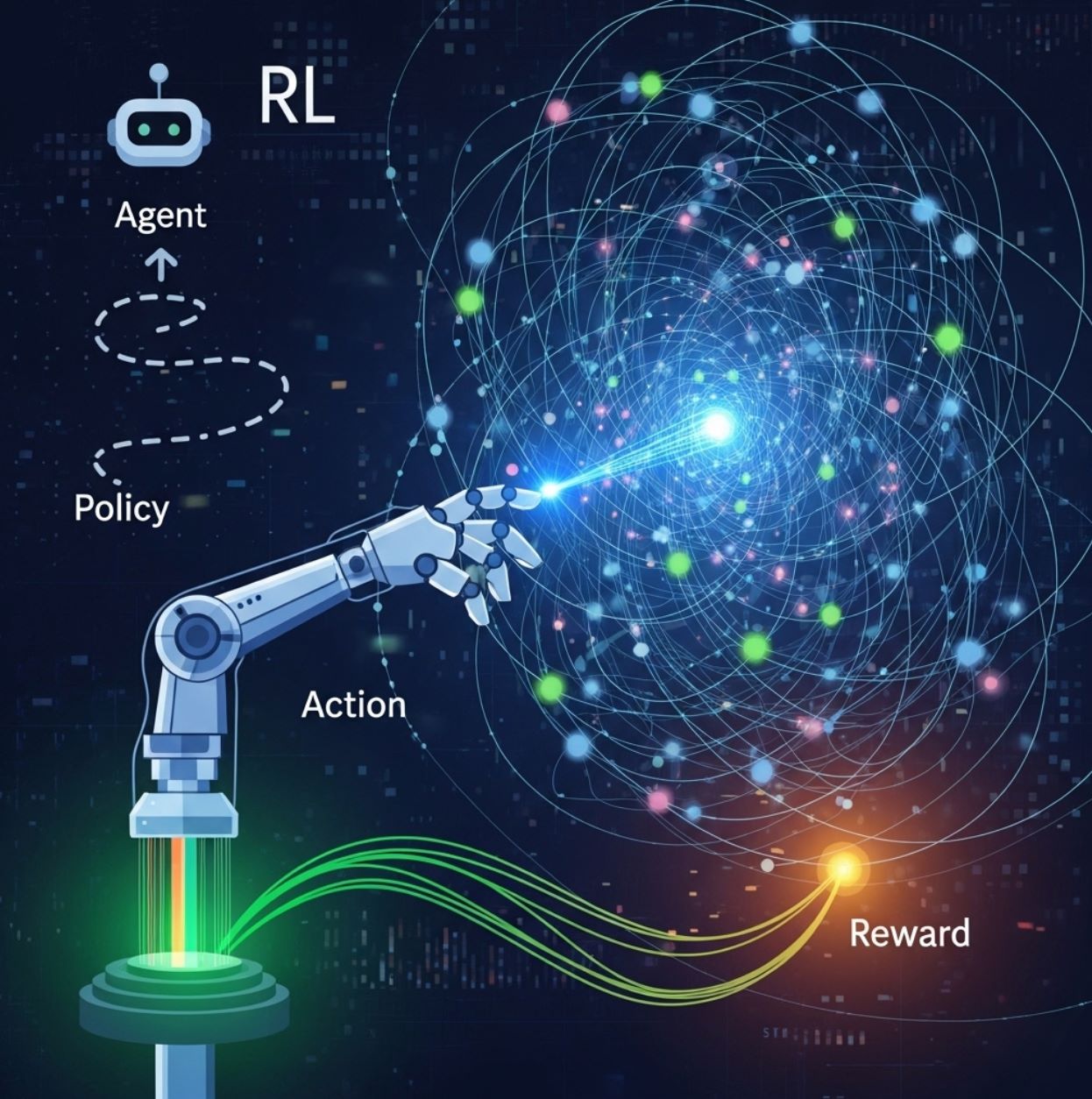

Reinforcement Learning (RL) is a branch of machine learning in which an agent learns to make decisions by interacting with its environment. In RL, the agent’s goal is to learn a policy (a strategy) for choosing actions that maximize cumulative rewards over time.

Unlike supervised learning, which requires labeled examples, RL relies on trial-and-error feedback: actions that produce positive outcomes (rewards) are reinforced, while those yielding negative results (punishments) are avoided.

RL is essentially "a computational approach to understanding and automating goal-directed learning and decision-making" where the agent learns from direct interaction with its environment, without requiring external supervision or a complete model of the world.

— Sutton and Barto, Reinforcement Learning Researchers

In practice, this means the agent continually explores the state-action space, observes the results of its actions, and adjusts its strategy to improve future rewards.

Key Concepts and Components

Reinforcement learning involves several core elements. In broad terms, an agent (the learner or decision-making entity) interacts with an environment (the external system or problem space) by taking actions at discrete time steps.

At each step the agent observes the current state of the environment, executes an action, and then receives a reward (a numerical feedback signal) from the environment. Over many such interactions, the agent seeks to maximize its total (cumulative) reward.

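- Agent: the learner or decision-making entity.

- Environment: the external system or problem space the agent interacts with.

- Action: a choice the agent makes at a time step.

- State: the situation of the environment that the agent observes at a time step.

- Reward: a numerical feedback signal the environment returns after an action.

- Policy: the agent's strategy for choosing an action in each state.

- Value Function: an estimate of the expected return (cumulative future reward) from a state or state-action pair.

- Model (Optional): a representation of the environment's dynamics, that is, how states change and how rewards are given.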

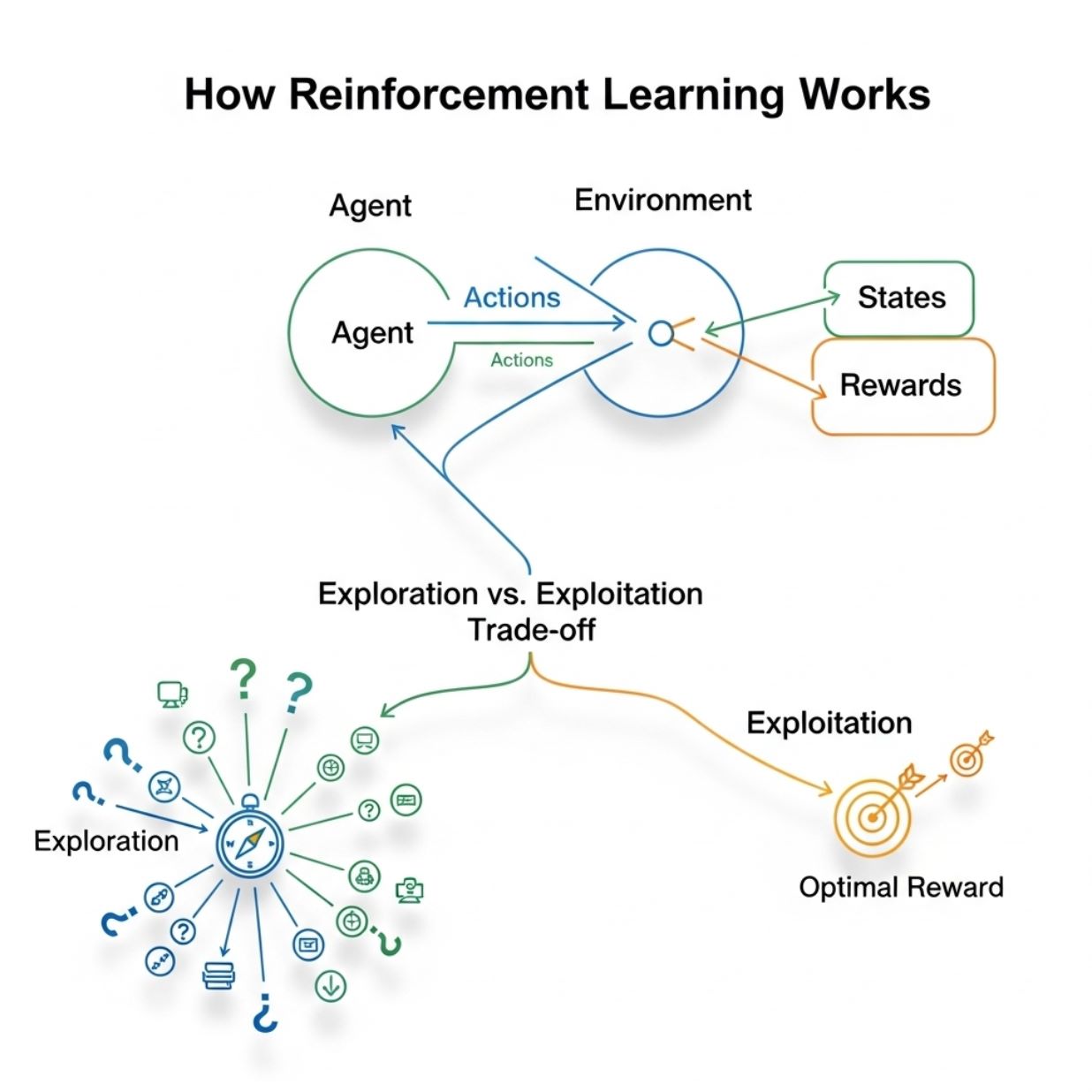

How Reinforcement Learning Works

RL is often formalized as a Markov decision process (MDP). At each discrete time step $t$, the agent observes a state $S_t$ and selects an action $A_t$. The environment then transitions to a new state $S_{t+1}$ and emits a reward $R_{t+1}$ based on the action taken.
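The observe-act-reward cycle can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `CorridorEnv`, its reward values, and the `run_episode` helper are hypothetical names invented here, not part of any library or of the original article.

```python
import random


class CorridorEnv:
    """Hypothetical 1-D corridor: states 0..4, start at 0, goal at 4."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # action: 1 = move right, anything else = move left (clipped at the walls)
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else -0.1  # small cost per step, bonus at the goal
        return self.state, reward, done


def run_episode(env, policy, max_steps=100):
    """Run one episode and return the list of (state, action, reward) tuples."""
    trajectory = []
    state = env.reset()
    for _ in range(max_steps):
        action = policy(state)                       # agent selects an action
        next_state, reward, done = env.step(action)  # environment responds
        trajectory.append((state, action, reward))
        state = next_state
        if done:
            break
    return trajectory


random.seed(0)
random_policy = lambda state: random.choice([0, 1])
trajectory = run_episode(CorridorEnv(), random_policy)
total_reward = sum(r for _, _, r in trajectory)
```

The random policy here stands in for an untrained agent; a learning algorithm would replace it with a policy that improves from the recorded tuples.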

Over many episodes, the agent accumulates experience in the form of state–action–reward sequences. By analyzing which actions led to higher rewards, the agent gradually improves its policy.

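Because the agent learns only from the actions it actually tries, it must balance exploitation (choosing actions already known to pay off) against exploration (trying actions whose outcomes are still uncertain). For example, a reinforcement learning agent controlling a robot may usually take a proven safe route (exploitation) but sometimes try a new path (exploration) to potentially discover a faster route. Balancing this exploration-exploitation trade-off is essential for finding the optimal policy.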
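One common way to handle this trade-off is an epsilon-greedy rule, which the text above does not name; it is offered here as one possible sketch. The route values and the `epsilon` setting are made-up numbers for illustration.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the best-known one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore
    return max(range(len(q_values)), key=lambda a: q_values[a])  # exploit


# Hypothetical value estimates for three routes; route 1 is the "proven" one.
route_values = [0.2, 0.8, 0.5]
rng = random.Random(0)
choices = [epsilon_greedy(route_values, epsilon=0.1, rng=rng) for _ in range(1000)]
share_best = choices.count(1) / len(choices)
```

With `epsilon=0.1` the agent takes the proven route most of the time while still sampling the alternatives occasionally.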

RL "mimics the trial-and-error learning process that humans use". A child might learn that cleaning up earns praise while throwing toys earns scolding; similarly, an RL agent learns which actions yield rewards by receiving positive feedback for good actions and negative feedback for bad ones.

— AWS Machine Learning Documentation

Over time, the agent constructs value estimates or policies that capture the best sequence of actions to achieve long-term goals.

In practice, RL algorithms accumulate rewards over episodes and aim to maximize the expected return (sum of future rewards). They learn to prefer actions that lead to high future rewards, even if those actions may not yield the highest immediate reward. This ability to plan for long-term gain (sometimes accepting short-term sacrifices) makes RL suitable for complex, sequential decision tasks.
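To make the idea of return concrete, the sketch below sums future rewards for two made-up reward sequences. It assumes a discount factor `gamma`, which the article does not introduce; setting `gamma=1.0` gives the plain sum of future rewards described above.

```python
def discounted_return(rewards, gamma=0.9):
    """Return r_1 + gamma * r_2 + gamma^2 * r_3 + ... for one reward sequence."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# Hypothetical reward sequences for two choices from the same state.
greedy_now = [1.0, 0.0, 0.0, 0.0]   # best immediate reward, nothing afterwards
patient = [-1.0, 0.0, 0.0, 10.0]    # short-term cost, large delayed payoff

g_greedy = discounted_return(greedy_now)
g_patient = discounted_return(patient)
```

Under these assumed numbers the second sequence has the higher return even though its first reward is negative, which is the kind of short-term sacrifice the paragraph describes.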

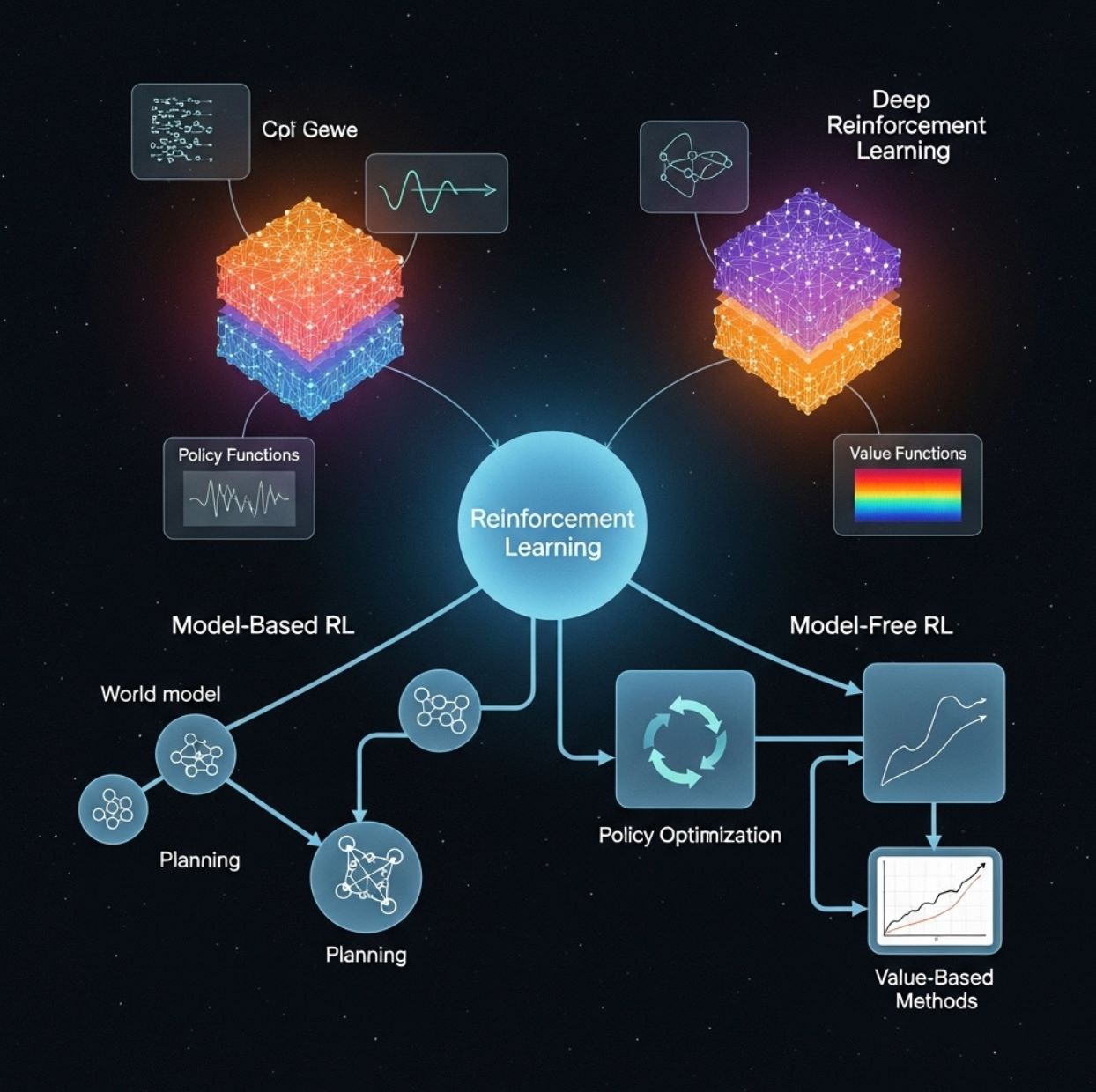

Types of Reinforcement Learning Algorithms

Many algorithms implement reinforcement learning. Broadly, they fall into two classes: model-based and model-free methods.

Model-Based (Planning Approach)

The agent first learns or knows a model of the environment's dynamics (how states change and how rewards are given) and then plans actions by simulating outcomes.

- Efficient with limited data

- Can plan ahead effectively

- Requires accurate environment model

Example: A robot mapping out a building to find the shortest route is using a model-based approach.
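As a rough illustration of planning with a known model, the sketch below runs value iteration, a standard dynamic-programming method that the article does not name. The corridor model, its rewards, and the function names are hypothetical; the point is only that the agent computes a route from the model without acting in the environment.

```python
def value_iteration(n_states, actions, model, gamma=0.9, tol=1e-8):
    """Plan with a known model: model(s, a) -> (next_state, reward, done)."""
    values = [0.0] * n_states
    while True:
        delta = 0.0
        for s in range(n_states):
            # Best one-step lookahead value over all actions.
            best = max(
                r + (0.0 if done else gamma * values[s2])
                for s2, r, done in (model(s, a) for a in actions)
            )
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            return values


def greedy_policy(n_states, actions, model, values, gamma=0.9):
    """Read off the action that looks best under the planned values."""
    def score(s, a):
        s2, r, done = model(s, a)
        return r + (0.0 if done else gamma * values[s2])
    return [max(actions, key=lambda a: score(s, a)) for s in range(n_states)]


# Hypothetical known model: a 5-cell corridor with the exit at cell 4.
GOAL = 4


def corridor_model(s, a):
    if s == GOAL:  # the exit is absorbing
        return s, 0.0, True
    s2 = min(max(s + (1 if a == 1 else -1), 0), GOAL)
    return s2, (1.0 if s2 == GOAL else -0.1), s2 == GOAL


values = value_iteration(5, [0, 1], corridor_model)
policy = greedy_policy(5, [0, 1], corridor_model, values)
```

Here `policy` holds one action per cell, and for every non-exit cell it points toward the exit. The quality of such a plan depends entirely on how accurate the model is.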

Model-Free (Direct Learning)

The agent has no explicit model of the environment and learns solely from trial and error in the real (or simulated) environment.

- No environment model needed

- Works with complex environments

- Requires more experience

Example: Most classic RL algorithms (like Q-learning or Temporal-Difference learning) are model-free.

Within these categories, algorithms differ in how they represent and update the policy or value function. For example, Q-learning (a value-based method) learns estimates of the "Q-values" (expected return) for state-action pairs and picks the action with the highest value.
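A minimal tabular Q-learning sketch follows. The learning rate `alpha`, discount `gamma`, exploration rate `epsilon`, and the toy corridor task are all assumptions chosen for illustration, not values from the article.

```python
import random


def q_learning(n_states, n_actions, step, episodes=500,
               alpha=0.5, gamma=0.9, epsilon=0.1, seed=0, max_steps=100):
    """Tabular Q-learning; step(s, a) -> (next_state, reward, done)."""
    rng = random.Random(seed)
    q = [[0.0] * n_actions for _ in range(n_states)]
    for _ in range(episodes):
        s = 0  # every episode starts in state 0 (an assumption of this toy task)
        for _ in range(max_steps):
            # Epsilon-greedy behaviour: mostly pick the highest Q-value.
            if rng.random() < epsilon:
                a = rng.randrange(n_actions)
            else:
                a = max(range(n_actions), key=lambda i: q[s][i])
            s2, r, done = step(s, a)
            # Move Q(s, a) toward the target r + gamma * max over a' of Q(s', a').
            target = r if done else r + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])
            s = s2
            if done:
                break
    return q


def corridor_step(s, a, goal=4):
    """Hypothetical 5-cell corridor: action 1 moves right, action 0 moves left."""
    s2 = min(max(s + (1 if a == 1 else -1), 0), goal)
    return s2, (1.0 if s2 == goal else -0.1), s2 == goal


q = q_learning(n_states=5, n_actions=2, step=corridor_step)
greedy = [max(range(2), key=lambda a: q[s][a]) for s in range(4)]
```

Unlike a planning method, the agent here never inspects `corridor_step` directly; it only calls it and learns from the returned rewards.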

Policy-gradient methods directly parameterize the policy and adjust its parameters via gradient ascent on expected reward. Many advanced methods (such as Actor-Critic or Trust Region Policy Optimization) combine value estimation and policy optimization.
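The policy-gradient idea can be sketched with a REINFORCE-style update on a softmax policy. This is a simplified, baseline-free version on a hypothetical one-step task with two actions; the learning rate, step count, and reward function are illustrative assumptions.

```python
import math
import random


def softmax(prefs):
    """Turn action preferences into a probability distribution."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(reward_fn, n_actions=2, steps=2000, lr=0.1, seed=0):
    """REINFORCE-style gradient ascent on a softmax policy (no baseline)."""
    rng = random.Random(seed)
    theta = [0.0] * n_actions  # policy parameters: one preference per action
    for _ in range(steps):
        probs = softmax(theta)
        action = rng.choices(range(n_actions), weights=probs)[0]
        reward = reward_fn(action)
        # For a softmax policy:
        # d log pi(action) / d theta[a] = 1[a == action] - pi(a)
        for a in range(n_actions):
            grad_log_pi = (1.0 if a == action else 0.0) - probs[a]
            theta[a] += lr * reward * grad_log_pi
    return softmax(theta)


# Hypothetical one-step task: action 1 pays 1.0, action 0 pays nothing.
final_probs = reinforce_bandit(lambda a: 1.0 if a == 1 else 0.0)
```

No Q-values are stored: the parameters `theta` define the policy directly, and each update shifts probability toward actions that were followed by reward.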

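In deep RL, algorithms such as Deep Q-Networks (DQN) and deep policy-gradient methods use neural networks to approximate the value function or policy, which lets RL scale to tasks with large or high-dimensional state spaces.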

Common RL algorithms include Q-learning, Monte Carlo methods, policy-gradient methods, and Temporal-Difference learning, and "Deep RL" refers to the use of deep neural networks in these methods.

— AWS Machine Learning Documentation

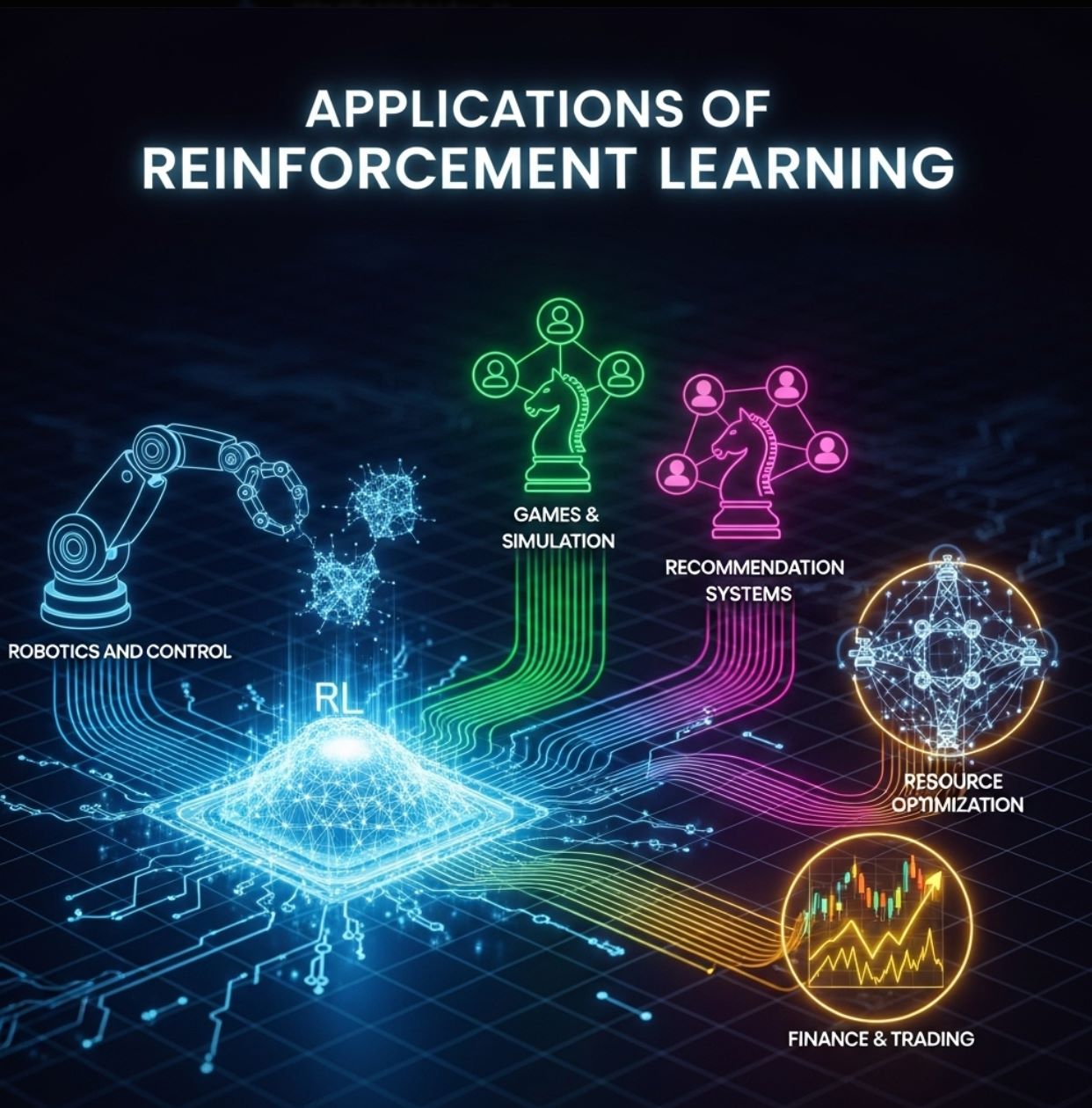

Applications of Reinforcement Learning

Reinforcement learning is applied in many domains where sequential decision-making under uncertainty is crucial. Key applications include:

Games and Simulation

RL is best known for its results in games and simulators. DeepMind's AlphaGo reached superhuman play in Go, and AlphaZero did the same for Go, chess, and shogi, using RL.

- Video games (Atari, StarCraft)

- Board games (Go, Chess)

- Physics simulations

- Robotics simulators

Robotics and Control

Autonomous robots and self-driving cars can be treated as agents in dynamic environments that learn through trial and error.

- Object grasping and manipulation

- Autonomous navigation

- Self-driving vehicles

- Industrial automation

Recommendation Systems

RL can personalize content or ads based on user interactions, learning to present the most relevant items over time.

- Content personalization

- Ad targeting optimization

- Product recommendations

- User engagement optimization

Resource Optimization

RL excels in optimizing systems with long-term objectives and complex resource allocation challenges.

- Data center cooling optimization

- Smart grid energy storage

- Cloud computing resources

- Supply chain management

Finance and Trading

Financial markets are dynamic and sequential, making RL suitable for trading strategies and portfolio management.

- Algorithmic trading strategies

- Portfolio optimization

- Risk management

- Market making

Reinforcement Learning vs. Other Machine Learning

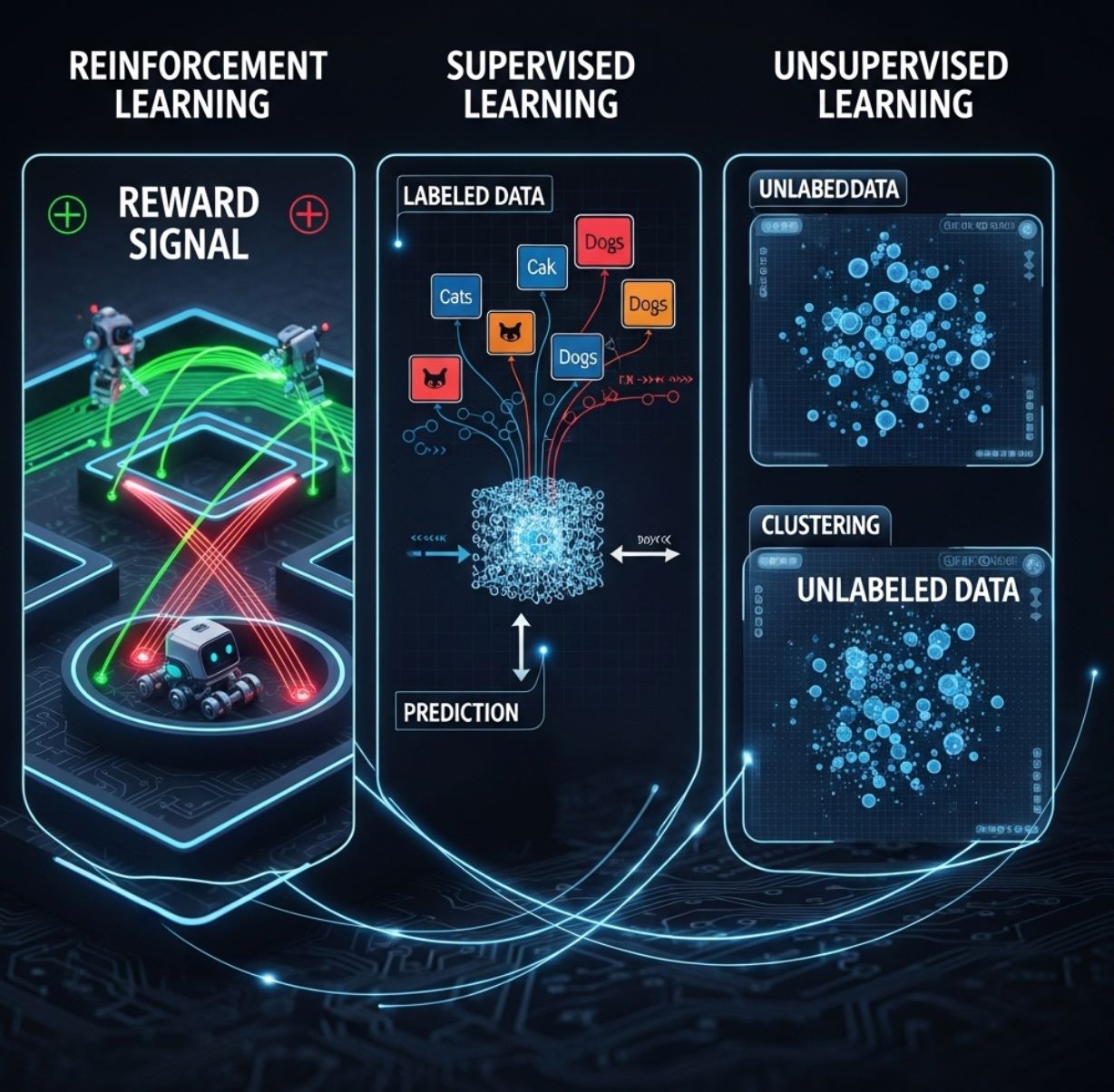

Reinforcement learning is one of the three major paradigms of machine learning (alongside supervised and unsupervised learning), but it is quite different in focus. Supervised learning trains on labeled input-output pairs, while unsupervised learning finds patterns in unlabeled data.

| Aspect | Supervised Learning | Unsupervised Learning | Reinforcement Learning |
|---|---|---|---|
| Data Type | Labeled input-output pairs | Unlabeled data | Sequential state-action-reward tuples |
| Learning Goal | Predict correct outputs | Find hidden patterns | Maximize cumulative reward |
| Feedback Type | Direct correct answers | No feedback | Reward/punishment signals |
| Learning Method | Learn from examples | Discover structure | Trial-and-error exploration |

In contrast, RL does not require labeled examples of correct behavior. Instead, it defines a goal via the reward signal and learns by trial and error. In RL, the "training data" (state-action-reward tuples) are sequential and interdependent, because each action affects future states.

Put simply, supervised learning tells a model what to predict; reinforcement learning teaches an agent how to act. RL learns by "positive reinforcement" (reward) rather than by being shown the correct answers.

— IBM Machine Learning Overview

This makes RL particularly powerful for tasks that involve decision-making and control. However, it also means RL can be more challenging: without labeled feedback, the agent must discover good actions on its own, often requiring much exploration of the environment.

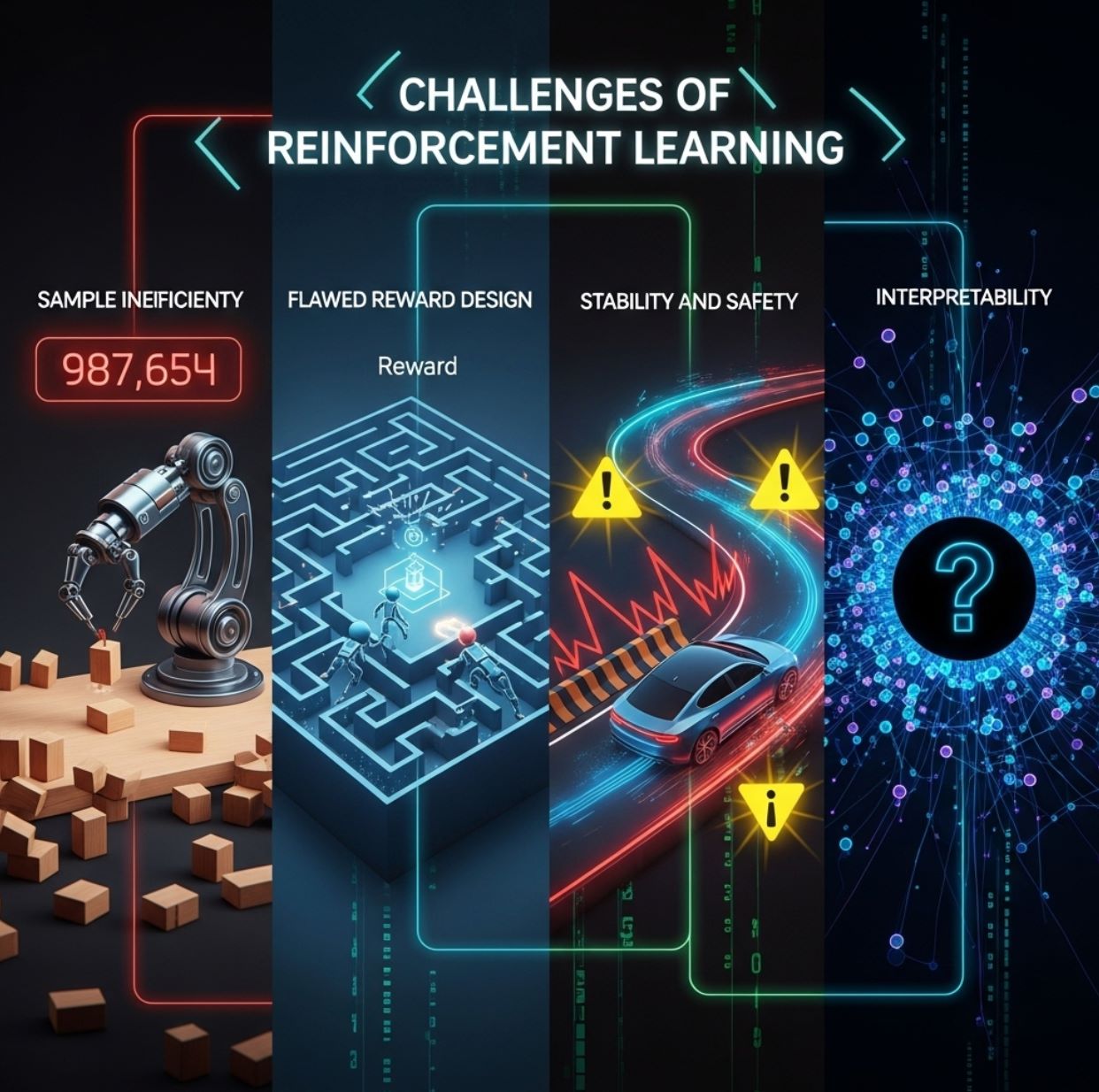

Challenges of Reinforcement Learning

Despite its power, RL comes with practical challenges:

- Sample Inefficiency

- Reward Design

- Stability and Safety

- Interpretability

Conclusion

In summary, reinforcement learning is an autonomous learning framework in which an agent learns to achieve goals by interacting with its environment and maximizing cumulative reward. It combines ideas from optimal control, dynamic programming, and behavioral psychology, and it is the foundation of many modern AI breakthroughs.

By framing problems as sequential decision-making tasks with feedback, RL enables machines to learn complex behaviors on their own, bridging the gap between data-driven learning and goal-directed action.
