AI Achievements

Artificial Intelligence (AI) has made remarkable strides in recent years, transforming industries from healthcare and finance to art and entertainment. From generative language models that craft human-like text to AI systems mastering complex games and scientific research, these achievements showcase the rapid evolution of machine intelligence. In this article, we explore the most impressive recent AI breakthroughs, highlighting their impact, potential applications, and the future of AI innovation.

Over the past few years (2023–2025), artificial intelligence has leapt forward on many fronts: large language models (LLMs) and chatbots, multimodal systems, scientific AI tools, and robotics all saw breakthroughs.

Tech giants released new AI assistants, open-source communities rolled out powerful models, and even regulators moved to address AI's impact.

Below we survey the most striking achievements, from GPT-4 extensions and Google's Gemini to AlphaFold's Nobel Prize and AI-driven discoveries in science and art.

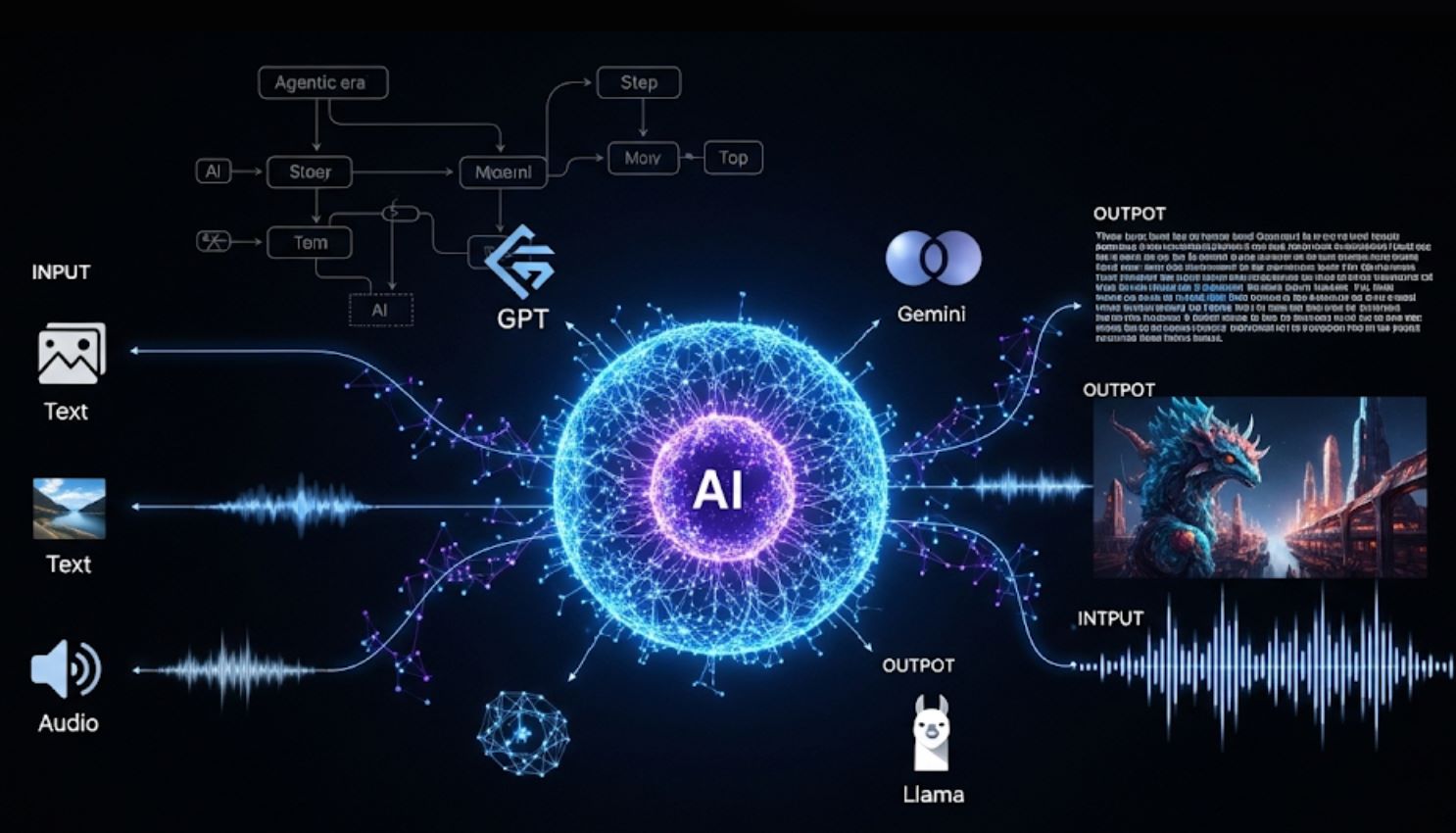

Generative Language Models and Chatbots

Modern LLMs became vastly more capable and multimodal. OpenAI's GPT-4 Turbo (announced Nov 2023) can now process 128,000 tokens in one prompt (roughly 300 pages of text) and is much cheaper to run than GPT-4.
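The "roughly 300 pages" figure can be checked with back-of-the-envelope arithmetic. The sketch below assumes about 0.75 English words per token and about 300 words per printed page. Both ratios are rules of thumb, not OpenAI specifications, and real values vary with language and formatting.

```python
def estimate_pages(tokens: int, words_per_token: float = 0.75, words_per_page: int = 300) -> float:
    """Rough page count for a token budget (both ratios are assumed rules of thumb)."""
    words = tokens * words_per_token   # 128,000 tokens -> ~96,000 words
    return words / words_per_page      # ~96,000 words -> ~320 pages

print(f"{estimate_pages(128_000):.0f} pages")  # prints "320 pages"
```

Under these assumptions a 128K-token context holds about 96,000 words, or roughly 320 pages, which agrees with the "roughly 300 pages" estimate.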

GPT-4o represents a fundamental shift toward truly multimodal AI that can seamlessly handle text, images, and audio in real-time conversations.

— OpenAI Research Team, May 2024

In May 2024 OpenAI introduced GPT-4o (Omni), an upgraded model that handles text, images, and audio in real time – effectively giving GPT-4 conversational "vision and hearing". ChatGPT itself now has built-in image and voice features: users can upload photos or speak to the bot, and it will respond based on that visual or audio input.
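An image-plus-text prompt to GPT-4o is sent as a single user message whose content combines parts of different types. The sketch below only builds a request body in the format OpenAI documents for its Chat Completions endpoint: a `content` list of `text` and `image_url` parts, with the image inlined as a base64 data URL. The question and the placeholder image bytes are hypothetical. Actually sending the request, which needs an API key and an HTTP client, is not shown.

```python
import base64
import json

def build_vision_request(question: str, image_bytes: bytes, mime: str = "image/png") -> dict:
    """Build a Chat Completions-style request body that mixes text and an image."""
    # The image is embedded inline as a base64 data URL.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": "gpt-4o",
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url",
                     "image_url": {"url": f"data:{mime};base64,{encoded}"}},
                ],
            }
        ],
    }

# Placeholder bytes stand in for a real photo (hypothetical input).
body = build_vision_request("What is in this photo?", b"\x89PNG placeholder")
print(json.dumps(body, indent=2))
```

Because text and image travel together in one message, the model can answer a question about the picture in a single turn, just as a user would when uploading a photo in ChatGPT.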

GPT-4 Turbo & GPT-4o

GPT-4 Turbo (Nov 2023): Lowered costs and extended context length to 128K tokens.

GPT-4o (May 2024): Made AI truly multimodal. It accepts text, audio and images as input and responds in text or speech with near-human response times.

ChatGPT Evolution

By late 2023, ChatGPT "can now see, hear, and speak": users can upload images or speak their prompts aloud.

Integrated DALL·E 3 (Oct 2023) for conversational image generation.

Google's Gemini Series

In Dec 2024, Google DeepMind rolled out the first Gemini 2.0 models ("Flash" and prototypes) designed for the "agentic era" – AI that can autonomously carry out multi-step tasks.

- Coming to AI Overviews in Search, which reach over 1 billion people (limited testing began in Dec 2024)

- Enhanced reasoning capabilities

- Advanced multimodal abilities
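The "agentic" idea can be illustrated with a generic plan, act, observe loop. The model proposes a tool call, the program runs the tool, and the result is fed back to the model until it decides it is done. The sketch below is not Gemini's implementation. `fake_model` is a hard-coded stand-in for a real model, and the `calculator` tool is hypothetical.

```python
from typing import Callable

# Hypothetical toy tool: a "calculator" that only handles addition.
TOOLS: dict[str, Callable[[str], str]] = {
    "calculator": lambda expr: str(sum(int(x) for x in expr.split("+"))),
}

def fake_model(history: list[str]) -> tuple[str, str]:
    """Stand-in for a real model: returns (action, argument)."""
    if not any(h.startswith("observation:") for h in history):
        return ("calculator", "19+23")                  # plan: decide to use a tool
    return ("finish", history[-1].split(": ", 1)[1])    # answer from the last observation

def run_agent(task: str, max_steps: int = 5) -> str:
    history = [f"task: {task}"]
    for _ in range(max_steps):
        action, arg = fake_model(history)
        if action == "finish":
            return arg
        result = TOOLS[action](arg)                 # act: execute the chosen tool
        history.append(f"observation: {result}")    # observe: feed the result back
    raise RuntimeError("step limit reached")

print(run_agent("What is 19 + 23?"))  # prints 42
```

The step limit is a simple safeguard: a real agent driven by a model can loop indefinitely if it never decides to finish.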

Open Source & Enterprise Models

Meta released Llama 3 in April 2024 as open-weight 8B and 70B models, followed in July 2024 by a 405B-parameter Llama 3.1 model. Meta claimed state-of-the-art performance among open models.

- Anthropic's Claude 3 model family (March 2024)

- Microsoft Copilot integration

- OpenAI Assistants API

These models also power new assistant features and developer APIs, such as Google's AI Overviews in Search and OpenAI's Assistants API, making AI more accessible to developers and end users.

Multimodal & Creative AI Advances

AI's creativity and visual understanding have exploded. Text-to-image and text-to-video models reached new heights:

OpenAI's DALL·E 3 (Oct 2023) generates photorealistic images from prompts and is even integrated with ChatGPT for guided prompt-writing.

Google introduced Imagen 3 (Oct 2024) and Veo 2 (Dec 2024) – state-of-the-art text-to-image and text-to-video engines – which dramatically improve quality, detail, and consistency in AI art and video generation.

Even music AI improved with Google's MusicFX tools and related research (e.g. MusicLM experiments).

Advanced Generation Capabilities

- DALL·E 3 and Imagen 3: Can follow subtle prompts (including embedded text in images) with high fidelity

- Google's Veo 2: Generates short video clips from single text descriptions, marking significant progress in video synthesis

- Stable Diffusion and Midjourney: Released newer versions (Stable Diffusion 3, Midjourney v6 and v6.1) with enhanced realism in late 2023 and 2024

Apple Intelligence Integration

Apple launched Apple Intelligence (in iOS 18 and macOS 15, late 2024) – built-in generative AI on iPhone/iPad/Mac.

Writing & Communication

- Rewrite, proofread, summarize in Mail/Pages

- Enhanced Siri capabilities

- Natural language processing

Visual & Creative Tools

- Image Playground: Create illustrations via text

- Genmoji: AI-generated custom emoji

- Clean Up: Remove unwanted objects from photos

Historic Art Market Achievement

A striking example: in Nov 2024 Sotheby's sold the first painting by a humanoid robot.

Record-Breaking AI Art Sale

A portrait of Alan Turing painted by the AI-powered robot Ai-Da fetched US$1.08 million.

This record-breaking sale ("A.I. God: Portrait of Alan Turing") underscores AI's growing role in creativity and its cultural impact.

Early AI Art

- Novelty-focused outputs

- Surreal, abstract images

- Limited practical applications

- Basic text-to-image only

Modern AI Creativity

- Useful image generation (logos, diagrams, maps)

- Human-like realism

- Integrated creative workflows

- Multimodal capabilities

Overall, generative models are democratizing creativity: anyone can now generate art, music or video with a few words. The industry focus has shifted from mere novelty (surreal images) to useful image generation (logos, diagrams, maps) and human-like realism.

(In March 2025 OpenAI even released "4o Image Generation", integrating its best image model into GPT-4o for precise, photorealistic outputs guided by conversation.)

These tools are quickly being woven into apps, browsers, and creative workflows.

AI in Science, Medicine and Math

AI achievements have fueled scientific discovery and research advances:

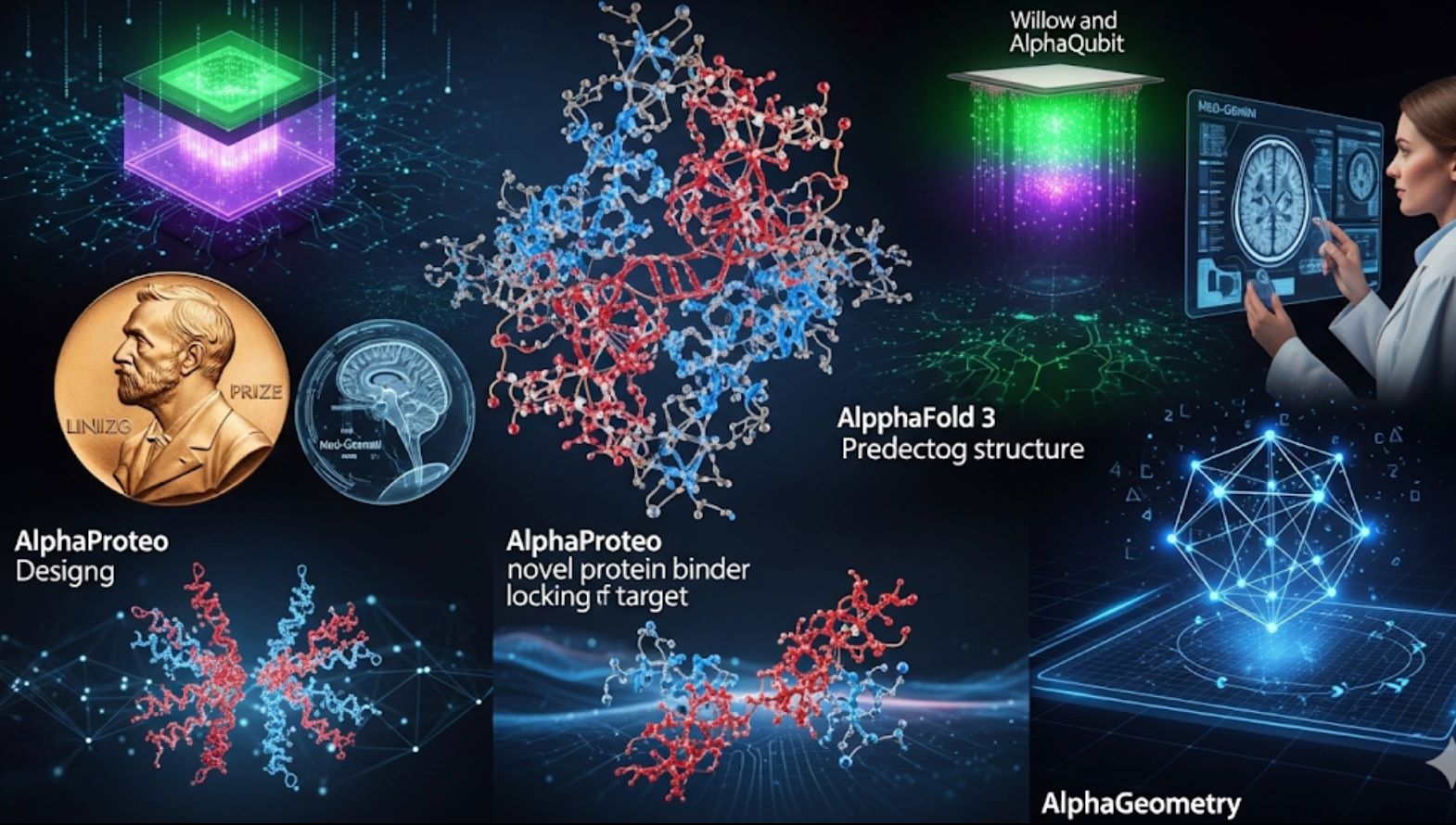

AlphaFold 3 – Revolutionary Biomolecular Prediction

In May 2024 Google DeepMind and Isomorphic Labs unveiled AlphaFold 3, a new model that predicts the 3D structures and interactions of proteins together with DNA, RNA, ligands and other molecules, with markedly improved accuracy.

Its creators released a free AlphaFold Server alongside it so researchers worldwide can predict molecular structures for non-commercial use, and in Nov 2024 they made the model code available for academic use. This expands on AlphaFold 2's protein-only predictions and is expected to transform drug discovery and genomics research.

AlphaProteo – Drug Design

Also in 2024, DeepMind announced AlphaProteo, an AI that designs novel protein binders – molecules that bind to target proteins with high strength.

- Accelerates antibody creation

- Develops biosensors

- Generates drug leads

- Creates protein structures for specified targets

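Mathematics – AlphaGeometry & AlphaProof

DeepMind's AlphaProof and AlphaGeometry 2 marked another breakthrough. Together they solved four of the six problems at the 2024 International Mathematical Olympiad (IMO).

- 19 seconds for AlphaGeometry 2 to solve the 2024 IMO geometry problem

- Silver-medal-level performance (28 of 42 points)

- Olympiad-level reasoning in algebra, number theory and geometry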

Quantum Computing Breakthroughs – AlphaQubit & Willow

AI also improved cutting-edge hardware. In 2024 Google announced AlphaQubit, an AI-based decoder that identifies errors in quantum computers (e.g. Google's Sycamore chips) far better than prior methods.

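Then in Dec 2024 Google unveiled Willow, a new quantum chip whose error rates fell as more qubits were added to its error-correcting code. Willow also completed a standard benchmark task (random circuit sampling) in under 5 minutes. Google estimates the same task would take one of today's fastest supercomputers about 10^25 years.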
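To see what a "decoder" does, consider the simplest error-correcting code: one logical bit copied onto three physical bits. Parity checks between neighbouring bits form the "syndrome", which points to a flipped bit without reading the logical value directly. The decoder then maps each syndrome to a correction. This is a toy, classically simulated illustration. AlphaQubit is a neural network that decodes far noisier surface-code syndromes on real hardware, and nothing here reproduces its method.

```python
# Toy 3-bit repetition code (bit-flip errors only), simulated classically.
SYNDROME_TABLE = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def encode(bit: int) -> list[int]:
    return [bit, bit, bit]                # one logical bit spread over three physical bits

def syndrome(q: list[int]) -> tuple[int, int]:
    # Parity checks between neighbours locate a single error.
    return (q[0] ^ q[1], q[1] ^ q[2])

def decode(q: list[int]) -> list[int]:
    flip = SYNDROME_TABLE[syndrome(q)]    # lookup-table decoder
    fixed = q.copy()
    if flip is not None:
        fixed[flip] ^= 1                  # undo the inferred bit flip
    return fixed

noisy = encode(1)
noisy[1] ^= 1                             # inject a single bit-flip error
print(syndrome(noisy), decode(noisy))     # prints (1, 1) [1, 1, 1]
```

A lookup table works here because there are only four possible syndromes. On real chips the measurements are themselves noisy and errors are correlated across many qubits. That harder decoding problem is the one learned decoders such as AlphaQubit aim to solve.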

Med-Gemini represents a significant leap in medical AI capabilities, achieving 91.1% accuracy on US medical exam benchmarks – a performance that surpasses previous models by a substantial margin.

— Google Health AI Research Team, 2024

In medicine and health, AI models made strides too. For example, Google's new Med-Gemini (fine-tuned on medical data) scored 91.1% on a US medical exam benchmark (USMLE-style), beating prior models by a wide margin.

AI foundation models for medical imaging were also released to improve image analysis, such as Google's Derm Foundation for dermatology and Path Foundation for pathology. Overall, AI is now an indispensable research partner, from mapping the human brain at nanoscale (with AI-assisted EM imaging) to accelerating TB screening in Africa, as reported by Google researchers.

AI in Robotics and Automation

Robots powered by AI are learning complex real-world tasks.

Tesla's Optimus humanoid robots were publicly demoed in October 2024 ("We, Robot" event). Several dozen Optimus units walked, stood and even danced on stage – though later reports noted the initial demos were partly remote-controlled by humans.

Still, the event highlighted rapid progress toward general-purpose robots.

DeepMind's ALOHA Robots

Google DeepMind made impressive headway on dexterous household tasks. ALOHA stands for "A Low-cost Open-source Hardware System for Bimanual Teleoperation". In 2024 DeepMind's ALOHA robots learned to tie shoelaces, hang a shirt, repair another robot, insert gears and even clean a kitchen, trained by imitating human demonstrations rather than following hand-coded routines.

The "ALOHA Unleashed" work showed robots coordinating two arms on dexterous tasks, an important step for general-purpose manipulation.

Robotic Transformers

DeepMind introduced RT-2 (Robotic Transformer 2), a vision-language-action model that can learn from both internet images and real robot data.

RT-2 lets robots interpret instructions like a human would by leveraging web knowledge. It was demonstrated helping a robot sort objects by following text commands.
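The RT-2 paper describes how robot actions are turned into "language". Each continuous action dimension is discretized into 256 uniform bins, so a command can be emitted as a short string of integer tokens, just like text. The sketch below shows that discretization. The [-1, 1] ranges and the example 7-dimensional action are assumptions for illustration, not RT-2's actual calibration.

```python
BINS = 256

def to_token(value: float, low: float = -1.0, high: float = 1.0) -> int:
    """Map a continuous value to one of BINS uniform bins (the range is an assumption)."""
    clipped = min(max(value, low), high)
    index = int((clipped - low) / (high - low) * BINS)
    return min(index, BINS - 1)           # value == high falls into the last bin

def from_token(index: int, low: float = -1.0, high: float = 1.0) -> float:
    """Recover the centre of a bin."""
    width = (high - low) / BINS
    return low + (index + 0.5) * width

# Hypothetical 7-D action: xyz shift, xyz rotation, gripper extension.
action = [0.10, -0.25, 0.0, 0.0, 0.05, -1.0, 1.0]
tokens = [to_token(a) for a in action]
print(" ".join(map(str, tokens)))         # the "text" a vision-language-action model would emit
```

Decoding reverses the mapping: each integer token becomes the centre of its bin. With a range of 2 split into 256 bins, the round trip loses at most half a bin width, or 1/256.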

Industry Applications

Other companies also advanced: Boston Dynamics continued refining Atlas and Spot robots (though no single headline breakthrough), and AI-driven autonomous vehicles improved (Tesla's Full Self-Driving Beta saw wider rollout, though full autonomy remains unsolved).

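AI-centric startups such as Figure AI raised large funding rounds to build general-purpose humanoid robots. Their early pilots target manufacturing, and their longer-term aim is helping with household chores.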

Demonstration Phase

- Impressive controlled demonstrations

- Specific task learning

- Limited real-world deployment

- Human oversight required

Full Autonomy

- Safe human collaboration

- General-purpose capabilities

- Reliable real-world operation

- Deployment at scale

These efforts show robots doing progressively harder tasks without explicit programming. However, truly autonomous humanoids are still on the horizon.

The demonstrations (Optimus, ALOHA, RT-2) are milestones, but researchers caution there's more work before robots can safely and reliably work alongside humans at scale.

AI in Products, Industry and Society

AI's impact extends to everyday products and even policy:

AI Integration in Everyday Technology

Major tech products incorporated AI assistants. Microsoft's Copilot (embedded in Windows, Office and Bing), Google's Gemini assistant (formerly Bard) and Gemini-powered AI Overviews in Search all brought LLM power to users.

Apple's devices got Apple Intelligence (as above) and hardware makers like Nvidia sold record numbers of AI GPUs, powering both cloud and consumer AI.

EU AI Act - First Comprehensive AI Law

Reflecting AI's reach, regulators acted too. On August 1, 2024, the EU AI Act came into force as the world's first comprehensive AI law, with its obligations phasing in over the following years.

Risk-Based Framework

- Minimal risk: Few or no specific rules (spam filters, video games)

- Limited risk (transparency rules): AI systems such as chatbots must disclose they are AI

- High risk: Strict oversight (medical, hiring tools)

- Unacceptable risk: Banned (government social scoring)
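The four tiers work like a lookup from use case to obligations. The sketch below models them as a Python enum named after the Act's own categories (minimal, limited, high and unacceptable risk). The obligation summaries are condensed, and the use-case mapping is an illustrative assumption. In practice, classification depends on the Act's legal text and annexes.

```python
from enum import Enum

class RiskTier(Enum):
    """Simplified EU AI Act risk tiers with a condensed headline consequence."""
    MINIMAL = "few or no specific obligations"
    LIMITED = "transparency: people must be told they are dealing with AI"
    HIGH = "strict requirements and human oversight"
    UNACCEPTABLE = "prohibited"

# Illustrative mapping only; real classification follows the legal text.
EXAMPLE_USES = {
    "spam filter": RiskTier.MINIMAL,
    "video game AI": RiskTier.MINIMAL,
    "customer-service chatbot": RiskTier.LIMITED,
    "CV-screening hiring tool": RiskTier.HIGH,
    "medical diagnosis aid": RiskTier.HIGH,
    "government social scoring": RiskTier.UNACCEPTABLE,
}

for use, tier in EXAMPLE_USES.items():
    print(f"{use:28} {tier.name:13} {tier.value}")
```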

Global Impact

This set of rules (along with forthcoming guidelines on general-purpose models) is a major achievement in AI governance and is likely to influence standards worldwide.

Historic Investment and Valuations

The AI sector itself saw historic funding and valuations:

| Company | Achievement | Value/Impact | Significance |
|---|---|---|---|
| OpenAI | Valuation | $157 billion | Record |
| NVIDIA | Market Cap | $3.5+ trillion | AI Hardware Leader |
| Multiple Startups | Funding Rounds | Multi-billion $ | Growth Phase |

These numbers underscore how AI has become central to the tech economy.

Looking Forward: AI's Transformative Impact

In short, AI is no longer confined to labs or novelty demos – it's embedded in phones, cars, workplaces and public policy.

Knowledge Revolution

GPT-4's vast knowledge capabilities demonstrate AI's potential as a universal knowledge assistant.

Scientific Breakthroughs

AlphaFold's scientific breakthroughs show AI's power to accelerate human discovery and research.

Daily Integration

AI is becoming seamlessly integrated into our daily tools and workflows.

The advancements above, from GPT-4's vast knowledge to AlphaFold's scientific breakthroughs, demonstrate AI's rapid maturation.

As 2025 unfolds, these achievements foreshadow even more powerful and practical AI applications in our daily lives.
