Generative AI is a branch of artificial intelligence that uses deep-learning (neural network) models trained on massive datasets to create new content. These models learn patterns in text, images, audio or other data so they can produce original outputs (like articles, images, or music) in response to user prompts.

In other words, generative AI generates media “from scratch” rather than simply analyzing or classifying existing data. The diagram here illustrates how generative models (center circle) sit within neural networks, which are part of machine learning and the broader AI field.

For example, IBM describes generative AI as deep-learning models that “generate high-quality text, images, and other content based on the data they were trained on”. These models rely on sophisticated neural algorithms that identify patterns in huge datasets to produce novel outputs.

How Generative AI Works

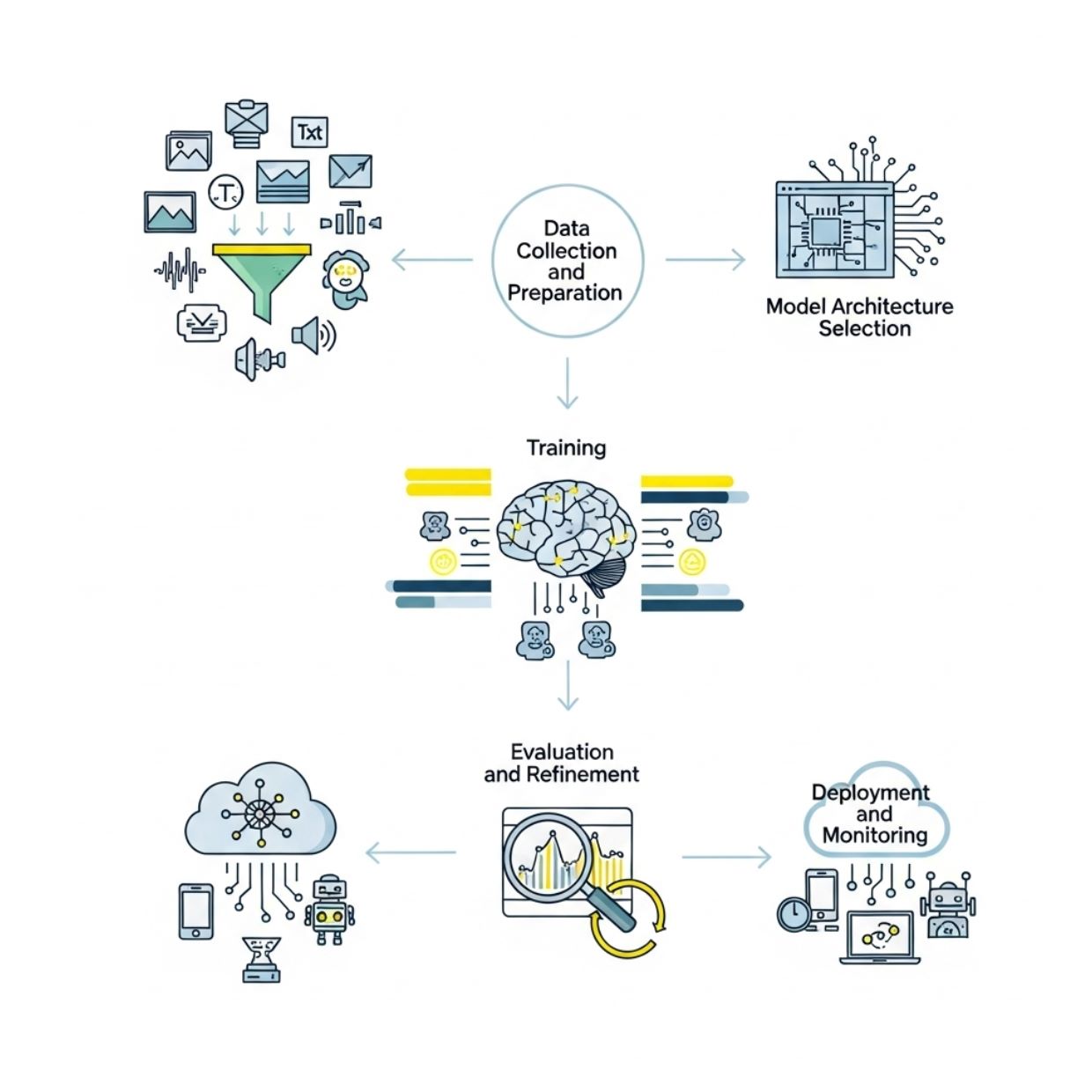

Building a generative AI system typically involves three main phases (training, fine-tuning, and generation), often supplemented by a retrieval step:

- Training (Foundation Model): A large neural network (often called a foundation model) is trained on vast amounts of raw, unlabeled data (e.g. terabytes of internet text, images or code). During training, the model learns by predicting missing pieces (for instance, filling in the next word in millions of sentences). Over many iterations it adjusts itself to capture complex patterns and relationships in the data. The result is a neural network with encoded representations that can generate content autonomously in response to inputs.

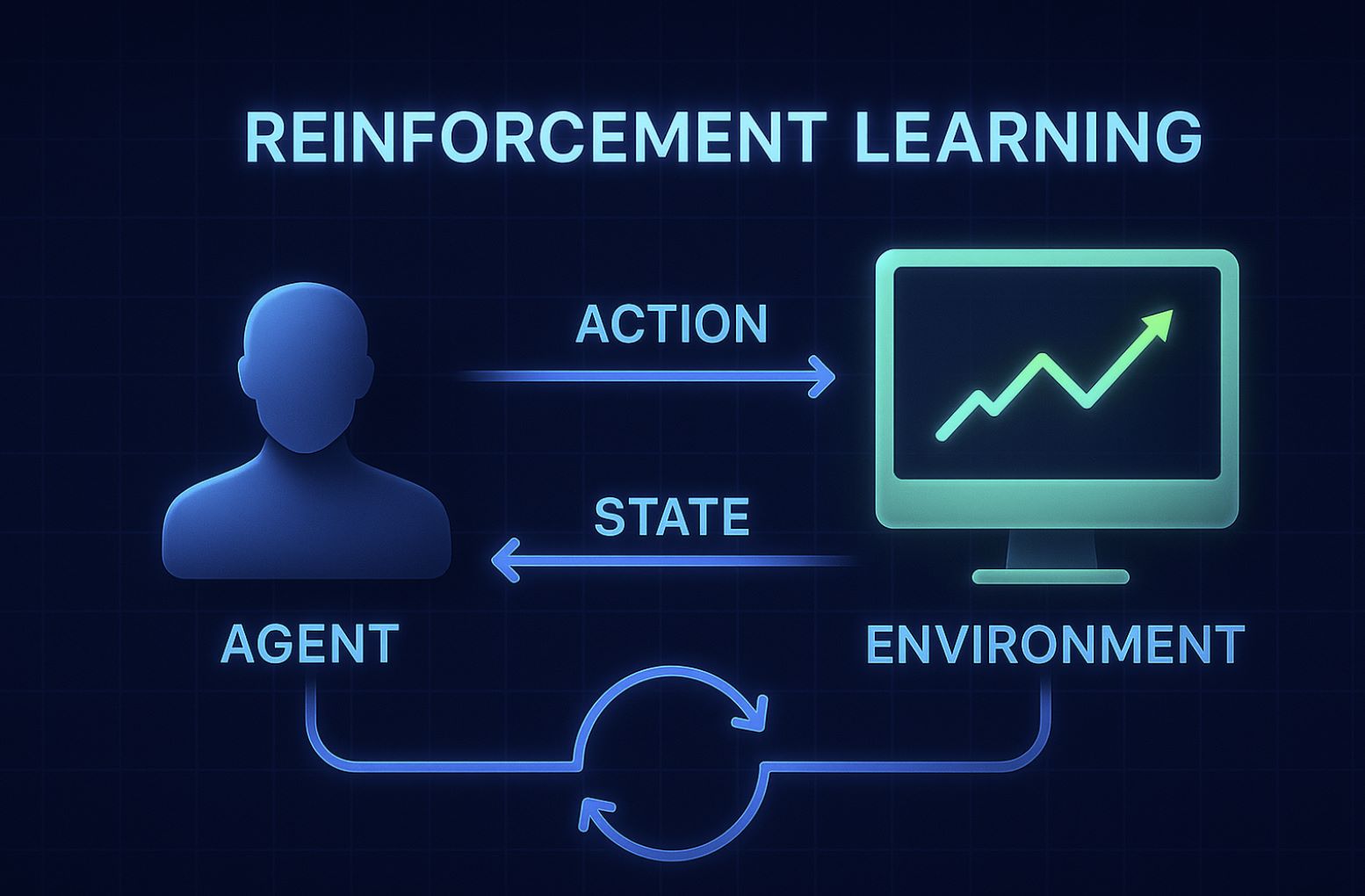

- Fine-tuning: After initial training, the model is customized for specific tasks by fine-tuning. This may involve additional training on labeled examples or Reinforcement Learning from Human Feedback (RLHF), where humans rate the model’s outputs and the model adjusts to improve quality. For example, a chatbot model can be fine-tuned using a set of customer questions and ideal answers to make its responses more accurate and relevant.

- Generation: Once trained and tuned, the model generates new content from a prompt. It does so by sampling from the patterns it has learned, e.g. predicting one word at a time for text, or refining pixel patterns for images. In other words, “the model generates new content by identifying patterns in existing data”. Given a user’s prompt, the AI builds the output step by step, predicting a sequence of tokens for text or progressively refining an image.

- Retrieval-Augmented Generation (RAG): Many systems also use retrieval to improve accuracy. Here the model pulls in external information (like documents or a database) at generation time to ground its answers in up-to-date facts, supplementing what it learned during training.
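
The retrieval step in the last bullet can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions, not how any particular product implements RAG: documents are ranked by simple word overlap (real systems usually use vector embeddings), the helper names `retrieve` and `build_prompt` are hypothetical, and the final call to a language model is left out entirely.

```python
import re

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, top_k=2):
    """Rank documents by how many query words they share; keep the best top_k."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    scored = [item for item in scored if item[0] > 0]  # drop unrelated documents
    scored.sort(key=lambda item: item[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]

def build_prompt(query, documents, top_k=2):
    """Assemble the grounded prompt that would be sent to the model (hypothetical format)."""
    context = retrieve(query, documents, top_k)
    context_block = "\n".join(f"- {doc}" for doc in context)
    if not context_block:
        context_block = "- (no relevant documents found)"
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}"
    )

# Toy knowledge base, invented for this example.
documents = [
    "The return window for online orders is 30 days.",
    "Support is available by chat from 9am to 5pm.",
    "Gift cards cannot be exchanged for cash.",
]
print(build_prompt("How many days is the return window?", documents, top_k=1))
```

The point of the design is that the facts live in the document store rather than in the model's weights, so they can be updated without retraining.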

These phases are compute-intensive, training most of all: training a foundation model can require thousands of GPUs and weeks of processing. The trained model can then be deployed as a service (e.g. a chatbot or image API) that generates content on demand.
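
To make the training and generation phases concrete, here is a deliberately tiny sketch. It assumes a word-pair (bigram) counting model as a stand-in for a neural network: real foundation models learn by gradient descent over billions of parameters, not by counting, but the idea of "learn which word tends to come next, then sample one word at a time" is the same. The function names and the toy corpus are invented for illustration.

```python
import random
from collections import Counter, defaultdict

def train_bigram(corpus):
    """'Training': count which word follows which in raw, unlabeled text."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            counts[current][nxt] += 1
    return counts

def generate(counts, prompt, max_words=8, seed=0):
    """'Generation': extend the prompt one word at a time, sampling each
    next word in proportion to how often it followed the current word."""
    rng = random.Random(seed)  # seeded so runs are repeatable
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_words):
        followers = counts.get(words[-1])
        if not followers:  # no learned continuation: stop early
            break
        candidates = list(followers)
        weights = [followers[w] for w in candidates]
        words.append(rng.choices(candidates, weights=weights, k=1)[0])
    return " ".join(words)

# Toy training data, invented for this example.
corpus = [
    "the model learns patterns in text",
    "the model predicts the next word",
    "the next word follows the prompt",
]
counts = train_bigram(corpus)
print(generate(counts, "the model"))
```

Changing `seed` gives a different continuation from the same prompt, which mirrors why a generative model can return different answers to the same question.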

Key Model Types and Architectures

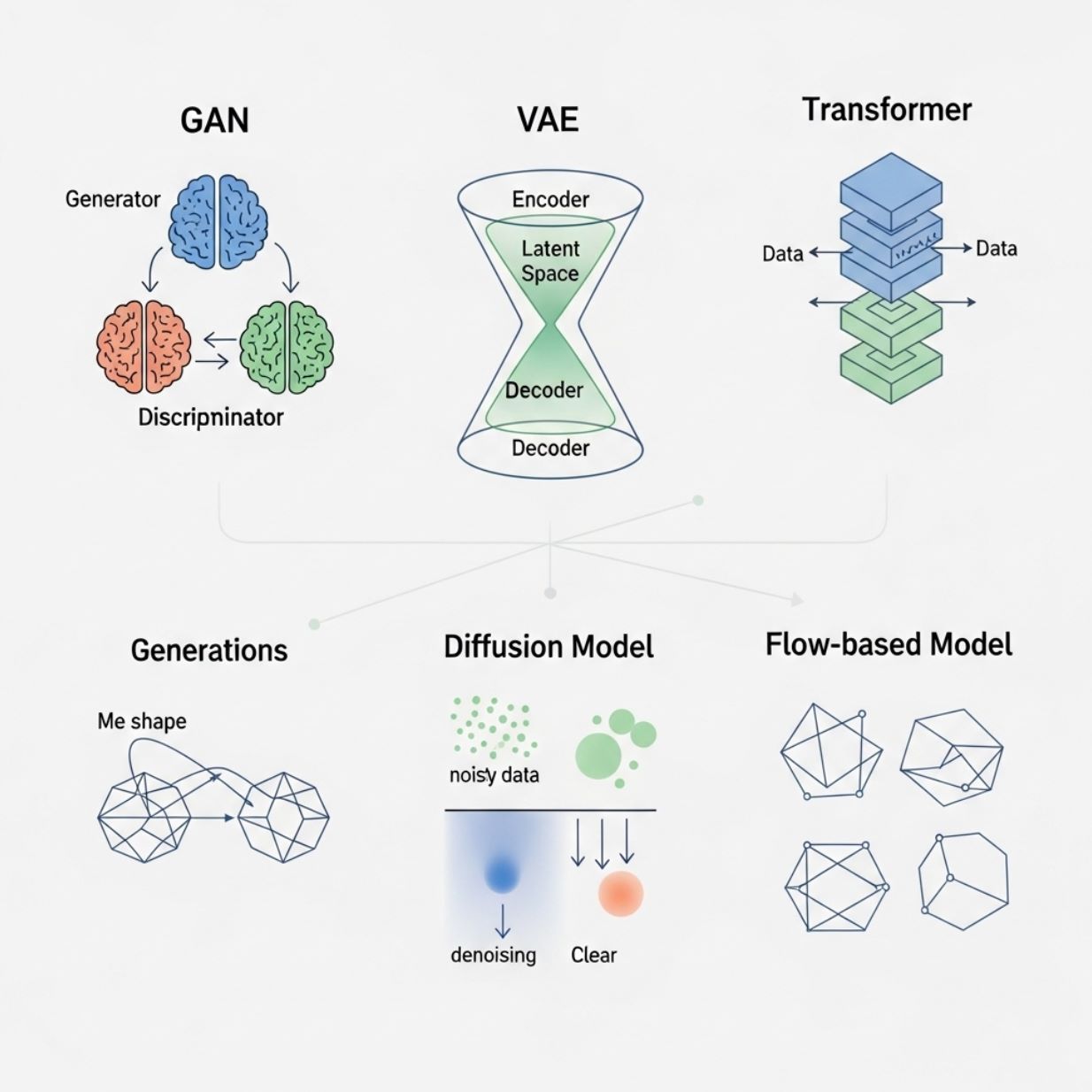

Generative AI uses several modern neural architectures, each suited to different media:

- Large Language Models (LLMs) / Transformers: These are at the core of today’s text-based generative AI (e.g. OpenAI’s GPT-4, Google’s Gemini, formerly Bard). They use transformer networks with attention mechanisms to produce coherent, context-aware text (or even code). LLMs are trained on billions of words and can complete sentences, answer questions, or write essays with human-like fluency.

- Diffusion Models: Popular for image (and some audio) generation (e.g. DALL·E, Stable Diffusion). These models start with random noise and iteratively “denoise” it into a coherent image. The network learns to reverse a corruption process and thus can generate highly realistic visuals from text prompts. Diffusion models have largely replaced older methods for AI art because of their fine-grained control over image details.

- Generative Adversarial Networks (GANs): An earlier image-generation technique (circa 2014) with two neural networks in competition: a generator creates images and a discriminator judges them. Through this adversarial process, GANs produce extremely realistic images and are used for tasks like style transfer or data augmentation.

- Variational Autoencoders (VAEs): Another older deep-learning model that encodes data into a compressed space and decodes it to generate new variations. VAEs were among the first deep generative models for images and speech (circa 2013) and demonstrated early success, although modern generative AI has largely moved to transformers and diffusion for the highest-quality output.

- (Other): There are also specialized architectures for audio, video, and multimodal content. Many cutting-edge models combine these techniques (e.g. transformers with diffusion) to handle text and images together. IBM notes that today’s multimodal foundation models can generate several kinds of content (text, images, sound) from a single system.
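
The attention mechanism mentioned in the transformer bullet can be sketched as scaled dot-product attention. The version below is a minimal pure-Python illustration, assuming plain lists of numbers as vectors; production transformers add learned projection matrices, multiple heads, and masking, none of which are shown here.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: each query receives a weighted mix of the
    values, where the weights reflect how similar the query is to each key."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs

# One query attending over two positions (toy numbers, invented for this example).
queries = [[10.0, 0.0]]
keys = [[1.0, 0.0], [-1.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0]]
print(attention(queries, keys, values))
```

Because the query points in the same direction as the first key, nearly all of the weight goes to the first value. This is how a model decides which earlier words matter most for the word it is about to produce.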

Together, these architectures power the range of generative tools in use today.
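
The "corruption process" that diffusion models learn to reverse can also be sketched briefly. The code below assumes a linear noise schedule (the `beta_start` and `beta_end` defaults are illustrative choices) and shows only the forward noising step and the algebra that undoes it. In a real diffusion model a neural network has to predict the noise, and an image is produced over many small denoising steps; here the true noise is passed back in, purely to show that the two steps are inverses.

```python
import math
import random

def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Fraction of the original signal remaining after each step of a
    linear noise schedule (cumulative product of 1 - beta_t)."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / max(num_steps - 1, 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, alpha_bar, rng):
    """Forward (corruption) step: blend the clean signal with Gaussian noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [
        math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * n
        for x, n in zip(x0, noise)
    ]
    return xt, noise

def remove_noise(xt, predicted_noise, alpha_bar):
    """Invert the forward step, given an estimate of the noise that was added."""
    return [
        (x - math.sqrt(1.0 - alpha_bar) * n) / math.sqrt(alpha_bar)
        for x, n in zip(xt, predicted_noise)
    ]

rng = random.Random(0)
alpha_bars = make_alpha_bars(1000)
x0 = [0.2, -0.5, 0.9]  # stand-in for pixel values
xt, noise = add_noise(x0, alpha_bars[500], rng)
# Using the true noise here; a trained network would supply an estimate instead.
recovered = remove_noise(xt, noise, alpha_bars[500])
print(xt, recovered)
```

The quality of a real diffusion model therefore depends on how well its network estimates the noise at each step.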

Applications of Generative AI

Generative AI is being applied across many fields. Key use cases include:

- Marketing & Customer Experience: Auto-writing marketing copy (blogs, ads, emails) and producing personalized content on the fly. It also powers advanced chatbots that can converse with customers or even take actions (e.g. assist with orders). For example, marketing teams can generate multiple ad variants instantly and tailor them by demographic or context.

- Software Development: Automating code generation and completion. Tools like GitHub Copilot use LLMs to suggest code snippets, fix bugs, or translate between programming languages. This dramatically speeds up repetitive coding tasks and aids application modernization (e.g. converting old codebases to new platforms).

- Business Automation: Drafting and reviewing documents. Generative AI can quickly write or revise contracts, reports, invoices, and other paperwork, reducing manual effort in HR, legal, finance and more. This helps employees focus on complex problem-solving rather than routine drafting.

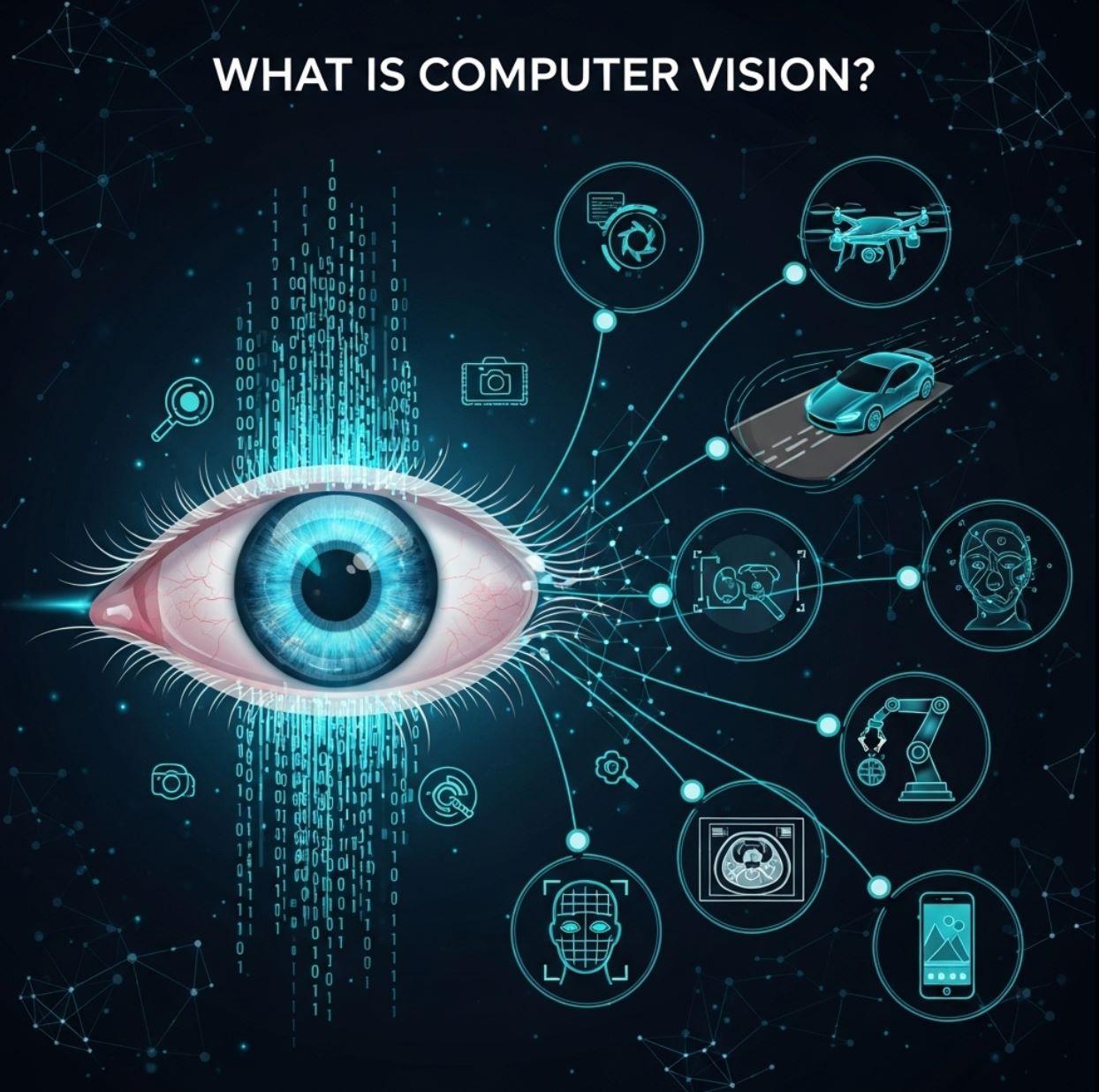

- Research & Healthcare: Suggesting novel solutions to complex problems. In science and engineering, models can propose new drug molecules or design materials. For instance, AI can generate synthetic molecular structures or medical images for training diagnostic systems. IBM notes generative AI is used in healthcare research to create synthetic data (e.g. medical scans) when real data is scarce.

- Creative Arts & Design: Assisting or creating artwork, graphics, and media. Designers use generative AI to produce original art, logos, game assets or special effects. Models like DALL·E, Midjourney or Stable Diffusion can create illustrations or modify photos on demand. They offer new creative tools, for example generating multiple variations of an image to inspire artists.

- Media & Entertainment: Generating audio and video content. AI can compose music, generate natural-sounding speech, or even draft short videos. For example, it can produce voiceover narration in a chosen style or create music tracks based on a text description. While full video generation is still emerging, tools already exist to create animation clips from text prompts, with quality improving rapidly.

These examples barely scratch the surface; the technology is evolving so quickly that new applications (e.g. personalized tutoring, virtual reality content, automated news writing) are emerging all the time.

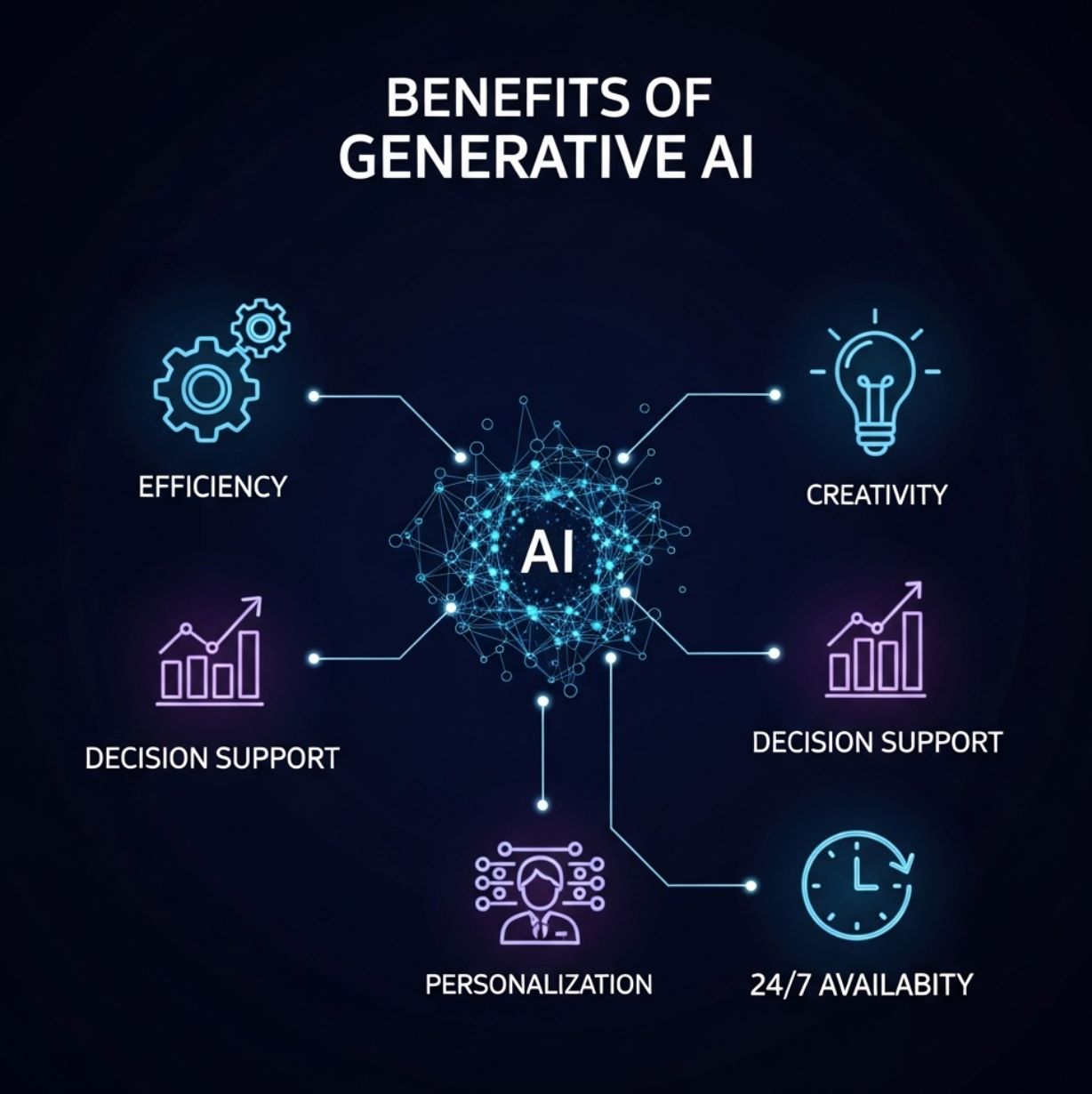

Benefits of Generative AI

Generative AI brings several advantages:

- Efficiency and Automation: It automates time-consuming tasks. For instance, it can draft emails, code or design ideas in seconds, greatly accelerating work and freeing people to focus on higher-level tasks. Organizations report dramatic productivity gains as teams generate content and ideas far faster than before.

- Enhanced Creativity: It can boost creativity by brainstorming and exploring variations. A writer or artist can generate multiple drafts or design options at the click of a button, helping overcome writer’s or artist’s block. This “creative partner” capability means even non-experts can experiment with new concepts.

- Better Decision Support: By quickly analyzing large datasets, generative AI can surface insights or hypotheses that help human decision-making. For example, it can summarize complex reports or suggest statistical patterns across data. IBM notes it enables smarter decisions by sifting through data to generate helpful summaries or predictive ideas.

- Personalization: Models can tailor outputs to individual preferences. For example, they can generate personalized marketing content, recommend products, or adapt interfaces for each user’s context. This real-time personalization improves user engagement.

- 24/7 Availability: AI systems don’t tire. They can provide around-the-clock service (e.g. chatbots that answer questions day and night) without fatigue. This ensures consistent performance and constant access to information or creative assistance.

In sum, generative AI can save time, spark innovation, and handle large creative or analytical workloads quickly.

Challenges and Risks of Generative AI

Despite its power, generative AI has significant limitations and dangers:

- Inaccurate or Fabricated Outputs (“Hallucinations”): Models can produce plausible-sounding but false or nonsensical answers. For example, a legal research AI might confidently cite cases or quotations that do not exist. These “hallucinations” arise because the model doesn’t truly understand facts; it only predicts likely continuations. Users must fact-check AI outputs carefully.

- Bias and Fairness: Since AI learns from historical data, it can inherit societal biases in that data. This may lead to unfair or offensive results (e.g. biased job recommendations or stereotyped image captions). Preventing bias requires careful curation of training data and ongoing evaluation.

- Privacy and IP Concerns: If users feed sensitive or copyrighted material into a model, it might inadvertently reveal private details in its outputs or infringe on intellectual property. Models can also be probed to leak parts of their training data. Developers and users must safeguard inputs and monitor outputs for such risks.

- Deepfakes and Misinformation: Generative AI can create highly realistic fake images, audio or video (deepfakes). These can be used maliciously to impersonate individuals, spread false information, or scam victims. Detecting and preventing deepfakes is a growing concern for security and media integrity.

- Lack of Explainability: Generative models are often “black boxes”. It is often difficult or impossible to explain why they produced a given output or to audit their decision process. This opacity makes it hard to guarantee reliability or trace errors. Researchers are working on explainable AI techniques, but this remains an open challenge.

Other issues include the massive computational resources required (raising energy costs and carbon footprint) and legal/ethical questions about content ownership. All told, while generative AI is powerful, it requires careful human oversight and governance to mitigate its risks.

The Future of Generative AI

Generative AI is advancing at a breakneck pace. Adoption is growing rapidly: surveys find about one-third of organizations already use generative AI in some way, and analysts predict that roughly 80% of companies will have deployed it by 2026. Experts expect this technology to add trillions of dollars to the global economy and transform industries.

For example, Oracle reports that after ChatGPT’s debut, generative AI “became a global phenomenon” and is “expected to add trillions to the economy” by enabling massive productivity gains.

Looking ahead, we’ll see more specialized and powerful models (for science, law, engineering, etc.), better techniques to keep outputs accurate (e.g. advanced RAG and better training data), and integration of generative AI into everyday tools and services.

Emerging concepts like AI agents – systems that use generative AI to autonomously perform multi-step tasks – represent a next step (for example, an agent that can plan a trip using AI-generated recommendations and then book hotels and flights). At the same time, governments and organizations are beginning to develop policies and standards around ethics, safety, and copyright for generative AI.

In summary, generative AI refers to AI systems that create new, original content by learning from data. Powered by deep neural networks and large foundation models, it can write text, generate images, compose audio and more, enabling transformative applications.

While it offers huge benefits in creativity and efficiency, it also brings challenges like errors and bias that users must address. As the technology matures, it will increasingly become an integral tool across industries, but responsible use will be essential to harness its potential safely.