AI Development Trends in the Next 5 Years

Artificial Intelligence (AI) is becoming a key driver of global digital transformation. Over the next five years, AI will continue to evolve with major trends such as intelligent automation, generative AI, and applications in healthcare, education, finance, and data management. These advancements not only help businesses optimize performance and enhance customer experience but also raise challenges related to ethics, security, and employment. Understanding future AI trends will enable individuals and organizations to seize opportunities and adapt quickly in the new technological era.

Artificial intelligence (AI) has progressed at breakneck speed in recent years – from generative AI tools like ChatGPT becoming household names to self-driving cars leaving the lab and hitting public roads.

As of 2025, AI is permeating almost every sector of the economy, and experts widely view it as a transformative technology of the 21st century.

The next five years will likely see AI's influence deepen even further, bringing both exciting innovations and new challenges.

This article examines the key AI development trends projected to shape our world over the next half-decade, drawing on insights from leading research institutions and industry observers.

- 1. Surging Adoption and Investment in AI

- 2. Advances in AI Models and Generative AI

- 3. Rise of Autonomous AI Agents

- 4. Specialized AI Hardware and Edge Computing

- 5. AI Transforming Industries and Daily Life

- 6. Responsible AI and Regulation

- 7. Global Competition and Collaboration

- 8. AI's Impact on Jobs and Skills

- 9. Conclusion: Shaping the AI Future

Surging Adoption and Investment in AI

AI adoption is at an all-time high. Businesses across the globe are embracing AI to boost productivity and gain competitive advantages. Nearly four out of five organizations worldwide are now using or exploring AI in some form – a historic peak in engagement.

This surge in adoption is driven by confidence in AI's tangible business value: 78% of organizations reported using AI in 2024 (up from 55% in 2023) as companies integrate AI into products, services, and core strategies.

Analysts project this momentum will continue, with the global AI market growing from about $390 billion in 2025 to over $1.8 trillion by 2030 – an astonishing ~35% annual growth rate. Such growth, unprecedented even compared to past tech booms, reflects how integral AI is becoming to modern enterprise.
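
The growth rate implied by those two market-size figures can be checked with the standard compound annual growth rate formula. This is a minimal sketch, assuming a five-year span (2025 to 2030) and the rounded figures quoted above; the function name `cagr` is only illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: the constant yearly rate that turns
    start_value into end_value after `years` years."""
    return (end_value / start_value) ** (1 / years) - 1

# Figures quoted above, in billions of US dollars (2025 -> 2030).
implied_rate = cagr(390, 1800, years=5)
print(f"Implied annual growth: {implied_rate:.1%}")
```

The result lands a little under 36% per year, which is consistent with the ~35% figure given the rounding in the inputs.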

We're on the precipice of an entirely new technology foundation, where the best of AI is available to any business.

— Industry Leader, Technology Sector

Productivity Gains

Early adopters report significant returns from AI implementation.

- 15–30% improvements in productivity

- Enhanced customer satisfaction

- Double-digit revenue boosts

Enterprise Integration

AI moving from pilot projects to full-scale deployment.

- 60% of SaaS products have AI features

- AI "copilots" across departments

- Cloud service demand soaring

Strategic Imperative

AI strategy is now mission-critical for competitive advantage.

- Systematic workflow infusion

- Employee upskilling programs

- Process reengineering

Productivity gains and ROI are key drivers. Early adopters are already seeing significant returns from AI. Studies find top firms using AI report 15–30% improvements in metrics like productivity and customer satisfaction in AI-enabled workflows.

For example, small and mid-sized businesses that implemented generative AI have seen double-digit revenue boosts in some cases. Much of AI's value comes from cumulative incremental gains – automating countless small tasks and optimizing processes – which can transform a company's efficiency when scaled across the organization.

As a result, having a clear AI strategy is now mission-critical. Companies that successfully embed AI into their operations and decision-making stand to leap ahead of competitors, while those that lag in adoption risk falling irreparably behind. Indeed, industry analysts predict a widening gap between AI leaders and laggards in the next few years, potentially reshaping entire market landscapes.

Enterprise AI integration is accelerating. In 2025 and beyond, we will see businesses of all sizes moving from pilot projects to full-scale AI deployment. Cloud computing giants (the "hyperscalers") report that enterprise demand for AI-powered cloud services is soaring, and they are investing heavily in AI infrastructure to capture this opportunity.

These providers are partnering with chipmakers, data platforms, and software firms to offer integrated AI solutions that meet enterprises' needs for performance, profitability, and security. Notably, over 60% of software-as-a-service products now have AI features built in, and companies are rolling out AI "copilots" for functions ranging from marketing to HR.

The mandate for executives is clear: treat AI as a core part of business, not a tech experiment. In practice, this means systematically infusing AI into workflows, upskilling employees to work alongside AI, and reengineering processes to fully leverage intelligent automation. Organizations that take these steps are expected to see outsized benefits in the coming years.

Advances in AI Models and Generative AI

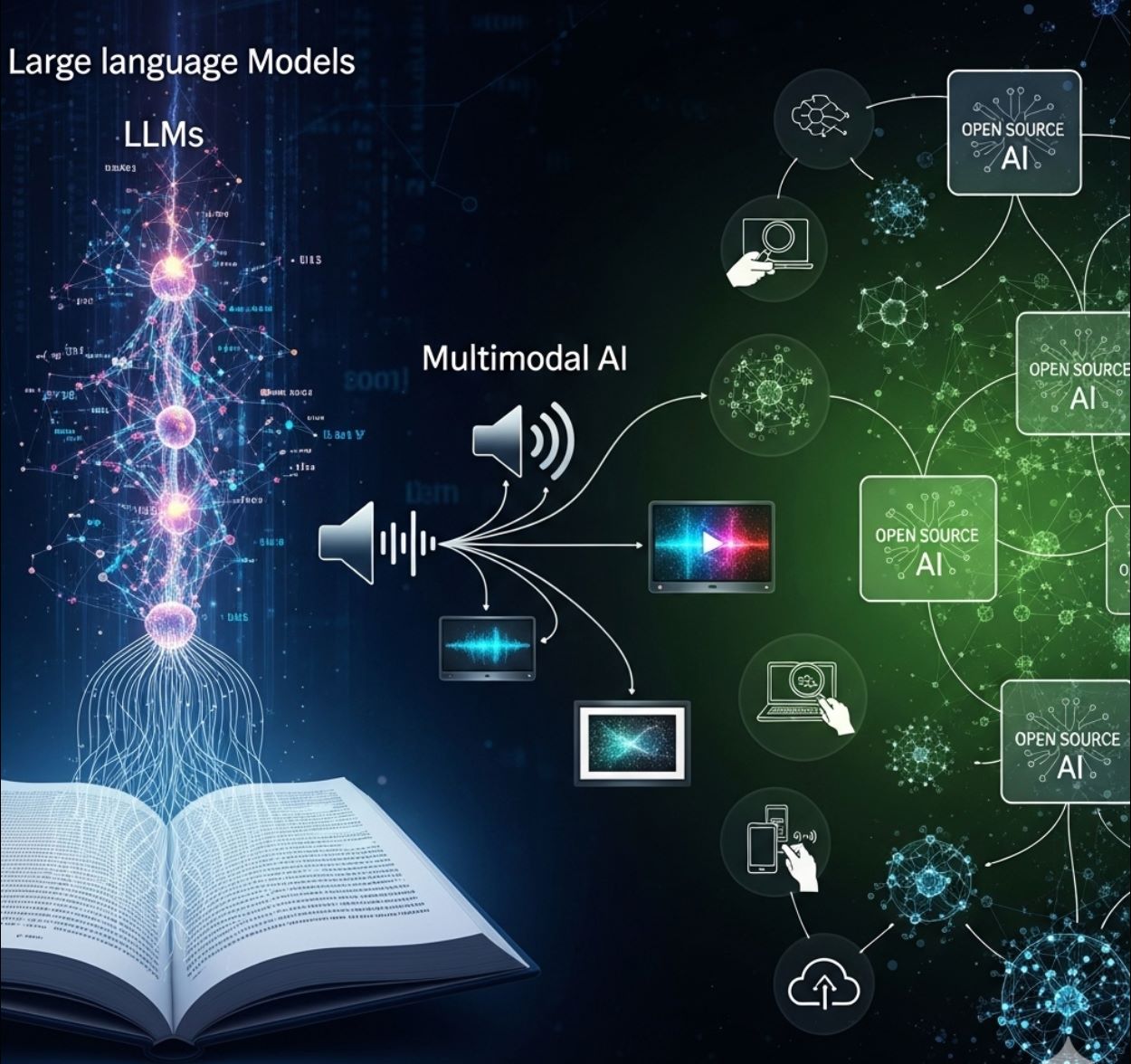

Foundation models and generative AI are evolving rapidly. Few technologies have grown as explosively as generative AI. Since large language model (LLM) chatbots like ChatGPT and image generators like DALL·E 2 debuted in 2022, generative AI usage has skyrocketed.

By early 2023, ChatGPT surpassed 100 million users, and today over 4 billion prompts are entered into major LLM platforms per day. The next five years will bring even more capable AI models.

Tech companies are racing to develop frontier AI models that push the boundaries of natural language processing, code generation, visual creativity, and beyond. Crucially, they are also striving to improve AI's reasoning abilities – enabling models to logically solve problems, plan, and "think" through complex tasks more like a human.

This focus on AI reasoning is one of the biggest drivers of R&D at present. In the enterprise realm, the holy grail is having AI that can understand business data and context deeply enough to assist with decision-making, not just content generation. Companies developing advanced LLMs believe the most promising opportunity now is applying AI's reasoning power to proprietary enterprise data – enabling use cases from intelligent recommendations to strategic planning support.

Multi-modal and High-Performance AI

Another trend is the rise of multimodal AI systems that can process and generate different types of data (text, images, audio, video) in an integrated way. Recent breakthroughs have seen AI models generate realistic videos from text prompts and excel at tasks blending language and vision.

- AI models analyzing images and answering questions in natural language

- Complex textual prompts producing short videos

- Advanced robotics perception capabilities

- AI-generated video content creation

Benchmark tests introduced in 2023 to push these limits (like MMMU and GPQA) have already seen performance jump by tens of percentage points within a year, indicating how quickly AI is learning to tackle complex, multimodal challenges.

Computing Cost Reduction

A noteworthy trend in AI development is the drive toward smaller, more efficient models and wider accessibility. Between late 2022 and late 2024, the computing cost of running an AI system at GPT-3.5 level dropped by over 280×.

Advances in model optimization and new architectures mean that even relatively small models can reach strong performance on many tasks, making AI more accessible to organizations of all sizes.

Open Source Revolution

Open-source AI is on the rise: open-weight models from the research community are closing the quality gap with big proprietary models, reducing performance differences on benchmarks from about 8% to under 2% in just one year.

Performance Gap

- ~8% difference vs proprietary models

- Limited accessibility

Near Parity

- Under 2% performance difference

- Widespread accessibility

Between 2025 and 2030, we are likely to see a flourishing ecosystem of open AI models and tools that developers worldwide can use, democratizing AI development beyond the tech giants.

For instance, new multimodal models can analyze an image and answer questions about it using natural language, or take a complex textual prompt and produce a short video. These capabilities will mature by 2030, opening up new creative and practical applications – from AI-generated video content to advanced robotics perception.

We can expect future AI models to be more general-purpose, seamlessly handling multiple input types and tasks. This convergence of modalities, coupled with the ongoing scale-up of model architectures, points toward more powerful "foundation models" by the end of the decade – albeit accompanied by higher computational demands.

Rise of Autonomous AI Agents

One of the most intriguing emerging trends is the advent of autonomous AI agents – AI systems endowed not only with intelligence but with the ability to act on their own to accomplish goals. Sometimes dubbed "agentic AI," this concept combines advanced AI models (like LLMs) with decision-making logic and tool use, allowing AI to execute multistep tasks with minimal human intervention.
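
To make the idea concrete, the loop below sketches how an agent might alternate between deciding and acting. It is a toy illustration under loud assumptions: `choose_action` is a hypothetical, hard-coded stand-in for the decision step that an LLM would perform in practice, and both tools are invented for this example. No real agent framework or vendor API is implied.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical tools; a real agent would wrap APIs, databases, and so on.
def look_up_order(order_id: str) -> str:
    return f"Order {order_id} shipped on Monday"

def draft_reply(fact: str) -> str:
    return f"Hello! Here is an update: {fact}."

TOOLS: Dict[str, Callable[[str], str]] = {
    "look_up_order": look_up_order,
    "draft_reply": draft_reply,
}

def choose_action(goal: str, history: List[str]) -> Optional[Tuple[str, str]]:
    """Stand-in for the model's decision logic (assumed, not a real LLM call).
    Returns (tool_name, argument), or None once the goal is considered met."""
    if not history:
        return ("look_up_order", goal)
    if len(history) == 1:
        return ("draft_reply", history[0])
    return None

def run_agent(goal: str, max_steps: int = 5) -> List[str]:
    """Decide, act, observe; repeat until done or the step cap is reached."""
    history: List[str] = []
    for _ in range(max_steps):
        action = choose_action(goal, history)
        if action is None:
            break
        tool_name, argument = action
        history.append(TOOLS[tool_name](argument))
    return history

steps = run_agent("A123")
print(steps[-1])
```

The `max_steps` cap is one simple way to keep an autonomous loop bounded; production systems would typically add logging and human review on top.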

Over the next five years, we can expect AI agents to move from experimental demos to practical workplace tools. In fact, enterprise leaders predict AI agents could effectively double the size of their workforce by taking on a host of routine and knowledge-based tasks.

Customer Service

AI agents autonomously handling routine customer inquiries with natural conversation.

- 24/7 availability

- Instant response times

- Consistent service quality

Content & Code Generation

Generating first-draft marketing copy, software code, and prototype products from specifications.

- Marketing content creation

- Software development assistance

- Design to prototype conversion

For example, AI agents can already autonomously handle routine customer service inquiries, generate first-draft marketing copy or software code, and turn design specs into prototype products. As this technology matures, companies will deploy AI agents as "digital workers" across departments – from virtual sales associates that engage customers in natural conversation, to AI project managers that coordinate simple workflows.

AI agents are set to revolutionize the workforce, blending human creativity with machine efficiency to unlock unprecedented levels of productivity.

— Workforce Expert, Industry Research

Human-Only Workforce

- Manual task execution

- Limited availability

- Repetitive work burden

- Capacity constraints

Human-AI Collaboration

- AI handles routine tasks

- 24/7 digital workforce

- Humans focus on strategy

- Scalable operations

Crucially, these agents are not meant to replace humans, but to augment them. In practice, human employees will work in tandem with AI agents: people will supervise agents, provide high-level guidance, and focus on complex or creative tasks while delegating repetitive work to their digital counterparts.

Early adopters report that such human-AI collaboration can dramatically speed up processes (e.g. resolving customer requests or coding new features faster) while freeing up humans for strategic work.

Rethink Workflows

Organizations need to redesign processes to integrate AI agents effectively, identifying tasks suitable for automation.

Train Staff

Employees require training to leverage AI agents and develop new management approaches for human-AI collaboration.

Establish Governance

Create oversight roles and governance frameworks to ensure AI actions remain aligned with business goals and ethical standards.

To capitalize on this trend, organizations will need to start rethinking their workflows and roles. New management approaches are required to integrate AI agents effectively – including training staff to leverage agents, creating oversight roles to monitor agent output, and establishing governance so that autonomous AI actions remain aligned with business goals and ethical standards.

It's a significant change management challenge: a recent industry survey found many companies are only beginning to consider how to orchestrate a blended human–AI workforce. Nonetheless, those that succeed may unlock unprecedented levels of productivity and innovation.

By 2030, it wouldn't be surprising if enterprises have entire "AI agent teams" or Centers for AI Agents that handle substantial operations, fundamentally redefining how work gets done.

Specialized AI Hardware and Edge Computing

The rapid advancement of AI capabilities has gone hand-in-hand with exploding computational needs, spurring major innovation in hardware. In the next few years, expect to see a new generation of AI-specific chips and distributed computing strategies to support AI's growth.

AI's hunger for processing power is already extreme – training cutting-edge models and enabling them to reason through complex tasks requires enormous compute cycles. To meet this demand, semiconductor companies and big tech firms are designing custom silicon optimized for AI workloads.

Unlike general-purpose CPUs or even GPUs, these AI accelerators (often ASICs – application-specific integrated circuits) are tailored to efficiently run neural network computations. Tech executives report that many customers are now considering specialized AI chips for their data centers to gain higher performance per watt.

The advantage of such chips is clear: an ASIC built for a particular AI algorithm can vastly outperform a general GPU on that task, which is especially useful for edge AI scenarios (running AI on smartphones, sensors, vehicles, and other devices with limited power). Industry insiders predict demand for these AI accelerators will grow quickly as companies deploy more AI at the edge in coming years.

At the same time, cloud providers are scaling up their AI computing infrastructure. The major cloud platforms (Amazon, Microsoft, Google, etc.) are investing billions in data center capacity, including developing their own AI chips and systems, to serve the growing need for AI model training and inference on demand.

They view AI workloads as a huge revenue opportunity, as enterprises increasingly migrate their data and machine learning tasks to the cloud. This centralization helps businesses access powerful AI without buying specialized hardware themselves.

However, it's worth noting that supply constraints have emerged – for example, the world's appetite for high-end GPUs has led to shortages and delays in some cases. Geopolitical factors like export restrictions on advanced chips also create uncertainty. These challenges will likely drive even more innovation, from new chip fabs being built to novel hardware architectures (including neuromorphic and quantum computing in the longer-term horizon).

Cloud AI Supercomputing

Massive AI computing clusters optimized for model training and inference.

- Billions in infrastructure investment

- Custom AI chip development

- On-demand AI processing

Edge AI Devices

Efficient AI chips bringing intelligence to everyday devices.

- Smart appliances integration

- Industrial sensor networks

- Real-time processing

On a positive note, the efficiency of AI hardware is steadily improving. Each year, chips become faster and more energy-efficient: recent analyses show AI hardware costs declining ~30% annually while energy efficiency (compute per watt) improves ~40% per year.

This means that even as AI models grow more complex, the cost per operation is coming down. By 2030, running sophisticated AI algorithms may cost only a fraction of what it does today.
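
A quick compounding calculation shows what those annual rates would add up to. This is a rough sketch, assuming the ~30% and ~40% yearly rates quoted above stay constant over a five-year horizon, which is an assumption rather than a forecast; the helper name `project` is illustrative.

```python
def project(value: float, annual_change: float, years: int) -> float:
    """Apply a constant yearly percentage change for `years` years."""
    return value * (1 + annual_change) ** years

years = 5  # assumed horizon: 2025 -> 2030

# Cost falls ~30% per year, so each year keeps 70% of the previous cost.
relative_cost = project(1.0, -0.30, years)

# Compute per watt rises ~40% per year.
relative_efficiency = project(1.0, 0.40, years)

print(f"Cost after {years} years: {relative_cost:.0%} of today's")
print(f"Efficiency after {years} years: {relative_efficiency:.1f}x today's")
```

Under these assumptions, hardware cost falls to roughly 17% of today's level while compute per watt rises about 5.4 times.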

The combination of cheaper computation and purpose-built AI hardware will enable AI to be embedded literally everywhere – from smart appliances to industrial sensors – because the processing can be done either on tiny edge devices or streamed from highly-optimized cloud servers.

In summary, the next five years will solidify the trend of AI-specific hardware at both extremes: massive AI supercomputing clusters in the cloud, and efficient AI chips bringing intelligence to the edge. Together, these will form the digital backbone powering AI's expansion.

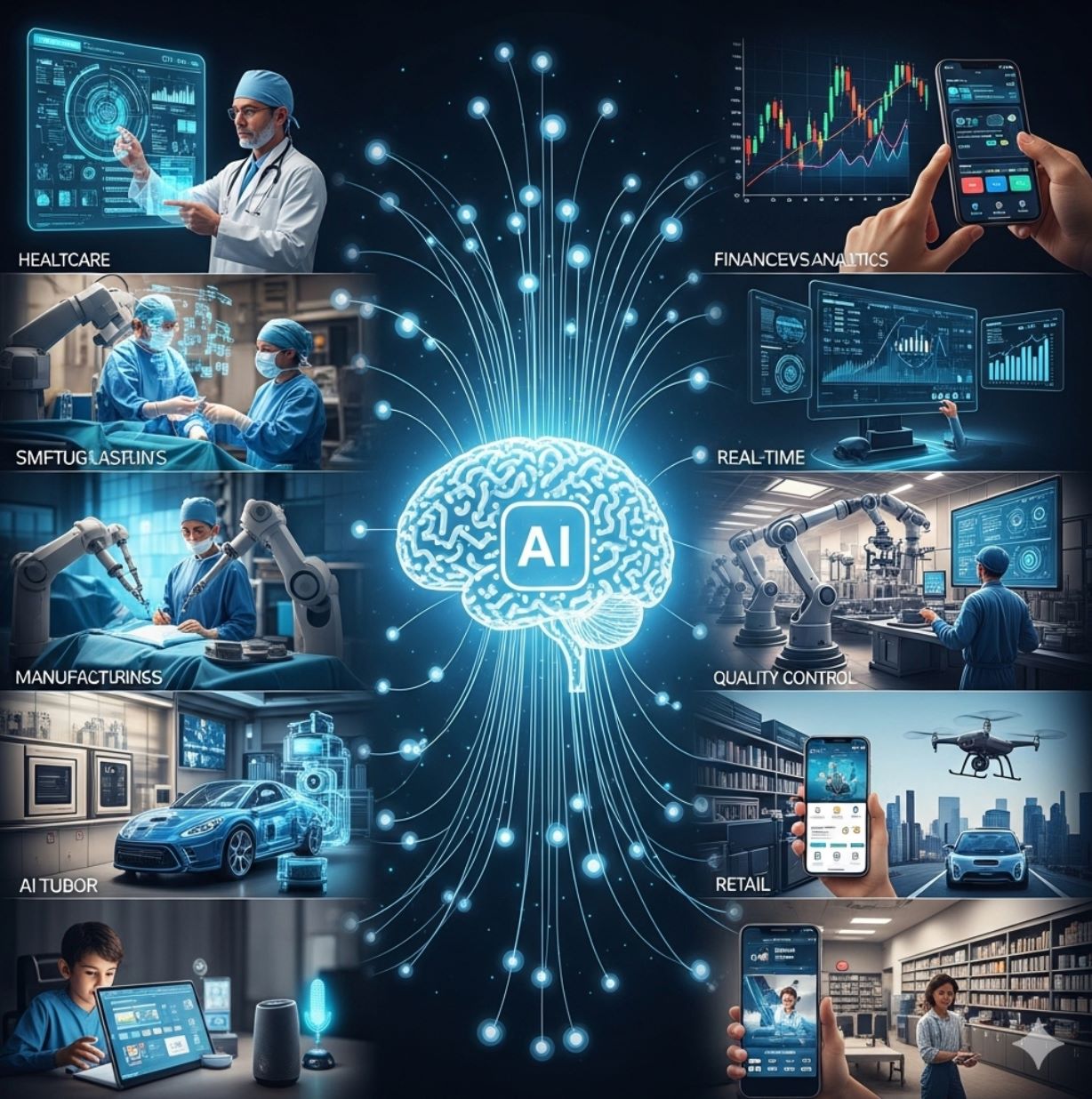

AI Transforming Industries and Daily Life

AI is not confined to tech labs – it is increasingly embedded in everyday life and across every industry. The coming years will witness deeper integration of AI into sectors like healthcare, finance, manufacturing, retail, transportation, and more, fundamentally changing how services are delivered.

Healthcare Revolution

AI is helping doctors diagnose diseases earlier and manage patient care more effectively. The U.S. FDA approved 223 AI-powered medical devices in 2023, a massive jump from just 6 such approvals in 2015.

- AI analyzing medical images (MRI, X-rays) for tumor detection

- Algorithms monitoring vital signs and predicting health crises

- Generative AI summarizing medical notes and drafting patient reports

- AI translation tools converting medical jargon to plain language

- Drug development timelines cut by over 50% with AI assistance

Financial Services Innovation

The finance industry was an early adopter of AI and will continue to push the frontier. Banks and insurers use AI for fraud detection, real-time risk assessment, and algorithmic trading.

Going forward, we can expect AI "financial advisors" and autonomous wealth management agents that personalize investment strategies for clients. AI can also draft analyst reports and handle routine customer service via chatbots.

Manufacturing & Logistics

In factories and supply chains, AI is driving efficiency through predictive maintenance, computer vision quality control, and AI-driven robotics.

- Predictive Maintenance: Sensors plus machine learning predict equipment failures before they happen

- Computer Vision: Assembly line systems automatically spot defects in real time

- AI Robotics: Handle delicate or complex assembly tasks alongside humans

- Digital Twins: Virtual simulations test optimizations before real-world application

- Generative Design: AI suggests engineering improvements humans might miss

Retail & Customer Service

AI is transforming how we shop and interact with businesses through personalized recommendations, dynamic pricing, and intelligent customer support.

Personalization

AI recommendation engines and dynamic pricing algorithms.

- Personalized product suggestions

- Real-time price optimization

- Demand forecasting

Customer Experience

24/7 AI chatbots and virtual assistants enhancing service.

- Instant customer support

- Smart mirrors and AR fitting rooms

- Supply chain optimization

These examples barely scratch the surface. It's notable that even traditionally low-tech fields like agriculture, mining, and construction are now leveraging AI, whether through autonomous farm equipment, AI-driven mineral exploration, or smart energy management.

In fact, every industry is seeing AI usage increase, including sectors previously not seen as AI-heavy. Companies in these domains are finding that AI can optimize resource use, reduce waste, and improve safety (for instance, AI systems monitoring worker fatigue or machinery conditions in real time).

On the consumer front, daily life is becoming intertwined with AI in subtle ways. Many people already wake up to smartphone apps that use AI to curate their news or plan their commute.

Virtual assistants in our phones, cars, and homes are getting smarter and more conversational each year. Self-driving vehicles and delivery drones, while not yet ubiquitous, are likely to become common in the next five years, at least in certain cities or for certain services (robotaxi fleets, automated grocery deliveries, etc.).

Education is also feeling AI's impact: personalized learning software can adapt to students' needs, and AI tutors provide on-demand help in various subjects. Overall, the trajectory is that AI will increasingly operate in the background of everyday activities – making services more convenient and personalized – to the point that by 2030 we might simply take these AI-driven conveniences for granted as part of normal life.

Responsible AI and Regulation

The breakneck pace of AI development has raised important questions about ethics, safety, and regulation, and these will be central themes in the coming years. Responsible AI – ensuring AI systems are fair, transparent, and safe – is no longer just a buzzword but a business imperative.

In 2024, AI-related incidents (like biased outcomes or safety failures) rose sharply, yet few major AI developers have standardized evaluation protocols for ethics and safety. This gap between recognizing AI risks and actually mitigating them is something many organizations are now racing to close.

Industry surveys indicate that in 2025, company leaders will no longer tolerate ad-hoc or "in pockets" AI governance; they are moving toward systematic, transparent oversight of AI across the enterprise. The reasoning is simple: as AI becomes intrinsic to operations and customer experiences, any failure – whether a flawed recommendation, a privacy breach, or just an unreliable model output – can cause real harm to the business (from reputational damage to regulatory penalties).

AI Audits

Regular validation of AI models with internal teams or external experts to ensure proper functioning within legal and ethical bounds.

Risk Management

Systematic AI risk management practices becoming the norm across enterprises for trustworthy operations.

Strategic Alignment

Aligning AI performance with business value while maintaining ethical standards and regulatory compliance.

Successful AI governance will be measured not only by avoiding risks but by delivering on strategic objectives and ROI – aligning AI performance with business value in a trustworthy way.

— AI Assurance Leader, Industry Expert

Therefore, expect to see rigorous AI risk management practices becoming the norm. Companies are beginning to conduct regular AI audits and validations of their models, either with upskilled internal teams or external experts, to ensure the AI is working as intended and within legal/ethical bounds.

Regulators around the world are also stepping up. AI regulation is tightening at both national and international levels. In 2024, U.S. federal agencies introduced 59 AI-related regulatory actions – more than double the number in the previous year.

The European Union's comprehensive AI Act, which entered into force in August 2024 with obligations phasing in over the following years, imposes requirements on AI systems (especially high-risk applications) around transparency, accountability, and human oversight. Other regions are not far behind: organizations like the OECD, United Nations, and African Union all released AI governance frameworks in 2024 to guide nations on principles like transparency, fairness, and safety.

Innovation-Focused

- Faster AI innovation

- Rapid deployment

- Market-driven approach

Safety-Focused

- Slower rollout of certain applications

- Higher public trust

- Comprehensive oversight

This trend of global cooperation on AI ethics and standards is expected to intensify, even as different countries take varied approaches. Notably, differences in regulatory philosophy may influence AI's trajectory in each region. Analysts have pointed out that relatively flexible regimes (such as the U.S.) might allow faster AI innovation and deployment, whereas stricter rules (like the EU's) could slow certain applications but potentially build more public trust.

Another aspect of responsible AI is addressing issues of bias, misinformation, and overall trustworthiness of AI outputs. New tools and benchmarks are being developed to evaluate AI systems on these criteria – for example, HELM (Holistic Evaluation of Language Models) Safety and other tests that gauge how factually correct and safe AI-generated content is.

We're likely to see these kinds of standardized checks become a required part of AI system development. Meanwhile, the public's perception of AI's risks and benefits will influence how hard regulators and companies push on oversight.

Interestingly, optimism about AI varies widely by region: surveys show citizens in countries like China, Indonesia, and much of the developing world are highly optimistic about AI's net benefits, whereas public opinion in Western countries is more cautious or even skeptical.

If optimism grows (as it has slowly increased in Europe and North America recently), there may be more social license to deploy AI solutions – provided there are assurances in place that these systems are fair and secure.

In summary, the next five years will be pivotal for AI governance. We will likely see the first comprehensive AI laws come into effect (e.g. in the EU), more governments investing in AI oversight bodies, and companies weaving Responsible AI principles into their product development lifecycles.

The goal is to strike a balance where innovation isn't stifled – "supple" regulatory approaches can enable continued rapid advances – yet consumers and society are protected from potential downsides. Achieving this balance is no easy task, but it's one of the defining challenges as AI moves from a nascent technology to a mature, ubiquitous one.

Global Competition and Collaboration

AI development in the next half-decade will also be shaped by the intense global competition to lead in AI, coupled with efforts at international collaboration. Currently, the United States and China are the two heavyweight contenders in the AI arena.

The U.S. leads in many metrics – for instance, in 2024, U.S. institutions produced 40 of the world's top AI models, versus 15 from China and only a handful from Europe. However, China is rapidly closing the gap in key areas.

Chinese-developed AI models have caught up significantly in quality, achieving near-parity with U.S. models on major benchmarks in 2024. Moreover, China outpaces every other country in sheer volume of AI research papers and patents, signaling its long-term commitment to AI R&D.

This rivalry is likely to spur faster innovation – a modern space race but in AI – as each nation pours resources into one-upping the other's advances. We've already seen an escalation in AI investment commitments by governments: China announced a colossal $47.5 billion national fund for semiconductor and AI technology, while the U.S., EU, and others are also investing billions in AI research initiatives and talent development.

Europe

Strong focus on trustworthy AI and open-source projects.

- Ethical AI leadership

- Open-source contributions

- Regulatory frameworks

India

Large-scale AI applications and global talent supply.

- Education & healthcare AI

- Together with the U.S., over half of the global AI workforce

- Scalable implementations

Emerging Players

Singapore, UAE, and others carving specialized niches.

- AI governance innovation

- Smart nation initiatives

- Research investments

That said, AI is far from a two-country story. Global collaboration and contributions are increasing. Regions like Europe, India, and the Middle East are producing notable AI innovations and models of their own.

For example, Europe has a strong focus on trustworthy AI and is home to many open-source AI projects. India is leveraging AI for large-scale applications in education and healthcare, and also supplying much of the global AI talent (India and the U.S. together account for over half the global AI workforce in terms of skilled professionals).

There's also a push in smaller countries to carve out niches, such as Singapore's investments in AI governance and smart nation initiatives, or the UAE's efforts in AI research and deployment. International bodies are convening discussions on AI standards so that there's at least some alignment, as illustrated by the OECD and UN frameworks mentioned earlier and by initiatives like the Global Partnership on AI (GPAI), which bring multiple countries together to share best practices.

Rapid Adoption

- Near-ubiquitous AI integration

- Smart cities deployment

- Experimental freedom

Measured Progress

- Heavier regulations

- Slower adoption rates

- Trust-building focus

While geopolitical competition will continue (and likely even intensify in areas like AI for military use or economic advantage), there is a parallel recognition that issues like AI ethics, safety, and addressing global challenges require cooperation. We may see more cross-border research collaborations addressing things like AI for climate change, pandemic response, or humanitarian projects.

One interesting facet of the global AI landscape is how differing attitudes and user bases will shape AI's evolution. As noted, public sentiment is very positive in some developing economies, which could make those markets more permissive ground for AI experimentation in sectors like fintech or education technology.

In contrast, regions with skeptical publics might impose heavier regulations or face slower adoption due to low trust. By 2030, we might witness a kind of bifurcation: some countries achieving near-ubiquitous AI integration (smart cities, AI in daily governance, etc.), while others proceed more cautiously.

However, even the cautious regions acknowledge they cannot ignore AI's potential – for example, the United Kingdom and European countries are investing in AI safety and infrastructure (the UK plans a national AI research cloud, France has public supercomputing initiatives for AI, etc.).

So, the race is not just about building the fastest AI, but building the right AI for each society's needs.

In essence, the next five years will see a complex interplay of competition and collaboration. We will likely witness breakthrough AI achievements emerging from unexpected places around the world, not just Silicon Valley or Beijing.

And as AI becomes a staple of national power (akin to oil or electricity in previous eras), how nations manage both cooperation and rivalry in this domain will significantly influence the trajectory of AI development globally.

AI's Impact on Jobs and Skills

Finally, no discussion of AI's near-term future is complete without examining its impact on work and employment – a topic on the minds of many. Will AI take our jobs, or create new ones? The evidence so far suggests a bit of both, but with a strong leaning toward augmentation over pure automation.

The World Economic Forum projected that by 2025, AI would create about 97 million new jobs globally while displacing around 85 million – a net gain of 12 million jobs.

These new roles range from data scientists and AI engineers to entirely new categories like AI ethicists, prompt engineers, and robot maintenance experts. We are already seeing that prediction play out: over 10% of job postings today are for roles that barely existed a decade ago (for example, Head of AI or Machine Learning Developer).

Importantly, rather than mass unemployment, the early impact of AI in workplaces has been to boost worker productivity and shift skill demands. Industries adopting AI fastest have seen up to 3× higher revenue growth per employee since the AI boom began around 2022.

In those sectors, workers aren't being rendered redundant; instead, they're becoming more productive and more valuable. In fact, wages are rising twice as fast in AI-intensive industries compared to industries with lower AI adoption.

Even workers in roles that are highly automatable are seeing wage increases if they possess AI-related skills, indicating that companies value employees who can work effectively with AI tools. Across the board, there is a growing premium on AI skills – workers who can leverage AI (even at a basic level, like using AI-driven analytics or content generation tools) earn higher salaries.

One analysis found that employees with AI skills command a 56% wage premium on average over those in similar roles without those skills. This premium has more than doubled in just a year, highlighting how quickly "AI literacy" is becoming a must-have competency.

At-Risk Roles

Jobs facing potential displacement or redefinition.

- Administrative tasks

- Data entry positions

- Repetitive processing roles

- Simple customer queries

Emerging Opportunities

New tasks requiring human creativity and AI oversight.

- AI supervision and guidance

- Creative problem-solving

- Strategic decision-making

- Human-AI collaboration

That said, AI is undeniably reshaping the nature of jobs. Many routine or lower-level tasks are being automated – AI can take over data entry, report generation, simple customer queries, and so on. This means some jobs will be eliminated or redefined.

Workers in administrative and repetitive-processing roles are particularly at risk of displacement. However, even as those tasks vanish, new tasks emerge that require human creativity, judgment, and oversight of AI.

The net effect is a shift in the skill set needed for most professions. A LinkedIn analysis predicts that by 2030, around 70% of the skills used in an average job will be different from the skills that were needed in that job a few years ago.

In other words, nearly every job is evolving. To adapt, continuous learning and reskilling are essential for the workforce.

Thankfully, there's a major push toward AI education and upskilling: two-thirds of countries have introduced computer science (often including AI modules) into K-12 curricula, and companies are heavily investing in employee training programs. Globally, 37% of executives say they plan to invest more in training employees on AI tools in the immediate term.

We're also seeing the rise of online courses and certifications in AI – for example, free programs by tech firms and universities to teach AI basics to millions of learners.

Another aspect of AI in the workplace is the emergence of the "human-AI team" as the fundamental unit of productivity. As described earlier, AI agents and automation handle parts of the work, while humans provide supervision and expertise.

Forward-looking companies are redefining roles so that entry-level work (which AI might handle) is less of a focus; instead, they hire people directly into more strategic roles and rely on AI to do the grunt work.

This could flatten traditional career ladders and require new ways to train talent (since junior staff won't learn by doing simple tasks if AI is doing those tasks). It also raises the importance of change management in organizations. Many employees feel anxious or overwhelmed by the pace of change AI brings.

Leaders therefore need to actively manage this transition – communicating the benefits of AI, involving employees in AI adoption, and assuring them that the goal is to enhance human work, not replace it. Companies that successfully cultivate a culture of human-AI collaboration – where using AI is second nature to staff – will likely see the biggest performance gains.

In summary, the labor market over the next five years will be characterized by transformative change rather than catastrophe. AI will automate certain tasks and job functions, but it will also create demand for new expertise and make many workers more productive and valuable.

The challenge (and opportunity) lies in guiding the workforce through this transition. Those individuals and organizations that embrace lifelong learning and adapt roles to leverage AI will thrive in the new AI-powered economy. Those that do not may struggle to stay relevant.

As one report succinctly put it, thanks in part to AI, the nature of jobs is shifting from mastering specific tasks to constantly acquiring new ones. The coming years will test our ability to keep pace with this shift – but if we do, the outcome could be a more innovative, efficient, and even more human-centric world of work.

Conclusion: Shaping the AI Future

The trajectory of AI development in the next five years is poised to bring about profound changes across technology, business, and society. We will likely witness AI systems growing more capable – mastering multiple modalities, exhibiting improved reasoning, and operating with greater autonomy.

At the same time, AI will become deeply woven into the fabric of everyday life: powering decisions in boardrooms and governments, optimizing operations in factories and hospitals, and enhancing experiences from customer service to education.

The opportunities are immense – from boosting economic productivity and scientific discovery to helping address global challenges like climate change (indeed, AI is expected to accelerate the shift to renewable energy and smarter resource use). But realizing AI's full potential will require navigating the accompanying risks and hurdles. Issues of ethics, governance, and inclusivity will demand continued attention so that the benefits of AI are broadly shared and not overshadowed by pitfalls.

One overarching theme is that human choices and leadership will shape the AI future. AI itself is a tool – a remarkably powerful and complex tool, but one that ultimately reflects the objectives we set for it.

The next five years present a critical window for stakeholders to guide AI development responsibly: businesses must implement AI thoughtfully and ethically; policymakers must craft balanced frameworks that foster innovation while protecting the public; educators and communities must prepare people for the changes AI will bring.

International and interdisciplinary collaboration on AI needs to deepen so that we collectively steer this technology toward positive outcomes. If we succeed, 2030 may mark the dawn of a new era where AI significantly augments human potential – helping us work smarter, live healthier, and tackle problems previously out of reach.

In that future, AI would not be viewed with fear or hype, but rather as an accepted, well-governed part of modern life that works for humanity. Achieving this vision is the grand challenge and promise of the next five years in AI development.
