AI and Algorithmic Bias

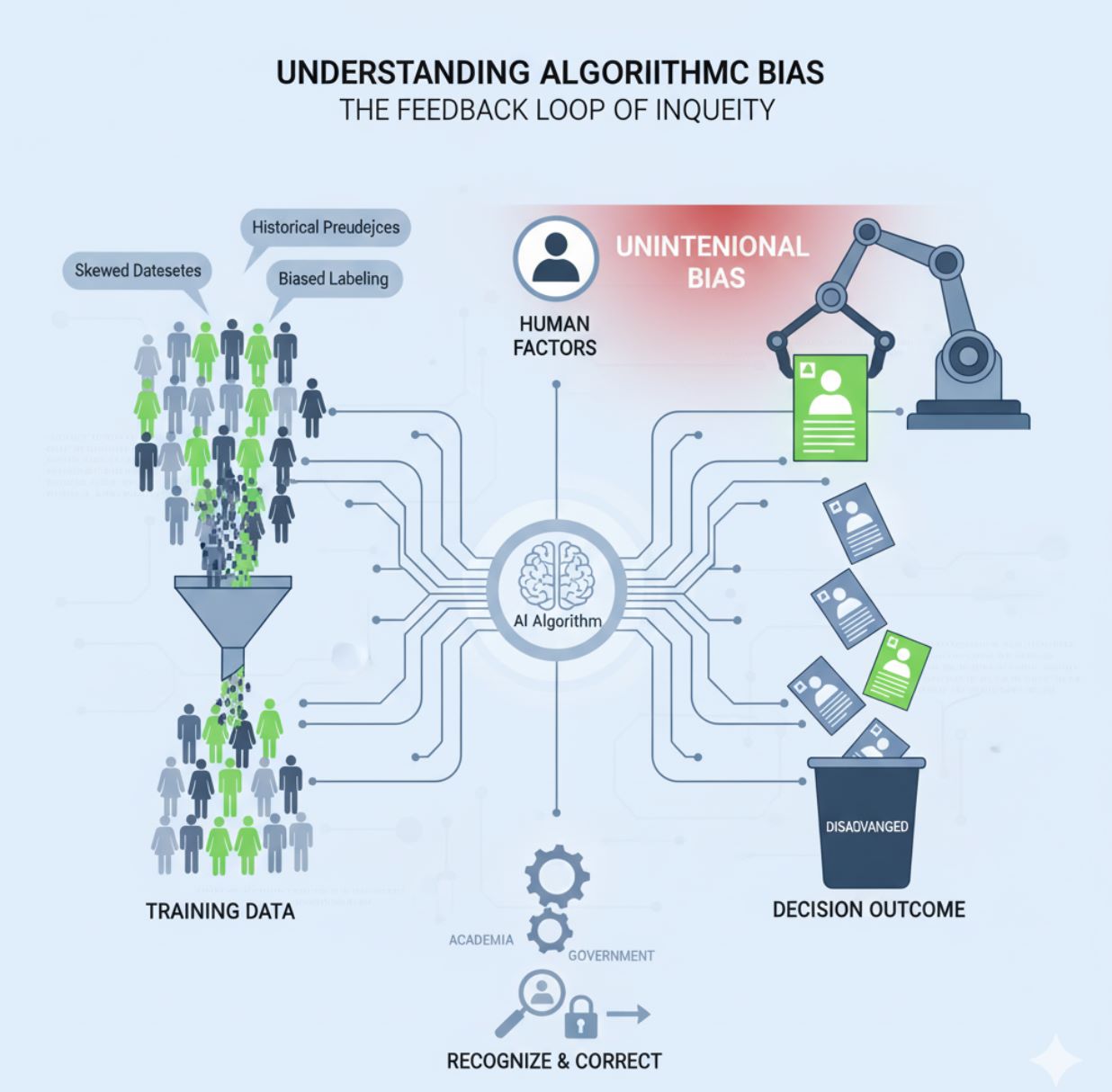

AI algorithms are increasingly used across sectors, from hiring to finance, but they carry risks of bias and discrimination. Automated decisions can reflect or amplify social injustices when training data is biased or unrepresentative. Understanding algorithmic bias helps businesses, developers, and users identify and manage these risks and build fairer, more transparent AI systems.

Artificial Intelligence (AI) is increasingly embedded in our daily lives – from hiring decisions to healthcare and policing – but its use has raised concerns about algorithmic bias. Algorithmic bias refers to systematic and unfair prejudices in AI systems' outputs, often reflecting societal stereotypes and inequalities.

In essence, an AI algorithm can unintentionally reproduce human biases present in its training data or design, leading to discriminatory outcomes.

Below, we explore what causes algorithmic bias, real-world examples of its impact, and how the world is striving to make AI fairer.

Understanding Algorithmic Bias and Its Causes

Algorithmic bias typically arises not because AI "wants" to discriminate, but because of human factors. AI systems learn from data and follow rules created by people – and people have biases (often unconscious). If the training data is skewed or reflects historical prejudices, the AI will likely learn those patterns.

Biased Training Data

Historical prejudices embedded in datasets

- Incomplete datasets

- Unrepresentative samples

- Historical discrimination patterns

Biased Data Labeling

Human prejudices in data annotation

- Subjective categorization

- Cultural assumptions

- Unconscious stereotyping

Optimization Issues

Algorithms optimized for accuracy over fairness

- Overall accuracy focus

- Minority group neglect

- Fairness trade-offs ignored
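A minimal sketch of how a focus on overall accuracy can hide poor performance for a minority group. The records, the group names, and the helper `accuracy_by_group` are hypothetical and invented for illustration; they do not come from any real system.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Return (overall_accuracy, {group: accuracy}) for (group, y_true, y_pred) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    overall = sum(correct.values()) / sum(total.values())
    per_group = {g: correct[g] / total[g] for g in total}
    return overall, per_group

# Hypothetical toy data: 90 majority-group records and 10 minority-group records.
records = (
    [("majority", 1, 1)] * 88 + [("majority", 1, 0)] * 2   # 88 of 90 correct
    + [("minority", 1, 1)] * 5 + [("minority", 1, 0)] * 5  # 5 of 10 correct
)

overall, per_group = accuracy_by_group(records)
print(f"overall: {overall:.2f}")       # overall: 0.93
for group, acc in per_group.items():
    print(f"{group}: {acc:.2f}")       # majority: 0.98, then minority: 0.50
```

In this toy case the model looks strong at 93% overall accuracy, yet it is right only half the time for the smaller group. Reporting metrics per group is one simple way to surface that gap.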

AI algorithms inherit the biases of their creators and data unless deliberate steps are taken to recognize and correct those biases.

— Key Research Finding

It's important to note that algorithmic bias is usually unintentional. Organizations often adopt AI to make decisions more objective, but if they "feed" the system biased information or fail to consider equity in design, the outcome can still be inequitable. AI bias can unfairly allocate opportunities and produce inaccurate results, negatively impacting people's well-being and eroding trust in AI.

Understanding why bias happens is the first step toward solutions – and it's a step that academia, industry, and governments worldwide are now taking seriously.

Real-World Examples of AI Bias

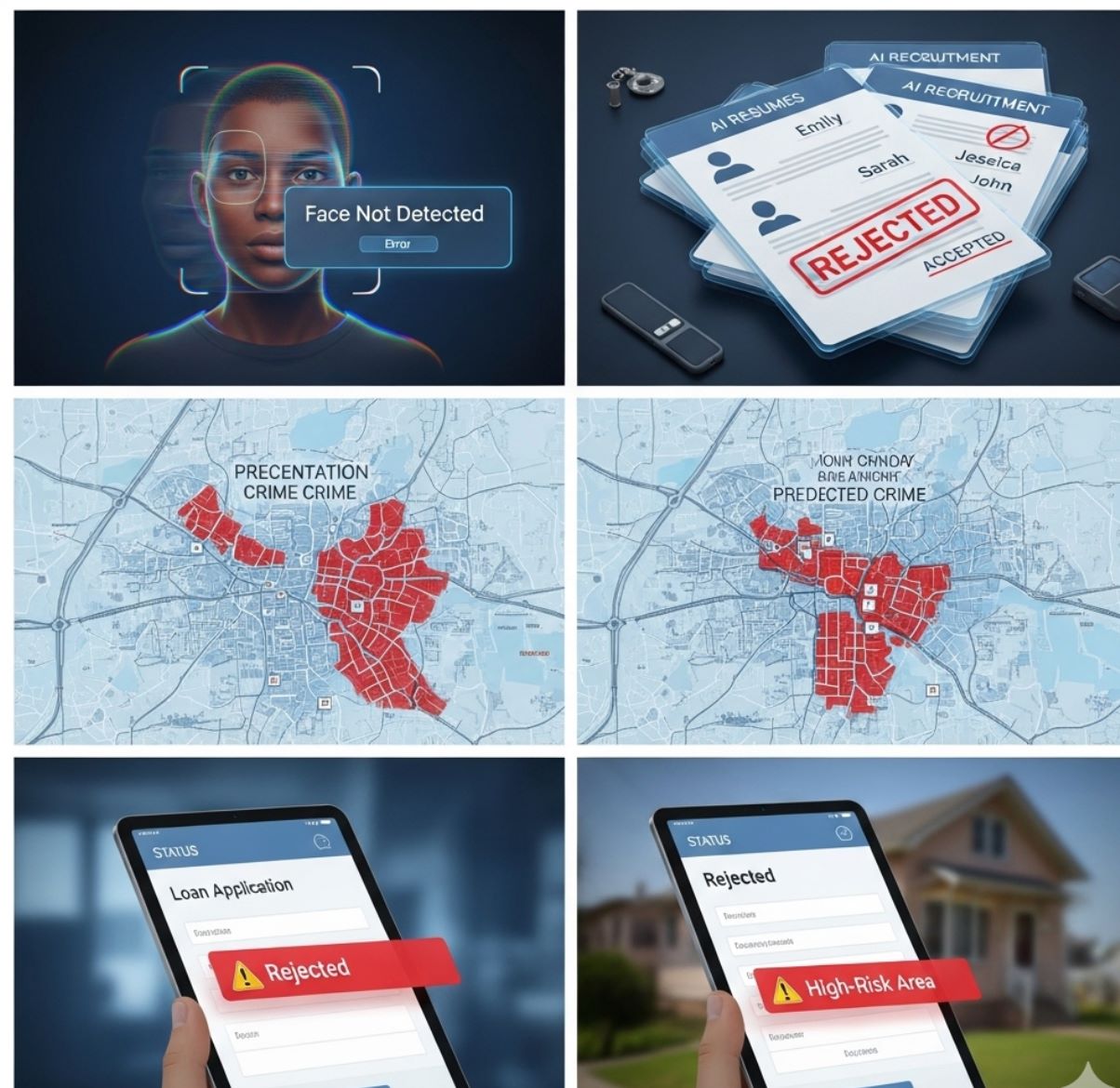

Bias in AI is not just a hypothetical concern; numerous real-world cases have exposed how algorithmic bias can lead to discrimination. Notable instances of AI bias across different sectors include:

Criminal Justice System

Case: U.S. recidivism prediction algorithm

Impact: The algorithm was biased against Black defendants. It frequently misjudged Black defendants as high-risk and white defendants as low-risk, compounding racial disparities in sentencing.

Consequence: Amplified historic biases in policing and courts

Hiring & Recruitment

Case: Amazon's AI recruiting tool

Impact: Scrapped after it was found to discriminate against women. Trained on past resumes that came mostly from men, it downgraded resumes that contained the word "women's" or that listed all-women's colleges.

Consequence: Would have unfairly filtered out qualified women for technical jobs

Facial Recognition Technology Bias

Face recognition technology has shown significant bias in accuracy across demographic lines. A comprehensive 2019 study by the U.S. National Institute of Standards and Technology (NIST) revealed alarming disparities:

- False positive identifications for Asian and African-American faces were 10 to 100 times more likely than for Caucasian faces

- Highest misidentification rates were for Black women in one-to-many searches

- Misidentifications of this kind are dangerous in practice and have already led to innocent people being wrongfully arrested

Generative AI and Content Bias

Even the latest AI systems are not immune. A 2024 UNESCO study revealed that large language models often produce regressive gender and racial stereotypes:

Women: Domestic Focus

- Described in domestic roles four times more often than men by one of the models studied

- Female names linked to words such as "home" and "children"

- Traditional gender stereotypes

Men: Professional Focus

- Male names associated with words such as "executive" and "salary"

- Linked to "career" advancement

- Leadership terminology

AI's risks are compounding on top of existing inequalities, resulting in further harm to already marginalised groups.

— UNESCO Warning

These examples underscore that algorithmic bias is not a distant or rare problem – it's happening across domains today. From job opportunities to justice, healthcare to online information, biased AI systems can replicate and even intensify existing discrimination.

The harm is often borne by historically disadvantaged groups, raising serious ethical and human rights concerns. Given that millions now use generative AI in daily life, even subtle biases in content can amplify inequalities in the real world, reinforcing stereotypes at scale.

Why Does AI Bias Matter?

The stakes for addressing AI bias are high. Left unchecked, biased algorithms can entrench systemic discrimination behind a veneer of tech neutrality. Decisions made (or guided) by AI – who gets hired, who gets a loan or parole, how police target surveillance – carry real consequences for people's lives.

Human Rights Impact

Undermines equality and non-discrimination principles

- Denied opportunities

- Economic disparities

- Threats to personal freedom

Trust Erosion

Damages public confidence in technology

- Reduced AI adoption

- Reputation damage

- Innovation barriers

Diminished Benefits

Limits AI's positive potential

- Inaccurate outcomes

- Reduced effectiveness

- Unequal access to benefits

If AI-guided decisions are unfairly skewed against certain genders, races, or communities, social inequities widen. The result can be denied opportunities, economic disparities, or even threats to the personal freedom and safety of affected groups.

In the bigger picture, algorithmic bias undermines human rights and social justice, conflicting with principles of equality and non-discrimination upheld by democratic societies.

Moreover, algorithmic bias can diminish the potential benefits of AI. AI has the promise to improve efficiency and decision-making, but if its outcomes are discriminatory or inaccurate for subsets of the population, it cannot reach its full positive impact.

For example, an AI health tool that works well for one demographic but poorly for others is not truly effective or acceptable. As the OECD observed, bias in AI unfairly limits opportunities and can cost businesses their reputation and users' trust.

In short, addressing bias is not just a moral imperative but also critical to harnessing AI's benefits for all individuals in a fair manner.

Strategies for Mitigating AI Bias

Because algorithmic bias is now widely recognized, a range of strategies and best practices have emerged to mitigate it. Ensuring AI systems are fair and inclusive requires action at multiple stages of development and deployment:

Better Data Practices

Since biased data is a root cause, improving data quality is key. In practice, that means:

- Use diverse, representative training datasets that include minority groups

- Rigorously audit data for historical biases (different outcomes by race/gender)

- Correct or balance biased data before training the model

- Apply data augmentation or synthetic data for underrepresented groups

- Implement ongoing monitoring of AI outputs to flag bias issues early
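The audit and rebalancing steps in the list above can be sketched as follows. This is an illustrative sketch under stated assumptions, not a prescribed method: the hiring rows and the helper names `positive_rate_by_group` and `reweighing_weights` are hypothetical. The weighting scheme shown is one common pre-processing approach, often called reweighing, in which each (group, label) combination is weighted by its expected frequency divided by its observed frequency.

```python
from collections import Counter

def positive_rate_by_group(rows):
    """Share of positive labels per group, for (group, label) rows."""
    totals = Counter(g for g, _ in rows)
    positives = Counter(g for g, y in rows if y == 1)
    return {g: positives[g] / totals[g] for g in totals}

def reweighing_weights(rows):
    """Weight each (group, label) cell by expected / observed frequency.

    expected = P(group) * P(label); observed = P(group, label).
    With these weights applied, label and group are independent in the data.
    """
    n = len(rows)
    group_counts = Counter(g for g, _ in rows)
    label_counts = Counter(y for _, y in rows)
    cell_counts = Counter(rows)
    return {
        (g, y): (group_counts[g] * label_counts[y]) / (n * cell_counts[(g, y)])
        for (g, y) in cell_counts
    }

# Hypothetical historical hiring labels as (group, hired) pairs.
rows = [("A", 1)] * 60 + [("A", 0)] * 40 + [("B", 1)] * 20 + [("B", 0)] * 80

rates = positive_rate_by_group(rows)   # audit: how do outcomes differ by group?
weights = reweighing_weights(rows)     # correction: per-cell sample weights

print(rates)
for cell, weight in sorted(weights.items()):
    print(cell, round(weight, 3))
```

Here the audit shows group A was hired at three times the rate of group B. The weights raise the influence of under-represented combinations, such as hired members of group B, so a model trained with them as sample weights sees equal weighted hiring rates across the two groups.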

Fair Algorithm Design

Developers should consciously integrate fairness constraints and bias mitigation techniques into model training. This might include using algorithms that can be tuned for fairness (not just accuracy).

Apply Fairness Techniques

Use algorithms tuned for fairness, apply techniques to equalize error rates among groups, re-weight data, or alter decision thresholds thoughtfully.
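As a rough illustration of equalizing one error rate across groups by adjusting decision thresholds, the sketch below compares false positive rates at a shared threshold and then searches for per-group thresholds that meet a common target. The scores, labels, candidate thresholds, and function names are all hypothetical. Whether group-specific thresholds are appropriate at all is a policy question this sketch does not settle.

```python
def false_positive_rate(scores, labels, threshold):
    """FPR = false positives / actual negatives at the given threshold."""
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not negatives:
        return 0.0
    return sum(s >= threshold for s in negatives) / len(negatives)

def threshold_for_target_fpr(scores, labels, target_fpr, candidates):
    """Return the lowest candidate threshold whose FPR does not exceed target_fpr."""
    for t in sorted(candidates):
        if false_positive_rate(scores, labels, t) <= target_fpr:
            return t
    return max(candidates)  # fall back to the strictest candidate

# Hypothetical model scores and true labels for two groups.
scores_a = [0.2, 0.3, 0.4, 0.6, 0.7, 0.9]
labels_a = [0, 0, 0, 0, 1, 1]
scores_b = [0.3, 0.55, 0.6, 0.7, 0.8, 0.9]
labels_b = [0, 0, 0, 0, 1, 1]

# With one shared threshold, the false positive rates differ sharply.
fpr_a = false_positive_rate(scores_a, labels_a, 0.5)
fpr_b = false_positive_rate(scores_b, labels_b, 0.5)

# Search a small set of candidate thresholds for a common target FPR.
candidates = [0.5, 0.65, 0.75]
t_a = threshold_for_target_fpr(scores_a, labels_a, 0.25, candidates)
t_b = threshold_for_target_fpr(scores_b, labels_b, 0.25, candidates)

print(f"shared threshold 0.5: FPR A={fpr_a:.2f}, FPR B={fpr_b:.2f}")
print(f"thresholds for target FPR 0.25: A={t_a}, B={t_b}")
```

In this toy data, a shared threshold of 0.5 gives group B three times the false positive rate of group A. Raising group B's threshold to 0.65 brings both groups to the same rate.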

Use Bias Testing Tools

Leverage open-source tools and frameworks for testing models for bias and making adjustments during development.

Define Fairness Criteria

Work with domain experts and affected communities when defining fairness criteria, as multiple mathematical definitions of fairness exist and sometimes conflict.
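To make the conflict between fairness definitions concrete, here is a small sketch with hypothetical data. It compares two common criteria: demographic parity (equal selection rates across groups) and equal opportunity (equal true positive rates across groups). The groups, labels, and function names are assumptions made for illustration.

```python
def selection_rate(preds):
    """Fraction of people who receive a positive decision."""
    return sum(preds) / len(preds)

def true_positive_rate(labels, preds):
    """Fraction of truly positive cases that receive a positive decision."""
    decisions_for_positives = [p for y, p in zip(labels, preds) if y == 1]
    return sum(decisions_for_positives) / len(decisions_for_positives)

# Hypothetical ground truth: the groups have different base rates.
labels_a = [1] * 6 + [0] * 4   # 6 of 10 are positive cases
labels_b = [1] * 2 + [0] * 8   # 2 of 10 are positive cases

# A classifier that predicts every label correctly.
preds_a = list(labels_a)
preds_b = list(labels_b)

parity_gap = abs(selection_rate(preds_a) - selection_rate(preds_b))
opportunity_gap = abs(
    true_positive_rate(labels_a, preds_a) - true_positive_rate(labels_b, preds_b)
)

print(f"demographic parity gap: {parity_gap:.2f}")     # 0.40
print(f"equal opportunity gap: {opportunity_gap:.2f}")  # 0.00
```

Even a classifier that makes no mistakes satisfies equal opportunity here while violating demographic parity, because the two groups have different base rates in the data. Closing the parity gap would require changing some decisions, which opens a gap under the other criterion. Which criterion matters more depends on context, which is why the choice benefits from input from domain experts and affected communities.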

Human Oversight and Accountability

No AI system should operate in a vacuum without human accountability. Human oversight is crucial to catch and correct biases that a machine might learn.

Human-in-the-Loop

- Recruiters reviewing AI-screened candidates

- Judges considering AI risk scores with caution

- Medical professionals validating AI diagnoses

Accountability Measures

- Regular audits of AI decisions

- Bias impact assessments

- Explainable AI reasoning

- Clear responsibility assignment

Organizations must remember they are accountable for decisions made by their algorithms just as if made by employees. Transparency is another pillar here: being open about how an AI system works and its known limitations can build trust and allow independent scrutiny.

Some jurisdictions are moving toward mandating transparency for high-stakes algorithmic decisions, for example by requiring public agencies to disclose how algorithms are used in decisions affecting citizens. The goal is to ensure AI augments human decision-making without replacing ethical judgment or legal responsibility.

Diverse Teams and Regulation

Inclusive Development

A growing chorus of experts emphasizes the value of diversity among AI developers and stakeholders. AI products reflect the perspectives and blind spots of those who build them.

Regulation and Ethical Guidelines

Governments and international bodies are now actively stepping in to ensure AI bias is addressed:

- UNESCO's Recommendation on AI Ethics (2021): First global framework unanimously adopted, enshrining principles of transparency, fairness, and non-discrimination

- EU AI Act (2024): Makes bias prevention a priority, requiring strict evaluations for fairness in high-risk AI systems

- Local Government Action: More than a dozen major cities, including San Francisco, Boston, and Minneapolis, have banned police use of facial recognition because of concerns about racial bias

The Path Forward: Building Ethical AI

Algorithmic bias in AI is a global challenge that we are only beginning to tackle effectively. The examples and efforts above make clear that it is not a niche issue: it affects economic opportunities, justice, health, and social cohesion worldwide.

Building fair AI will require ongoing vigilance: continually testing AI systems for bias, improving data and algorithms, involving diverse stakeholders, and updating regulations as technology evolves.

At its core, combating algorithmic bias is about aligning AI with our values of equality and fairness. As UNESCO's Director-General Audrey Azoulay noted, even "small biases in [AI] content can significantly amplify inequalities in the real world".

Therefore, the pursuit of unbiased AI is critical to ensure technology uplifts all segments of society rather than reinforcing old prejudices.

By prioritizing ethical principles in AI design – and backing them up with concrete actions and policies – we can harness AI's innovative power while safeguarding human dignity.
