Golden Rules When Using AI

Applying AI effectively requires strategy and caution. These 10 golden rules will help you maximize productivity, avoid common pitfalls, and use AI safely in daily tasks.

Artificial intelligence (AI) offers powerful tools to boost creativity, productivity, and problem-solving, but we must use it wisely. Experts and international bodies such as UNESCO emphasize that AI should respect core human values like human rights, dignity, transparency, and fairness.

To get the most out of AI and avoid pitfalls, follow these ten golden rules when using AI.

- 1. Understand AI's Strengths and Limits

- 2. Communicate Clearly with Prompts

- 3. Protect Privacy and Data Security

- 4. Always Double-Check AI Outputs

- 5. Be Aware of Bias and Fairness

- 6. Keep a Human in the Loop (Accountability)

- 7. Use AI Ethically and Legally

- 8. Be Transparent About AI Use

- 9. Keep Learning and Stay Informed

- 10. Use Trusted Tools and Follow Guidelines

- Key Takeaways

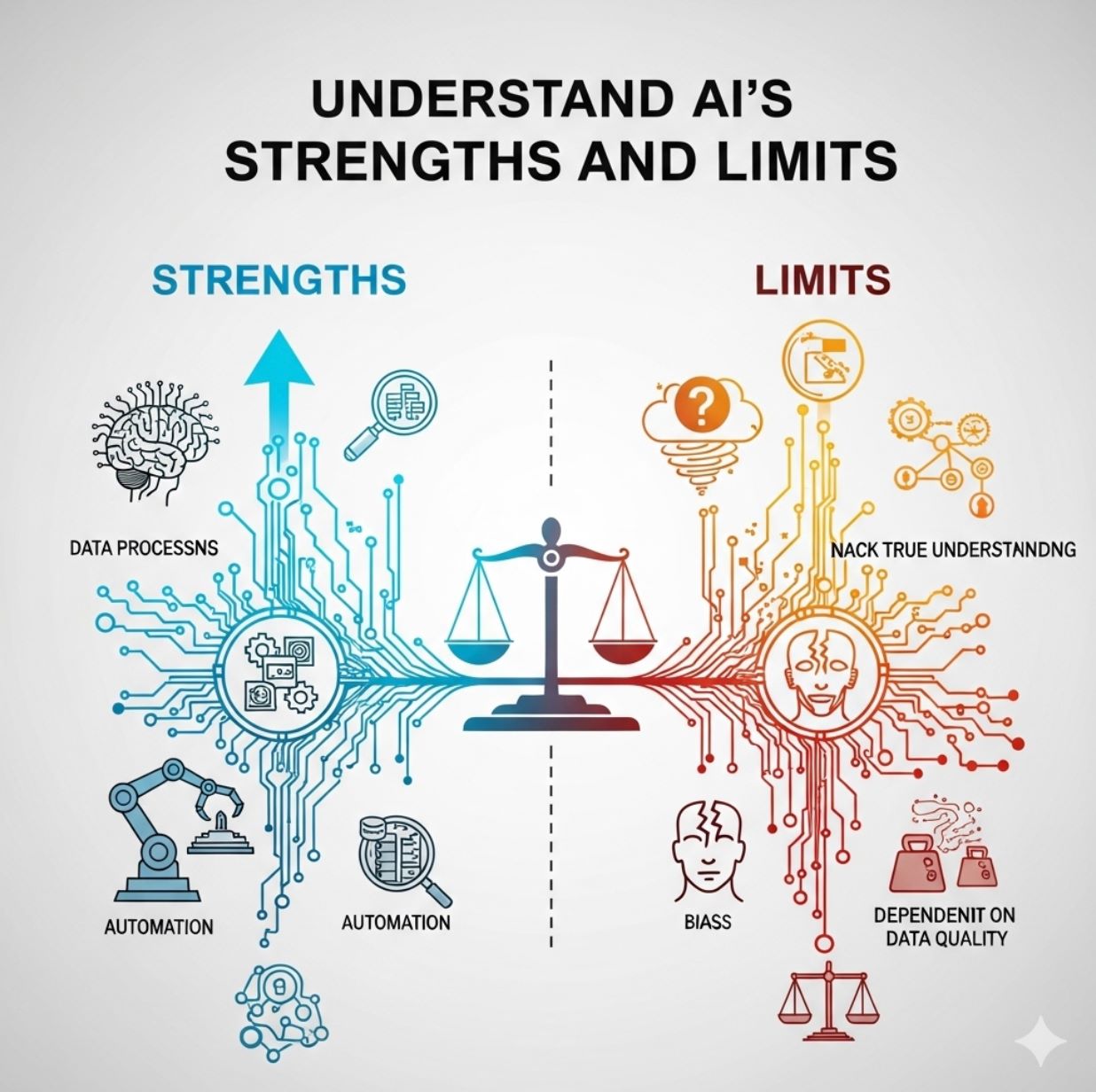

1. Understand AI's Strengths and Limits

AI is a smart assistant, not an all-knowing oracle. It can generate ideas and save time, but it can also make mistakes or "hallucinate" information.

What AI Does Well

- Generate creative ideas quickly

- Process large amounts of data

- Automate repetitive tasks

- Provide 24/7 availability

What AI Struggles With

- Making critical decisions independently

- Understanding context the way humans do

- Providing 100% accurate information

- Replacing human judgment

For important decisions, such as those about health or finances, involve real experts. AI can help with research, but it shouldn't make the final call. In short, trust but verify: double- and triple-check AI results.

2. Communicate Clearly with Prompts

Think of your AI model as a very smart colleague: give it clear, detailed instructions and examples. OpenAI's prompt-writing guidelines advise: "Be specific, descriptive and as detailed as possible about the desired context, outcome, length, format, style, etc."

Poor Example

"Write about sports"

Too general, lacks context and direction

Better Example

"Write a short, friendly blog post about why daily exercise boosts mood, in a conversational tone."

Specific, detailed, with clear expectations

Be Specific

Define exactly what you want

- Clear objectives

- Detailed requirements

Set Style

Specify tone and format

- Conversational tone

- Professional format

Provide Context

Give background information

- Target audience

- Use case scenario

Good prompts (context plus specifics) lead to better, more accurate AI responses. This is simply good communication: the more context and guidance you give, the better the AI can help you.
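To make the "be specific, set style, provide context" checklist concrete, here is a minimal Python sketch that assembles a prompt from separate parts. The `PromptSpec` class and its field names are hypothetical, made up for illustration; the code only builds a text prompt and does not call any AI service.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of a well-specified prompt."""
    task: str           # clear objective: what you want done
    audience: str       # context: who the output is for
    tone: str           # style, e.g. "conversational"
    length: str         # e.g. "about 300 words"
    output_format: str  # e.g. "blog post with a title"

    def render(self) -> str:
        # Put each requirement on its own line so none of them gets lost.
        return "\n".join([
            f"Task: {self.task}",
            f"Audience: {self.audience}",
            f"Tone: {self.tone}",
            f"Length: {self.length}",
            f"Format: {self.output_format}",
        ])


spec = PromptSpec(
    task="Explain why daily exercise boosts mood",
    audience="busy adults new to fitness",
    tone="friendly and conversational",
    length="about 300 words",
    output_format="short blog post with a title",
)
print(spec.render())
```

Filling in every field forces you to decide on audience, tone, length, and format up front, which is exactly what the vague "Write about sports" prompt leaves out.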

3. Protect Privacy and Data Security

Think twice before typing in addresses, passwords, medical info, or confidential business details: fraudsters and hackers can exploit what you share online.

If you wouldn't post something on social media, don't feed it to an AI chatbot.

— Privacy Security Best Practice

Never Share

- Personal addresses

- Passwords or credentials

- Medical information

- Financial details

- Confidential business data

Safe Practices

- Use trusted platforms only

- Read privacy policies

- Turn off training features

- Use approved company tools

- Keep personal info out of queries

Some free or unvetted AI apps may misuse your data or even train on it without clear consent. Always use well-known, trusted platforms (or approved company tools) and read their privacy policies.

In many jurisdictions, you have legal rights over your data: organizations should collect only the data that is strictly necessary and get your permission before using it.

In practice, this means turning off training or memory features where possible and keeping personal information out of your queries.
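One practical way to keep personal information out of your queries is to scrub obvious identifiers before pasting text into a chatbot. The following is a minimal Python sketch using regular expressions. The patterns are simplified assumptions that catch common email, card-number, and phone formats; they will miss many others and can flag harmless numbers, so treat this as a safety net rather than a guarantee.

```python
import re

# Simplified, illustrative patterns; real PII detection needs far more care.
# Order matters: card numbers are replaced before the looser phone pattern runs.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "CARD": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "PHONE": re.compile(r"\+?\d[\d ()-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each match with a placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Contact jane.doe@example.com or +1 555-123-4567."))
```

Running the example prints `Contact [EMAIL] or [PHONE].`, so the question can still be asked without exposing the underlying details.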

4. Always Double-Check AI Outputs

AI can invent facts or confidently give wrong answers. Never copy an AI's work as-is. For every important piece of AI-generated output (facts, summaries, suggestions), cross-check it against reliable sources.

Compare Sources

Compare the AI's answers to official data or expert sources.

Run Quality Checks

Run outputs through plagiarism and grammar checkers; AI sometimes reproduces existing text verbatim, which can raise copyright issues.

Apply Human Judgment

Use your own expertise or intuition: if a claim sounds unbelievable, look it up.

AI should not "displace ultimate human responsibility."

— UNESCO AI Ethics Guide

Remember, you are accountable for what AI does for you. In practice, this means you stay in control: edit, fact-check, and refine the AI's work before publishing or acting on it.

5. Be Aware of Bias and Fairness

AI models learn from human-created data, so they can inherit social biases. This can affect hiring decisions, loan approvals, or even everyday language use.

Rule: think critically about outputs. If an AI keeps suggesting the same gender or race for a job, or if it stereotypes groups, pause and question it.

AI systems "should treat all people fairly," should not discriminate, and must be used equitably.

— White House AI Bill of Rights & Microsoft AI Principles

Diversify Examples

Include diverse examples and perspectives in your prompts

Test Scenarios

Test the AI with scenarios involving different demographics

Address Issues

If you spot bias, refine your prompt or switch tools
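The "Test Scenarios" idea can be done systematically with counterfactual prompts: send the same request several times, changing only one demographic detail, and compare the answers. Below is a minimal Python sketch that only generates such prompt variants. The template, the names, and the `counterfactual_prompts` function are hypothetical examples; you would still need to send each prompt to your AI tool and review the responses side by side.

```python
from itertools import product

# Hypothetical template; only the bracketed details change between variants.
TEMPLATE = ("Write a one-paragraph reference letter for {name}, "
            "a {age}-year-old applying for a {role} position.")

NAMES = ["Emily", "Jamal", "Wei", "Carlos"]
AGES = [28, 58]
ROLES = ["software engineer"]


def counterfactual_prompts(template, **options):
    """Yield (details, prompt) pairs for every combination of option values."""
    keys = list(options)
    for values in product(*(options[k] for k in keys)):
        details = dict(zip(keys, values))
        yield details, template.format(**details)


for details, prompt in counterfactual_prompts(TEMPLATE, name=NAMES, age=AGES, role=ROLES):
    print(details, "->", prompt)
```

If the letters for otherwise identical candidates differ noticeably in tone or praise, that is a signal to refine the prompt or try a different tool.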

6. Keep a Human in the Loop (Accountability)

AI can automate tasks, but humans must stay in charge. The UNESCO recommendation stresses that AI should never "displace ultimate human responsibility".

In practice, this means designing your workflow so a person reviews or supervises AI results.

Plan Fail-Safes

Always have a backup plan

- Human intervention ready

- Emergency shutdown options

Keep Records

Document AI usage

- When AI was used

- How decisions were made

Enable Auditing

Maintain transparency

- Trace logs of decisions

- Investigation capabilities

Accountability also means keeping records: note when and how you used AI, so you can explain your actions if needed.

Some organizations even require AI projects to be auditable, with trace logs of decisions. This lets someone investigate and correct issues, in line with the principles of transparency and accountability.
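Keeping records does not require special tooling. Below is a minimal Python sketch of an append-only usage log in JSON Lines format, one record per AI-assisted task. The file name `ai_usage_log.jsonl`, the field names, and the `log_ai_use` helper are all hypothetical; adapt them to whatever your organization actually requires.

```python
import json
from datetime import datetime, timezone
from pathlib import Path

LOG_FILE = Path("ai_usage_log.jsonl")  # hypothetical location


def log_ai_use(tool: str, purpose: str, reviewer: str, decision: str,
               log_file: Path = LOG_FILE) -> dict:
    """Append one record describing an AI-assisted task and return it."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "tool": tool,
        "purpose": purpose,
        "reviewed_by": reviewer,  # the human who checked the output
        "decision": decision,     # what was done with the output
    }
    with log_file.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record


if __name__ == "__main__":
    log_ai_use("chat assistant", "Draft customer reply", "A. Reviewer",
               "Edited tone and corrected details before sending")
```

Because each line is a self-contained JSON object, the log is easy to append to and easy to search later when someone needs to trace when AI was used and who approved the result.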

7. Use AI Ethically and Legally

Always follow the law and moral guidelines. Do not use AI for prohibited or harmful tasks (for example, creating malware, plagiarizing copyrighted text, or deceiving others).

Prohibited Uses

- Creating malware

- Plagiarizing copyrighted content

- Deceiving others

- Generating harmful content

Respect IP Rights

- Give credit when needed

- Avoid outright copying

- Check copyright status

- Follow fair use guidelines

Respect intellectual property: if AI helps generate an image or article, be careful to give credit when needed and avoid outright copying.

The White House's Blueprint for an AI Bill of Rights emphasizes data privacy and fairness, and by extension it implies that you should keep AI use within ethical bounds.

Many countries are passing AI laws; the EU's AI Act, for example, prioritizes safety and fundamental rights. Stay up to date on these so you don't unwittingly break new regulations.

In short: do the right thing. If a request seems shady or illegal, it probably is. When in doubt, consult a supervisor or legal advisor.

8. Be Transparent About AI Use

Transparency builds trust. If you are using AI to generate content (articles, reports, code, etc.), consider letting your audience or team know. Explain how AI is assisting (e.g. "This summary was drafted by AI, then edited by me").

People should know when AI systems are being used and how they affect decisions.

— White House AI Blueprint "Notice and Explanation" Principle

Label Content

Mark AI-generated content clearly

Cite Sources

Give credit to original authors when adapting content

Share Workflow

Explain which tools and steps you used

For example, if a company uses AI to screen job applicants, candidates should be informed about it.

In practical terms, label AI-generated content, and be clear about data sources. If you adapt someone else's writing with AI, cite the original author. In a work setting, share your AI workflow with colleagues (which AI tools you used and what steps you took).

9. Keep Learning and Stay Informed

AI is evolving fast, so keep your skills and knowledge up to date. Follow reputable news (technology blogs, official AI forums, or international guidelines like UNESCO's recommendations) to learn about new risks and best practices.

Public education and training are key to safe AI use.

— UNESCO AI Recommendation on "AI Literacy"

Here's how to practice continual learning:

Formal Learning

- Take online courses or webinars on AI safety and ethics

- Read up on new features of the AI tools you use

Community Learning

- Share tips and resources with friends or colleagues (e.g. how to write better prompts or spot AI bias)

- Teach younger users (or kids) that AI can help but should be questioned

By learning together, we build a community that uses AI wisely. After all, every user shares responsibility for ensuring AI benefits everyone.

10. Use Trusted Tools and Follow Guidelines

Finally, stick with reputable AI tools and official guidance. Download AI apps only from official sources (e.g. the vendor's own website or an official app store) to avoid malware or fraud.

In the workplace, use company-approved AI platforms that meet security and privacy standards.

Choose Reputable Vendors

Support ethical AI development

- Clear data policies

- Ethical commitments

Use Safety Features

Leverage built-in protections

- Disable data training

- Set content filters

Back Up Data

Maintain independence

- Independent backups

- Avoid vendor lock-in

Support AI vendors that commit to ethical development. For example, prefer tools with clear data policies and published ethical commitments (many large tech companies now publish these). Use built-in safety features: some platforms let you disable data training or set content filters.

And always back up your own data independently of the AI service, so you're not locked out if something goes wrong.

Key Takeaways

In summary, AI is a powerful partner when used responsibly. By following these ten golden rules (respecting privacy, checking facts, staying ethical and informed, and keeping humans in control), you can harness AI's benefits safely.

As technology advances, these principles will help ensure AI remains a force for good.
