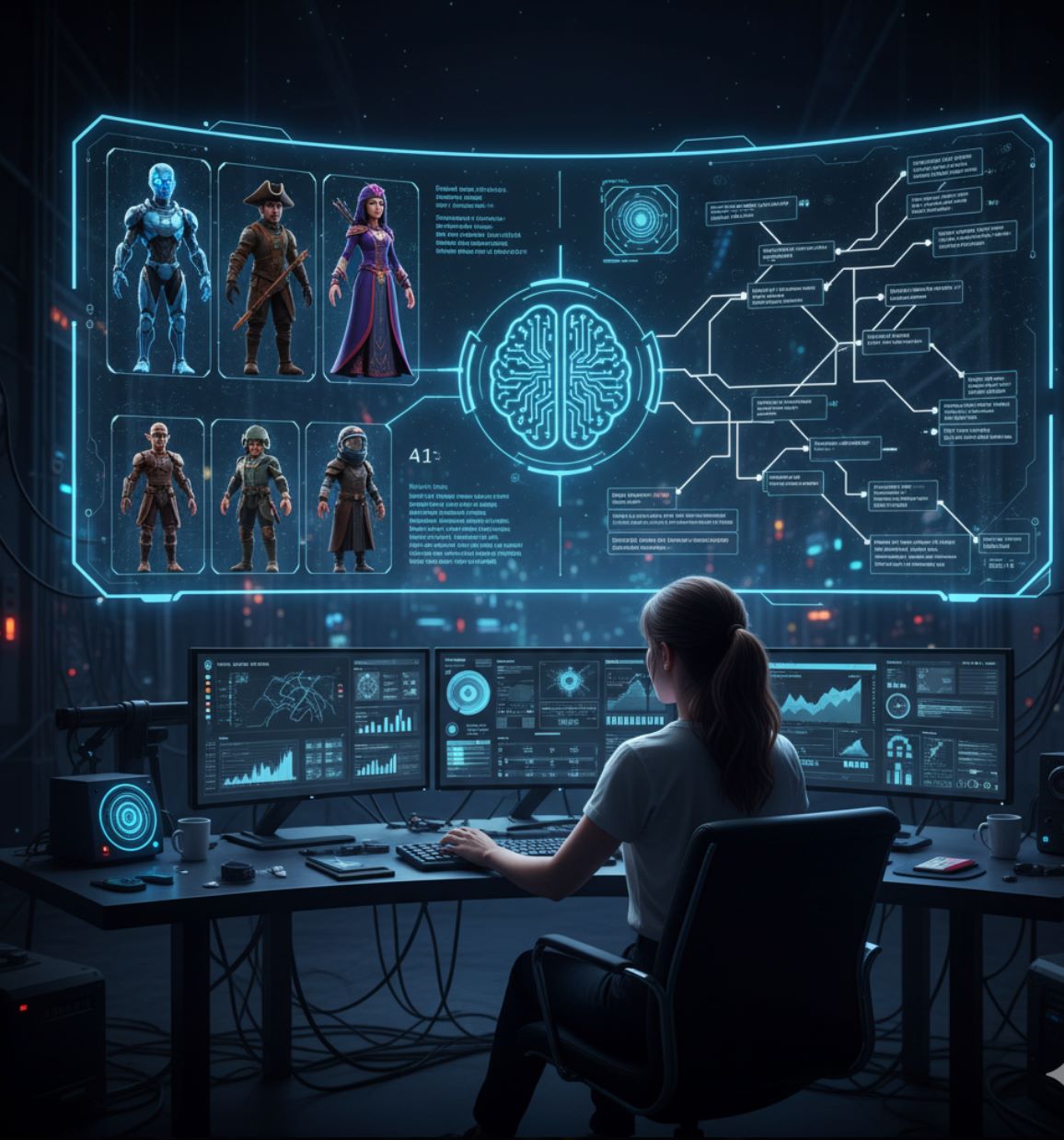

AI generates maps and game environments automatically

AI not only saves development time but also makes a practically unlimited variety of unique, creative, and detailed virtual worlds possible, paving the way for a future where maps and game environments are fully automated.

Artificial intelligence is revolutionizing how game developers create maps and environments. Modern AI tools can automatically generate detailed game worlds that once took design teams many hours to build.

Instead of hand-crafting every tile or model, developers can input high-level prompts or data and let AI fill in the rest. For example, Google DeepMind's new "Genie 3" model can take a text description (like "foggy mountain village at sunrise") and instantly produce a fully navigable 3D world.

Industry experts note that tools like Recraft now enable entire game environments (textures, sprites, level layouts) to be generated from simple text commands. This fusion of AI with traditional procedural methods greatly speeds up development and opens up endless creative possibilities.

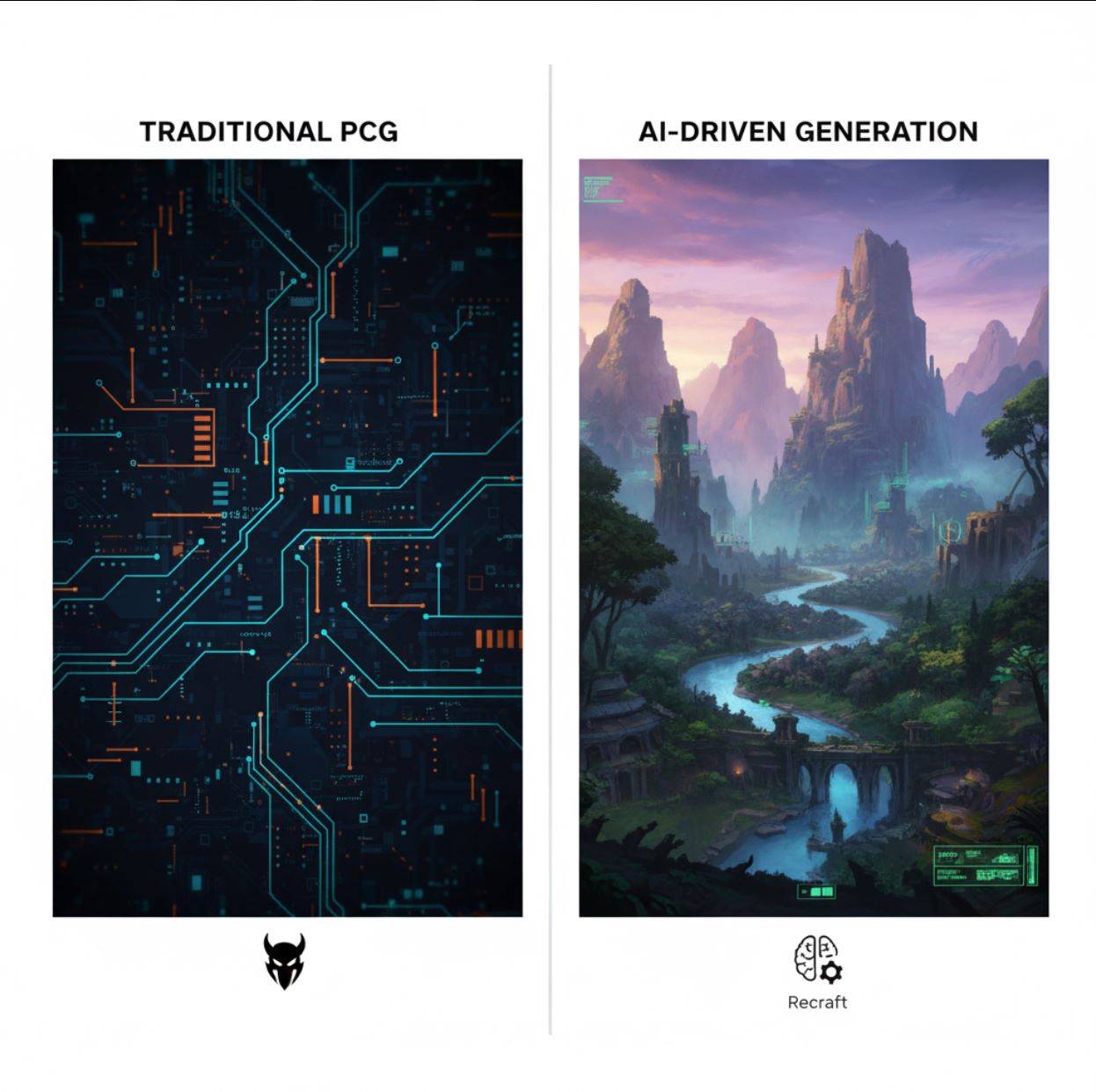

Traditional vs. AI-Based Map Generation

Procedural Generation (PCG)

Earlier games used algorithmic methods such as Perlin noise for terrain or rule-based tile placement to create levels and maps.

- Powers vast randomized worlds (Diablo, No Man's Sky)

- Reduces manual work significantly

- Delivers "endless content by dynamically creating levels"

- Can produce repetitive patterns

- Requires extensive parameter fine-tuning
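
To make the traditional approach concrete, here is a minimal sketch of noise-driven terrain followed by rule-based tile placement. It uses value noise (a simpler relative of Perlin noise) so that it needs only the standard library. The function names, the `cell` size, and the `water` and `mountain` thresholds are illustrative assumptions, not taken from any particular game.

```python
import random

def value_noise_grid(width, height, cell=4, seed=0):
    """Build a height map in [0, 1] by smoothly interpolating a coarse random lattice.

    This is value noise, not true Perlin (gradient) noise.
    """
    rng = random.Random(seed)
    # +2 guarantees every sample has a right-hand and lower lattice neighbour.
    lat_w = width // cell + 2
    lat_h = height // cell + 2
    lattice = [[rng.random() for _ in range(lat_w)] for _ in range(lat_h)]

    def smooth(t):
        # Smoothstep easing softens the visible seams between lattice cells.
        return t * t * (3 - 2 * t)

    grid = []
    for y in range(height):
        gy, ry = divmod(y, cell)
        ty = smooth(ry / cell)
        row = []
        for x in range(width):
            gx, rx = divmod(x, cell)
            tx = smooth(rx / cell)
            top = lattice[gy][gx] * (1 - tx) + lattice[gy][gx + 1] * tx
            bottom = lattice[gy + 1][gx] * (1 - tx) + lattice[gy + 1][gx + 1] * tx
            row.append(top * (1 - ty) + bottom * ty)
        grid.append(row)
    return grid

def to_tiles(heights, water=0.35, mountain=0.7):
    """Rule-based tile placement: map each height to a tile symbol by threshold."""
    def tile(h):
        if h < water:
            return "~"  # water
        if h > mountain:
            return "^"  # mountain
        return "."      # plains
    return ["".join(tile(h) for h in row) for row in heights]

if __name__ == "__main__":
    for line in to_tiles(value_noise_grid(32, 12, seed=42)):
        print(line)
```

Changing `seed` yields a different map from the same rules, while `cell` and the two thresholds are the kind of parameters that need the fine-tuning mentioned above.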

Machine Learning Generation

Generative models (GANs, diffusion networks, transformer "world models") learn from real examples or gameplay data.

- Produces more varied and realistic environments

- Follows creative text prompts

- Captures complex spatial patterns

- Generates assets through simple commands

- Mimics learned styles and themes

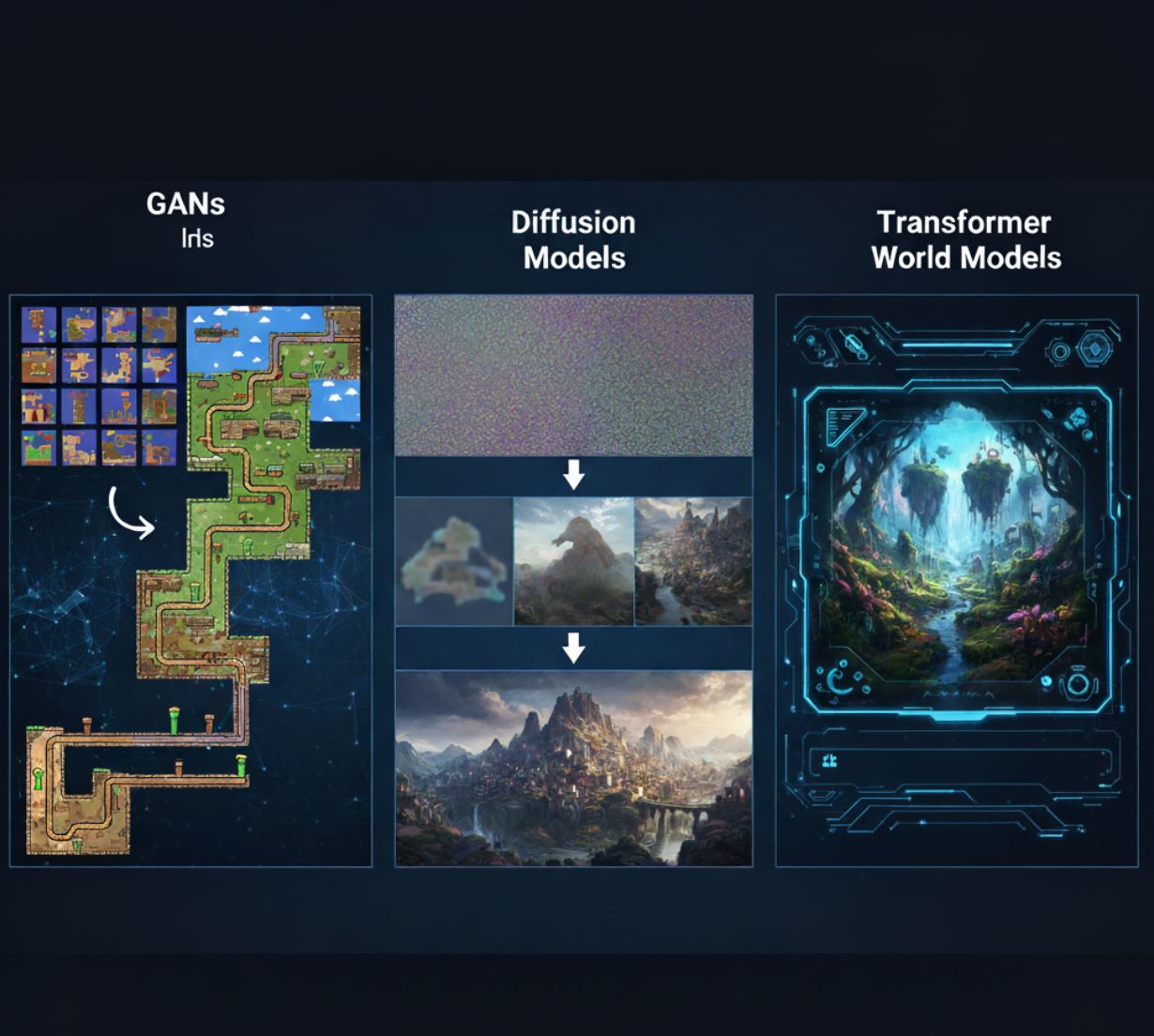

Generative AI Techniques

AI uses several advanced techniques to build game environments, each with unique strengths:

GANs (Generative Adversarial Networks)

Neural networks trained on collections of maps or terrain images. By learning the statistical patterns in that data, they create new maps with realistic features.

- Self-attention GANs improve level coherence

- Capture long-range patterns in 2D levels

- Generate complex platformer stages

- Create plausible 3D terrain from examples

Diffusion Models

AI systems like Stable Diffusion that iteratively refine random noise into structured images and environments.

- Text-conditioned generation from prompts

- Transform noise into detailed landscapes

- 3D diffusion for game assets and scenes

- Rich textures and geometry output

Transformer World Models

Large transformer-based AIs that generate entire interactive worlds, like DeepMind's Genie 3.

- Interpret text prompts in real time

- Render consistent 3D environments

- Understand game-like spatial logic

- Act as automated level designers

Top AI Tools for Game Environment Generation

Promethean AI

Application Information

| Specification | Details |
|---|---|
| Developer | Promethean AI, founded by veteran game artists and technical art professionals dedicated to empowering creative teams with AI-driven production tools. |
| Supported Platforms | Integrates with 3D editors (Unreal Engine, Unity, Maya, Blender). Desktop applications available for Windows and macOS via WebCatalog wrappers. |
| Availability | Globally accessible with no regional restrictions. Interface supports English and serves international creative teams worldwide. |
| Pricing Model | Free version for non-commercial use. Enterprise licensing required for commercial projects and advanced features. |

What is Promethean AI?

Promethean AI is an AI-powered assistant designed for creative teams, game studios, and digital artists working on virtual world creation, asset management, and environment building. It automates repetitive tasks and accelerates production workflows, allowing artists to focus on creativity and storytelling rather than mundane technical work.

The platform integrates seamlessly into existing art production pipelines without requiring teams to change editors or upload proprietary assets to external servers. This makes it ideal for studios prioritizing data security and workflow continuity.

How Promethean AI Works

Promethean AI plugs directly into your existing 3D editor environment (Unreal Engine, Unity, Maya, Blender) through APIs or native integrations. It enables teams to generate intelligent suggestions, auto-place assets, and streamline set dressing while respecting established workflows and creative preferences.

The AI learns from user behavior — observing how artists place objects, apply color palettes, and compose environments. Over time, it evolves into a personalized creative AI assistant that surfaces relevant ideas, suggests appropriate assets, handles repetitive layout tasks, and accelerates world-building processes.

Key Features

Intelligent object placement and environment composition suggestions that adapt to your creative style.

Automatically index, tag, and reuse existing assets across new scenes with intelligent categorization.

Works with your current 3D software through APIs (C++, C#, Python) — no need to change editors.

SSO support, enterprise-grade data protection, and customizable workflows for studio requirements.

Shared boards for teams to exchange inspiration, components, and creative assets in real-time.

Dramatically improve prototyping and set dressing speed, reducing both production time and costs.

The AI adapts to your team's unique aesthetic preferences and pipeline requirements over time.

Keep proprietary assets private and local — no forced cloud uploads or external data sharing.

Getting Started Guide

Promethean AI is enterprise-focused. Begin by applying for pilot access or requesting a demo through their official website.

Use the provided SDKs or APIs (C++, C#, Python) to connect Promethean AI with your production environment and 3D editors.

Link your local asset repositories. Promethean AI indexes and categorizes them without requiring external uploads.

Invoke intelligent suggestions for object placement, scene filling, and set dressing. The AI proposes environment layouts based on context and style.

Use collaboration boards to gather inspiration, reuse components, and enable multiple team members to contribute simultaneously.

Provide feedback on suggestions (accepted or rejected) to help the AI better align with your creative style and preferences.

Complete scenes in your editor as usual. Promethean AI doesn't lock you into proprietary formats — maintain full creative control.

Important Considerations

- The free version offers limited functionality compared to full enterprise licensing — commercial use requires a paid license.

- Integration may require technical expertise (API setup, pipeline adaptation) — not entirely plug-and-play for non-technical users.

- Some users report occasional compatibility or export challenges with specific creative software configurations.

- AI suggestions may not always perfectly align with artistic vision — manual overrides and adjustments are sometimes necessary.

- Teams must verify that Promethean AI's security features comply with their internal data protection policies.

- Strong hardware and network performance recommended, especially when indexing or managing large asset libraries.

- As an evolving product, some features may still be in pilot or beta phase with ongoing development.

Frequently Asked Questions

You do not need to switch editors. Promethean AI integrates via APIs to work alongside your current pipeline, so you can continue using Unreal Engine, Unity, Maya, Blender, or other preferred tools.

You do not need to upload assets to the cloud. Promethean AI emphasizes that proprietary assets remain within your infrastructure, which protects data security and intellectual property.

Promethean AI is tailored for game development studios, film and animation production, architectural visualization firms, asset production outsourcing companies, and any creative teams building virtual worlds or 3D environments.

The free version is intended for non-commercial use only. Commercial or professional projects require enterprise licensing. Contact Promethean AI for pricing and licensing options.

Pricing is not publicly listed. You must contact Promethean AI directly for enterprise licensing quotes tailored to your team size and requirements.

Promethean AI provides enterprise-grade data security, SSO support, and customizable workflows designed to meet internal security requirements. Assets remain local without forced cloud uploads.

Promethean AI is not meant to replace artists; its mission is to augment them. It handles repetitive and time-consuming tasks, freeing artists to focus on creative vision, storytelling, and high-level design decisions.

To get access, apply through their website under "Early Adopter" programs or contact their sales and partnership team for pilot access and demonstrations.

BasedLabs.ai / Random

Application Information

| Specification | Details |
|---|---|
| Developer | BasedLabs.ai develops and maintains the BasedLabs platform, offering a comprehensive suite of AI-powered tools for content creation and utility functions. |
| Supported Devices | Web-based platform accessible on desktop and mobile browsers; no installation required. |
| Languages & Availability | Available globally in English. Accessible from any country with an internet connection. |
| Pricing Model | Free tier available: the basic Random Generator tool is free to use. Advanced features and high-volume usage may require credits or a subscription. |

General Overview

BasedLabs.ai is an all-in-one AI content creation platform that combines AI image generation, video tools, creative utilities, and randomization functions. The platform's Random Generator tool enables users to generate randomized numbers, names, words, passwords, or spin-the-wheel outcomes using AI-assisted algorithms.

"BasedLabs Random" refers to the Random Generator tool and its various randomization modes within the BasedLabs ecosystem. It complements other creative offerings (image, video, voice) by providing flexible randomization utilities for creative projects, games, decision-making, and practical applications.

Detailed Introduction

The Random Generator page offers multiple generation modes: Numbers, Names, Words, Passwords, and Wheel (spinning wheel). Users select their preferred mode, configure parameters (such as count and ranges), enter a natural language prompt (e.g., "six numbers between 10 and 500" or "short whimsical pet names"), and click Generate.

The AI-powered engine, combined with a pseudo-random algorithm and AI filtering layer, returns results that you can review and copy instantly. The tool is designed to be fast, secure, and free to start, offering unlimited basic generations in most cases.

Beyond the Random Generator, BasedLabs includes an extensive collection of AI image, video, voice, writing, and creative tools. The randomization utilities integrate seamlessly with these creative features as part of the comprehensive platform.

Key Features

Multiple randomization options for different use cases:

- Numbers with custom ranges

- Names for characters or projects

- Words for creative inspiration

- Secure password generation

- Wheel spinner for decisions

Fine-tune your random outputs:

- Set count and min/max ranges

- Natural language prompt input

- AI-guided generation filters

- Duplicate avoidance system

Streamlined generation process:

- One-click generation

- Instant results display

- Copy/export with single click

- Unlimited basic usage

Comprehensive creative platform:

- AI image generation from text

- Video creation & editing

- Voice/audio generation

- AI writing & content tools

- Raffle & winner selection

User Guide

Open your browser and navigate to basedlabs.ai.

Sign up for a free account or log in to access the Random Generator and other tools.

From the "Apps" or "Tools" menu, select Random Generator.

Choose your desired mode from the dropdown: Numbers, Names, Words, Password, or Wheel.

Set parameters such as count and ranges, or enter a natural language prompt to guide the generation.

Click the Generate button and wait a moment for the AI to process your request.

Results appear in the output panel. Copy the text or export as needed for your project.

Adjust your prompt or parameters to generate new results, or switch to a different randomization mode.

Use your generated numbers, names, passwords, or wheel outcomes in projects, games, writing, or decision-making tasks.

Important Notes & Limitations

- Custom constraints may be limited — highly complex conditional rules might not be supported

- Service availability depends on GPU provider status — temporary downtime may occur during maintenance or provider switches

- Advanced features and high-volume usage may require credits or subscription beyond the free tier

- Web-based platform only — offline or local usage is not supported

- Individual tools or apps may occasionally be unavailable during maintenance periods

Frequently Asked Questions

Basic random generation is free to start. Premium features or high-volume usage may require purchasing credits or subscribing to a paid plan.

You can generate numbers, names, words, secure passwords, or spin-the-wheel outcomes using the Random Generator's various modes.

In number mode, you can input custom minimum and maximum ranges to control the output values.

The tool uses a pseudo-random engine enhanced with AI filters. While suitable for creative, entertainment, and everyday uses, it may not match cryptographic randomness standards required for high-security applications.

The tool provides one-click copying and export functionality for all generated results.

BasedLabs apps may be temporarily unavailable when switching GPU providers or during maintenance. The team works to restore service quickly. Check back shortly if you encounter downtime.

The tool does not work offline: it is a browser-based platform that requires an active internet connection to function.

BasedLabs Random is designed for creative, practical, and entertainment purposes rather than strict security needs. For cryptographic or highly secure applications, use a dedicated secure random number generator.

The tool is well suited to random draws, name/word generation, password creation, classroom activities, game mechanics, and giveaway winner selection.
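
For cases where security does matter, the recommendation above to use a dedicated secure random number generator can be followed with Python's standard `secrets` module. The sketch below is independent of BasedLabs: the function names and default password length are illustrative assumptions, and the example range reuses the "six numbers between 10 and 500" prompt from earlier in this section.

```python
import secrets
import string

def secure_numbers(count, low, high):
    """Draw `count` integers uniformly from [low, high] using the OS-level CSPRNG."""
    span = high - low + 1
    return [low + secrets.randbelow(span) for _ in range(count)]

def secure_unique_numbers(count, low, high):
    """Same range, but without duplicates (for example, raffle tickets)."""
    rng = secrets.SystemRandom()
    return rng.sample(range(low, high + 1), count)

def secure_password(length=16):
    """Build a password from letters, digits, and punctuation."""
    alphabet = string.ascii_letters + string.digits + string.punctuation
    return "".join(secrets.choice(alphabet) for _ in range(length))

if __name__ == "__main__":
    print(secure_numbers(6, 10, 500))
    print(secure_unique_numbers(6, 10, 500))
    print(secure_password())
```

Unlike a seeded pseudo-random generator, these calls cannot be replayed from a seed, which is the property wanted for passwords and prize draws but not for reproducible level generation.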

Getimg.ai – AI DnD / Fantasy Map Maker

Application Information

| Specification | Details |
|---|---|
| Developer | Getimg.ai |
| Supported Devices | Web-based platform accessible on desktop, tablet, and mobile browsers |
| Languages | Available globally; supports English interface |
| Pricing | Free plan with limited generation credits and paid tiers for advanced usage |

What is Getimg.ai?

Getimg.ai is a comprehensive AI image-generation platform designed to help users create stunning visuals, including DnD and fantasy maps. It provides powerful tools for generating, editing, and expanding images using artificial intelligence, making it ideal for artists, game developers, and storytellers who need professional-quality fantasy artwork.

Advanced AI-Powered Map Creation

Getimg.ai uses advanced diffusion models to turn text prompts into high-quality, imaginative images. Its Fantasy Map Maker and DnD generator tools allow users to design detailed fictional worlds in seconds. Whether you're creating a world map for your tabletop RPG campaign or developing fantasy art for a novel, Getimg.ai streamlines the creative process through AI automation.

The platform integrates powerful features such as AI Canvas for inpainting and outpainting, DreamBooth for fine-tuning AI models, and Image Editor for customizing outputs to match your creative vision.

Key Features

Create realistic or stylized images from text prompts with advanced diffusion models.

Generate intricate maps for role-playing games and fantasy projects in seconds.

Expand or modify existing images seamlessly with inpainting and outpainting tools.

Train and deploy personalized AI models using DreamBooth technology.

Generate multiple artistic interpretations of the same prompt for creative exploration.

No downloads or installations needed—access from any device with a browser.

How to Use Getimg.ai

Navigate to the official Getimg.ai website using any modern web browser.

Sign up for a free account or sign in with your existing credentials to access the platform.

Choose a tool such as AI Image Generator or Fantasy Map Maker based on your project needs.

Type a descriptive text prompt (e.g., "ancient fantasy kingdom map with mountains and rivers").

Customize settings like style, aspect ratio, and resolution to match your creative vision.

Click "Generate" to create your map or image using AI technology.

Download your result or refine it further using the AI Canvas editor for perfect results.

Important Limitations

- Free users have limited generation credits per month

- Output quality may vary depending on prompt detail and specificity

- Requires a stable internet connection for processing and generation

- Advanced customization options are only available in paid plans

Frequently Asked Questions

Paid plans include commercial usage rights, allowing you to use generated images in professional and commercial projects.

Users can define artistic styles such as watercolor, parchment, or fantasy illustration to match their creative vision.

Getimg.ai runs entirely in your web browser. No downloads or installations are required.

Getimg.ai's intuitive interface makes it easy for both beginners and professionals to create fantasy maps and images quickly without prior experience.

The platform uses advanced diffusion-based models optimized for image generation and enhancement, ensuring high-quality outputs.

AI Map Generator

Application Information

| Specification | Details |
|---|---|
| Developer | Created by Elias Bing as an AI-driven map creation showcase project (featured on Devpost) |
| Platform | Web-based platform, accessible on desktop and mobile browsers without app installation |
| Availability | English interface, globally accessible with no region restrictions |
| Pricing Model | Free trial: 3 free credits for new users, plus paid credit packs (Lite, Creator, Professional) with commercial usage rights |

What is AI Map Generator?

AI Map Generator is an AI-powered tool that transforms text descriptions into detailed, high-resolution maps in multiple artistic styles including fantasy, watercolor, and sci-fi. Designed for game masters, RPG enthusiasts, storytellers, and creative professionals, it eliminates the need for manual design skills while delivering professional-quality visual world layouts in seconds.

The platform allows you to specify terrain details, structures, and layout preferences through simple text prompts, then generates downloadable maps suitable for tabletop campaigns, video games, storytelling projects, and commercial applications.

How It Works

Getting started with AI Map Generator is straightforward. First, select your preferred map style from options like Fantasy, Watercolor, Sci-Fi, or create a Custom style. Next, input a detailed text description of your envisioned map — for example, "dragon's lair with underground tunnels, lava pools, and hidden treasure rooms." You can also upload a reference image to guide the visual style and composition.

Click "Generate Map" and the AI processes your prompt in just 3–5 seconds. Your custom map appears instantly, ready for download in high resolution (2048×2048 px) with full commercial licensing included. The platform maintains a history log of all generated maps, allowing you to revisit and download previous creations anytime.

The system operates on a credit-based model (1 credit per map) with non-expiring credits and multiple pricing tiers to match different usage needs.

Key Features

Choose from Fantasy, Watercolor, Sci-Fi, or define custom styles to match your creative vision.

Generate detailed maps in under 5 seconds with optimized AI processing.

Upload images (PNG/JPG/WEBP, up to 5 MB) to guide style and layout composition.

Instant download of 2048×2048 px maps suitable for print and digital use.

Access and download all previously generated maps from your personal history log.

Use generated maps in commercial projects with full licensing rights included.

- Text-to-map AI generation from detailed descriptions

- Non-expiring credit packs (Lite, Creator, Professional tiers)

- 3 free trial credits for new users

Step-by-Step User Guide

Open your browser and navigate to aimapgen.pro.

Authenticate using Google OAuth (Google account login required).

Choose from Fantasy, Watercolor, Sci-Fi, or create a Custom style.

Enter detailed text describing layout, terrain, landmarks, and structures. Optionally upload a reference image (PNG/JPG/WEBP, ≤ 5 MB) to guide the visual style.

Click "Generate Map" and wait 3–5 seconds for AI processing to complete.

Preview your generated map and download it as a high-resolution PNG file.

Access previously generated maps through the history section for re-download.

When free credits are exhausted, buy a credit pack (Lite, Creator, or Professional) to continue generating maps.
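
Before uploading a reference image, the stated limits (PNG/JPG/WEBP, at most 5 MB) can be checked locally. The following is a minimal sketch and not part of the service: the function names are hypothetical, and treating `.jpeg` as JPG and "5 MB" as 5 × 1024 × 1024 bytes are assumptions, since the site may count differently.

```python
from pathlib import Path

# Limits from the guide above. Accepting ".jpeg" and reading "5 MB" as
# 5 * 1024 * 1024 bytes are assumptions made for this sketch.
ALLOWED_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}
MAX_BYTES = 5 * 1024 * 1024

def reference_image_problems(filename, size_bytes):
    """Return a list of problems; an empty list means the file looks acceptable."""
    problems = []
    suffix = Path(filename).suffix.lower()
    if suffix not in ALLOWED_SUFFIXES:
        problems.append(f"unsupported format {suffix or '(none)'}")
    if size_bytes > MAX_BYTES:
        problems.append(f"file is {size_bytes / 1024 / 1024:.1f} MB, limit is 5 MB")
    return problems

def check_file(path):
    """Convenience wrapper that reads the name and size from a file on disk."""
    p = Path(path)
    return reference_image_problems(p.name, p.stat().st_size)
```

For example, `reference_image_problems("lair.gif", 1024)` reports an unsupported format, while a small `.png` returns an empty list.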

Important Notes & Limitations

- Map quality depends on prompt clarity — vague descriptions may produce less coherent results

- Reference images limited to 5 MB maximum file size

- Credits are non-refundable and non-transferable

- AI may misinterpret complex or contradictory instructions

- Service uptime not guaranteed — periodic maintenance or downtime possible

- Generated maps stored temporarily and deleted after 30 days

Frequently Asked Questions

New users receive 3 free trial credits to test the platform before purchasing additional credits.

Lite Pack: 5 credits for $1.99

Creator Pack: 15 credits for $4.99

Professional Pack: 30 credits for $8.99
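
Because each map costs one credit, the effective price per map follows directly from the pack prices above. A small sketch of that arithmetic, with the pack data copied from the list and the function name chosen for illustration:

```python
# Pack name -> (credits, price in USD), as listed above.
PACKS = {
    "Lite": (5, 1.99),
    "Creator": (15, 4.99),
    "Professional": (30, 8.99),
}

def cost_per_map(credits, price, credits_per_map=1):
    """Price of a single generated map for a given pack."""
    maps = credits // credits_per_map
    return price / maps

if __name__ == "__main__":
    for name, (credits, price) in PACKS.items():
        print(f"{name}: ${cost_per_map(credits, price):.3f} per map")
```

This works out to roughly $0.40 per map for Lite, $0.33 for Creator, and $0.30 for Professional, so the larger packs are about 16% and 25% cheaper per map than Lite.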

All maps (both trial and paid) include commercial license rights, allowing use in commercial projects without additional fees.

The service maintains a history log of generated maps, accessible through your account dashboard until the maps are deleted after 30 days.

Credits never expire once purchased, allowing you to use them at your own pace.

Reference images can be PNG, JPG, or WEBP files with a maximum size of 5 MB.

The platform includes content filters for safety. Inappropriate or unsafe requests may be rejected without credit refund.

Maps are stored temporarily and automatically deleted after 30 days. Download important maps promptly for permanent storage.

Credits are generally non-refundable. Refunds may be considered only in cases of technical issues preventing service access.

DeepMind’s Genie 3

Application Information

| Specification | Details |
|---|---|
| Developer | Developed by DeepMind, Google's advanced AI research division |
| Platform | Experimental world model running on AI infrastructure (not a consumer app) |
| Availability | Global research preview with no regional restrictions |
| Pricing | Research preview, accessible to selected researchers and creators only (not yet commercial) |

What is Genie 3?

DeepMind's Genie 3 is a groundbreaking "world model" AI system that transforms text or image prompts into interactive, explorable 3D environments in real time. Unlike traditional generative models that produce static images or short videos, Genie 3 creates persistent worlds you can navigate, manipulate, and modify on the fly.

This represents a major evolution from earlier versions, delivering minutes of consistent interactivity, environment memory, and dynamic "promptable world events" that respond to your commands.

How Genie 3 Works

Genie 3 begins with a text prompt or image describing your desired world—such as "an ancient forest under stormy skies" or "desert canyon with flowing water." The model then generates a real-time environment at 24 frames per second in 720p resolution that you can explore from a first-person perspective.

What sets Genie 3 apart is its memory and consistency: when you leave a location and return, objects and changes remain intact—walls you painted, furniture you moved, all persist. The system also supports promptable world events, allowing you to issue new instructions during exploration (like "make it rain" or "add a cave opening") and watch the environment adapt dynamically.

Built on advanced world model research, Genie 3 simulates environments, physics, object interactions, and agent behavior. It connects to DeepMind's broader work in generative video (Veo series) and AI agent training.

Key Features

Transform text prompts into fully explorable 3D environments instantly

Smooth 24 fps playback at 720p resolution with video-like quality

Environment maintains object placement and changes across multiple visits

Modify weather, insert objects, or change conditions during exploration

Create photorealistic settings or imaginative fantasy realms

Explore for several minutes with stable coherence (vs. 10-20 seconds in Genie 2)

User Guide

Genie 3 is currently in limited research preview. Apply or wait for an invitation as a researcher or creator.

Provide a descriptive text prompt or reference image to guide world generation (e.g., "ancient forest under stormy skies").

The model builds your world in real time at 24 fps, 720p resolution.

Move through the environment, look around, and interact with objects from a first-person perspective.

Issue new prompts to adapt the scene dynamically—change weather, add structures, or modify terrain.

Leave a location and return to verify that objects and edits remain in place.

Record or capture frames during exploration (availability depends on preview interface).

Important Limitations

- Duration constraints: Environments remain stable for only a few minutes before coherence degrades

- No real-world locations: Cannot simulate precise geographic locations—all worlds are fictional and generative

- Visual artifacts: Generated elements may hallucinate or glitch; objects can appear unnatural, humans may move oddly

- Text rendering issues: In-scene text (signs, labels) tends to be jumbled unless explicitly specified in prompts

- Limited multi-agent support: Multiple AI agents interacting in the same world is still under research

- Research tool status: Performance, interface, and availability may change as DeepMind continues development

Frequently Asked Questions

Genie 3 is DeepMind's latest interactive world model that converts text or image prompts into explorable 3D environments with persistent memory and real-time interactivity.

Genie 3 is not yet publicly available. It is currently limited to a small group of researchers, artists, and creators in a preview stage, and public access has not been announced.

Genie 3 maintains world consistency for several minutes—a significant improvement over previous models that lasted only 10-20 seconds.

Genie 3 features persistent memory: objects you modify, move, or add remain in place when you leave and return to a location.

Through "promptable world events," you can issue new instructions mid-exploration (change weather, add objects, modify terrain) and the environment will respond dynamically.

Genie 3 renders environments at 720p resolution with 24 frames per second for smooth, video-like playback.

Genie 3 cannot currently simulate real-world locations. It generates fictional worlds based on prompts, not real-world geographic reconstructions or location-based simulations.

Applications include game prototyping, AI agent training, virtual simulation, creative concept design, and scientific research toward artificial general intelligence (AGI).

DeepMind has not confirmed a consumer release timeline. For now, Genie 3 remains a research resource for selected users.

Ludus AI (Unreal Engine Plugin)

Application Information

| Specification | Details |
|---|---|
| Developer | Developed by LudusEngine, specializing in AI-powered tools for Unreal Engine development |
| Platform | Plugin for Unreal Engine 5 (versions 5.1–5.6) with Visual Studio integration |
| Availability | Global access via plugin download and web app with English UI |
| Pricing | Free trial available, with credit-based paid plans for advanced features |

What is Ludus AI?

Ludus AI is an intelligent toolkit designed specifically for Unreal Engine developers, delivering C++ code generation, Blueprint assistance, scene creation, and natural language commands directly within the Unreal editor. This AI-powered assistant streamlines development workflows, eliminates repetitive boilerplate tasks, and acts as your embedded coding companion inside the engine.

How Ludus AI Works

After installation, Ludus AI integrates seamlessly into Unreal Engine through intuitive menu tools. Developers can leverage its capabilities to:

- Ask engine-specific questions and receive instant answers via LudusDocs

- Generate or modify Unreal-optimized C++ code using LudusCode

- Analyze, comment on, and improve Blueprints with LudusBlueprint

- Execute natural language commands through LudusChat (e.g., "create a directional light above this actor") that automatically translate into scene modifications or asset creation

Ludus AI understands your project context and generates relevant, tailored outputs based on your current work. The plugin requires activation in the Unreal editor and authentication through your LudusEngine account.

Core Features

Generate Unreal-specific functions, classes, and code structures tailored to your project needs.

Analyze, comment, suggest improvements, and generate code for Blueprint graphs with AI assistance.

Use LudusChat to place objects, modify scenes, and create assets through conversational commands.

LudusDocs provides instant engine queries, explanations, and contextual documentation support.

Bring Unreal-aware AI assistance directly into your IDE for seamless development workflow.

Start with free credits and purchase additional packs for advanced operations as needed.

Installation & Setup Guide

Navigate to app.ludusengine.com and log in using your preferred authentication method (GitHub, Google, or Discord).

From the portal dashboard, select your Unreal Engine version (5.1–5.6) and download the corresponding plugin package.

- Extract the downloaded plugin package

- Move the plugin folder to your Unreal Engine's Plugins/Marketplace directory

- Launch Unreal Engine and navigate to Edit → Plugins

- Search for Ludus AI, enable it, and restart the editor
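
The extract-and-move steps above can be scripted. The sketch below assumes the downloaded package is a `.zip` archive with a single plugin folder at its top level; neither detail is confirmed here, and the function name and the paths in the usage comment are hypothetical. Enabling the plugin under Edit → Plugins still has to be done in the editor.

```python
import zipfile
from pathlib import Path

def install_plugin(archive, marketplace_dir):
    """Extract a plugin archive into the engine's Plugins/Marketplace directory.

    Returns the top-level folders that were created by the archive.
    """
    archive = Path(archive)
    marketplace_dir = Path(marketplace_dir)
    if not zipfile.is_zipfile(archive):
        raise ValueError(f"{archive} is not a zip archive")

    # Create Plugins/Marketplace if this engine install does not have it yet.
    marketplace_dir.mkdir(parents=True, exist_ok=True)

    with zipfile.ZipFile(archive) as zf:
        # Zip member names always use forward slashes.
        top_levels = {name.split("/")[0] for name in zf.namelist() if name.strip("/")}
        zf.extractall(marketplace_dir)

    return sorted(marketplace_dir / name for name in top_levels)

# Hypothetical usage; adjust both paths to your own download and engine install:
# install_plugin("Downloads/LudusAI.zip", "UE_5.4/Engine/Plugins/Marketplace")
```

After the script runs, restart Unreal Engine so the plugin list is refreshed before searching for Ludus AI.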

Access the plugin panel through Tools → Ludus AI in the Unreal Engine menu bar.

Enter your Ludus credentials within the plugin panel to authenticate and unlock all features.

- Query engine documentation with LudusDocs

- Generate C++ code or Blueprint snippets

- Issue natural language commands for scene modifications

- Iterate, refine outputs, and integrate code into your project

Track your credit consumption in the dashboard, purchase additional credit packs when needed, and optimize prompts to maximize efficiency.

Important Limitations & Considerations

- Blueprint suggestions can sometimes be vague or require significant refinement

- AI may occasionally hallucinate or generate non-functional code

- Credit consumption can be substantial for complex or repeated prompts

- Plugin compatibility limited to Unreal Engine versions 5.1–5.6

- Older versions (UE4) and future releases beyond 5.6 are not supported

- Cloud-based inference may introduce latency or occasional service interruptions

- Advanced features require paid credit packs beyond the free tier

- Documentation and community resources are still expanding as the platform matures

Frequently Asked Questions

Ludus AI supports Unreal Engine versions 5.1 through 5.6. Compatibility with older versions (UE4) or future releases is not guaranteed.

You can start with a free trial and complimentary credits. Advanced features and heavy usage require purchasing additional credit packs through paid plans.

Ludus AI does not replace developers. It accelerates workflows and reduces boilerplate code, but complex game systems, optimization, and debugging still require human expertise and oversight.

A plugin is required. The AI functionality is delivered through a plugin that must be installed and enabled within Unreal Engine to access all features.

Ludus AI also offers a Visual Studio extension that provides Unreal-aware AI assistance directly within your IDE.

LudusBlueprint can analyze existing Blueprint graphs and suggest improvements, though the quality and applicability of its suggestions vary with the complexity of the graph.

LudusDocs is an AI-powered documentation assistant that answers engine-specific questions and provides instant, contextual explanations about Unreal Engine features and APIs.

If generated code does not work, review, debug, and adjust it manually. AI-generated outputs are starting points that require human validation, and they are not guaranteed to be production-ready.

Visit the Ludus Academy and official documentation site for comprehensive tutorials, usage examples, and prompt engineering guides to maximize your productivity.

In addition, many other AI tools and applications are shaping how game worlds are created, such as:

Recraft (AI Asset Generator)

Developers can "generate game assets – sprites, textures, environments – through simple text prompts" and import them into engines like Unity or Godot.

- Type descriptions like "ancient temple ruins"

- Instantly get textures and 3D models

- Direct integration with game engines

- Complete level layouts from text

Promethean AI

An AI-powered scene assembly tool that automatically arranges props, lighting, and terrain into cohesive 3D scenes following style guidelines.

- Saves countless hours in 3D design work

- Generates city plazas and dungeon chambers

- Automatic prop and lighting placement

- No manual modeling required

Microsoft's Muse (WHAM)

Microsoft Research's World and Human Action Model is a generative game model that produces full gameplay sequences and visuals.

- Transformer-based world model

- Captures game-level geometry and dynamics

- Learns structure of game worlds

- Generates consistent world content

NVIDIA Omniverse & Cosmos

NVIDIA's platform includes generative AI features for environment creation using text prompts to fetch or generate 3D assets.

- Create "countless synthetic virtual environments"

- Omniverse NIM services for asset generation

- Train Cosmos world models

- Accelerates large-scale world building

Key Benefits and Applications

AI-generated maps and environments offer several practical advantages that are transforming game development:

Speed and Scale

AI can produce huge, detailed worlds in seconds. Ludus AI generates complex 3D assets "within seconds," whereas manual modeling would take hours.

Variety and Diversity

Machine learning models introduce endless variety. AI takes procedural generation further by blending styles, themes, and story elements in novel ways.

- Infinite planets

- Unique maps

- No repetition

Efficiency

Automating map creation reduces workload and costs. Small indie teams and big studios can offload routine level design to AI and focus on gameplay and narrative.

- Saves countless hours in 3D design work

- Reduces development costs

- Improves productivity and creativity

Dynamic and Adaptive Worlds

Advanced AI can adapt environments in real time, generating new layouts on the fly or reshaping terrain according to story progress.

- Generate new dungeons each visit

- Respond to player actions

- Richer than simple procedural tricks

- More coherent "living" worlds
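
The "new dungeon each visit" idea can be illustrated without any AI model. The sketch below uses a plain random-walk ("drunkard's walk") generator as a stand-in for a learned model, and derives the seed from a visit counter so that every visit produces a different but reproducible layout. The function names, the seed-mixing constant, and the `#`/`.` tile symbols are assumptions for illustration only.

```python
import random


def generate_dungeon(width: int, height: int, seed: int, floor_target: float = 0.4):
    """Carve floor tiles ('.') out of solid wall ('#') with a seeded random walk."""
    rng = random.Random(seed)
    grid = [["#"] * width for _ in range(height)]

    # Start in the middle and keep walking until enough interior tiles are carved.
    x, y = width // 2, height // 2
    grid[y][x] = "."
    carved = 1
    goal = int((width - 2) * (height - 2) * floor_target)

    while carved < goal:
        dx, dy = rng.choice([(1, 0), (-1, 0), (0, 1), (0, -1)])
        nx, ny = x + dx, y + dy
        # Stay inside the outer border so the map is always enclosed by walls.
        if 1 <= nx < width - 1 and 1 <= ny < height - 1:
            x, y = nx, ny
            if grid[y][x] == "#":
                grid[y][x] = "."
                carved += 1

    return ["".join(row) for row in grid]


def dungeon_for_visit(world_seed: int, visit: int, width: int = 24, height: int = 12):
    """Mix the visit counter into the seed: a new layout per visit, same layout on replay."""
    return generate_dungeon(width, height, seed=world_seed * 1_000_003 + visit)
```

Calling `dungeon_for_visit(7, 1)` and `dungeon_for_visit(7, 2)` gives two layouts for consecutive visits, while repeating a call with the same arguments reproduces the same map, which is useful for saves and debugging. A learned model would replace `generate_dungeon`; the seeding pattern stays the same.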

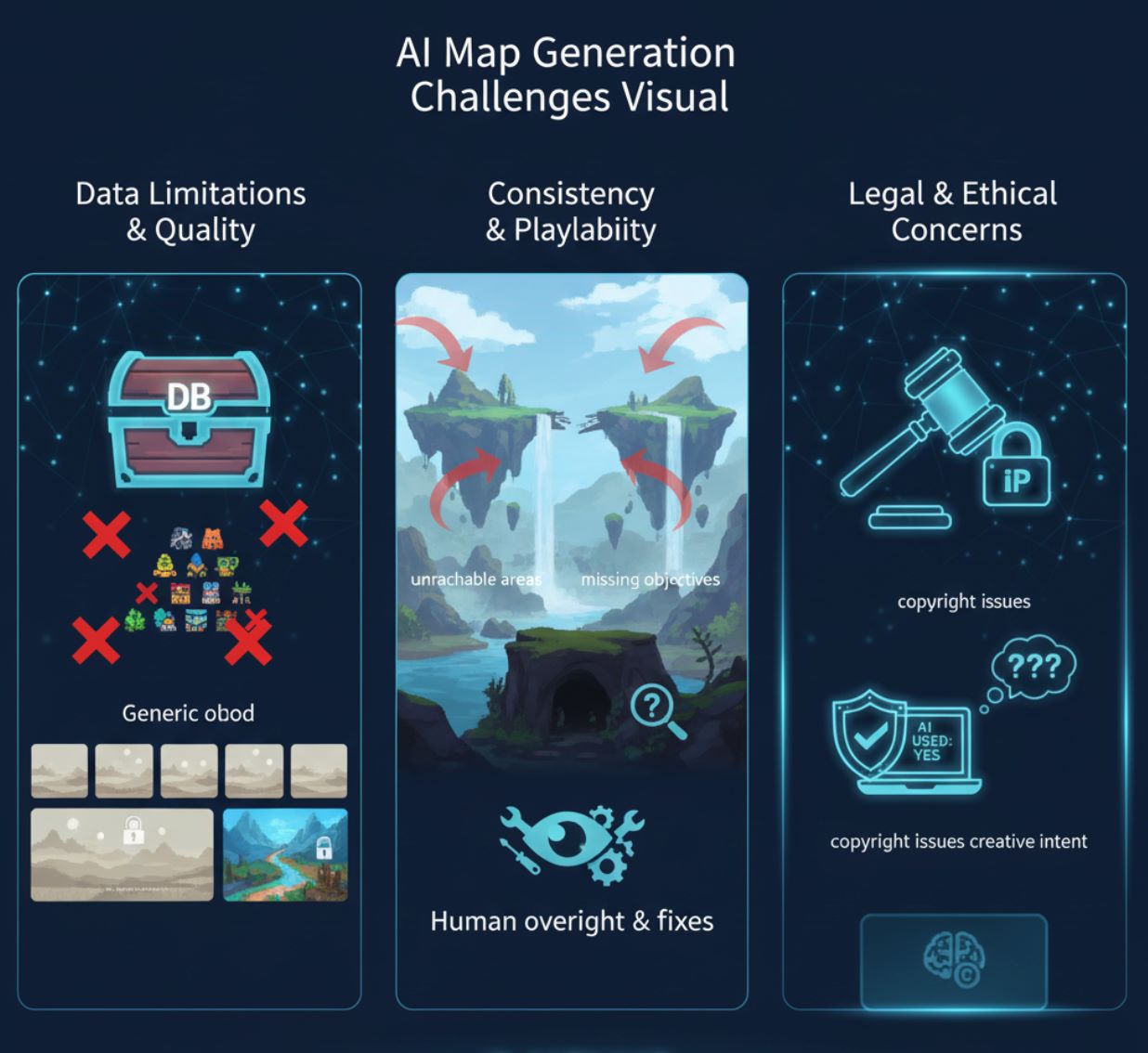

Challenges and Future Directions

Despite the promise, AI-driven map generation faces several important challenges that developers must navigate:

Training Data Challenges

Building "high-performance generative AI requires vast amounts of training data," and game-specific datasets are often scarce, especially for niche game genres.

Consistency and Playability

An AI might generate terrain that looks beautiful but contains unreachable areas or missing objectives. Human oversight remains important for ensuring quality gameplay.

- Visual appeal doesn't guarantee playability

- Unreachable areas may be generated

- Missing objectives or game logic

- Fully automated output still needs human review
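
Some playability problems can be caught automatically before a human reviews the map. As a minimal sketch, assuming a generated level is exported as a grid of characters where `#` is a wall and anything else is walkable, a breadth-first flood fill from the player's start finds every tile that can never be reached. The grid format and the function name are assumptions, not part of any tool described here.

```python
from collections import deque


def unreachable_floor_tiles(grid, start):
    """Return walkable (x, y) tiles that cannot be reached from start with 4-way movement."""
    height, width = len(grid), len(grid[0])
    sx, sy = start
    if grid[sy][sx] == "#":
        raise ValueError("start must be a walkable tile")

    # Breadth-first flood fill over walkable neighbours.
    seen = {start}
    queue = deque([start])
    while queue:
        x, y = queue.popleft()
        for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nx, ny = x + dx, y + dy
            inside = 0 <= nx < width and 0 <= ny < height
            if inside and grid[ny][nx] != "#" and (nx, ny) not in seen:
                seen.add((nx, ny))
                queue.append((nx, ny))

    # Anything walkable that the fill never touched is unreachable.
    return sorted(
        (x, y)
        for y, row in enumerate(grid)
        for x, tile in enumerate(row)
        if tile != "#" and (x, y) not in seen
    )


level = [
    "#######",
    "#S..#G#",  # the goal 'G' is sealed off by walls
    "#...###",
    "#######",
]
print(unreachable_floor_tiles(level, start=(1, 1)))  # prints [(5, 1)]
```

A non-empty result means the map should be regenerated or repaired. A check like this only covers reachability; objectives, pacing, and difficulty still need a designer's eye.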

Legal and Ethical Concerns

Legal and ethical concerns are emerging as AI becomes more prevalent in game development:

- Some platforms, such as Steam, now require developers to disclose AI use

- Copyright questions: What if AI learned from copyrighted maps?

- Intellectual property ownership debates

- Transparency and attribution requirements

Conclusion: The Future of AI-Generated Game Worlds

AI-generated game maps and environments are already reshaping game development. Leading tech projects—from Google DeepMind's Genie to NVIDIA's Omniverse—are proving that whole worlds can be "dreamed up" by AI from simple descriptions.

This technology promises faster creation of immersive worlds with unprecedented diversity. As AI models continue to improve, we can expect even more lifelike and interactive virtual landscapes created on the fly.

— Industry Analysis, 2024

For players and designers alike, the future holds richer game worlds built by intelligent algorithms, so long as we use the technology wisely and creatively.
