AI analyzes experimental data

In scientific research, speed and accuracy in analyzing experimental data are critical. In the past, processing large datasets could take days or even weeks, but artificial intelligence (AI) has changed that. AI can scan, process, and extract insights from massive volumes of data within minutes, helping researchers save time, reduce errors, and accelerate discoveries.

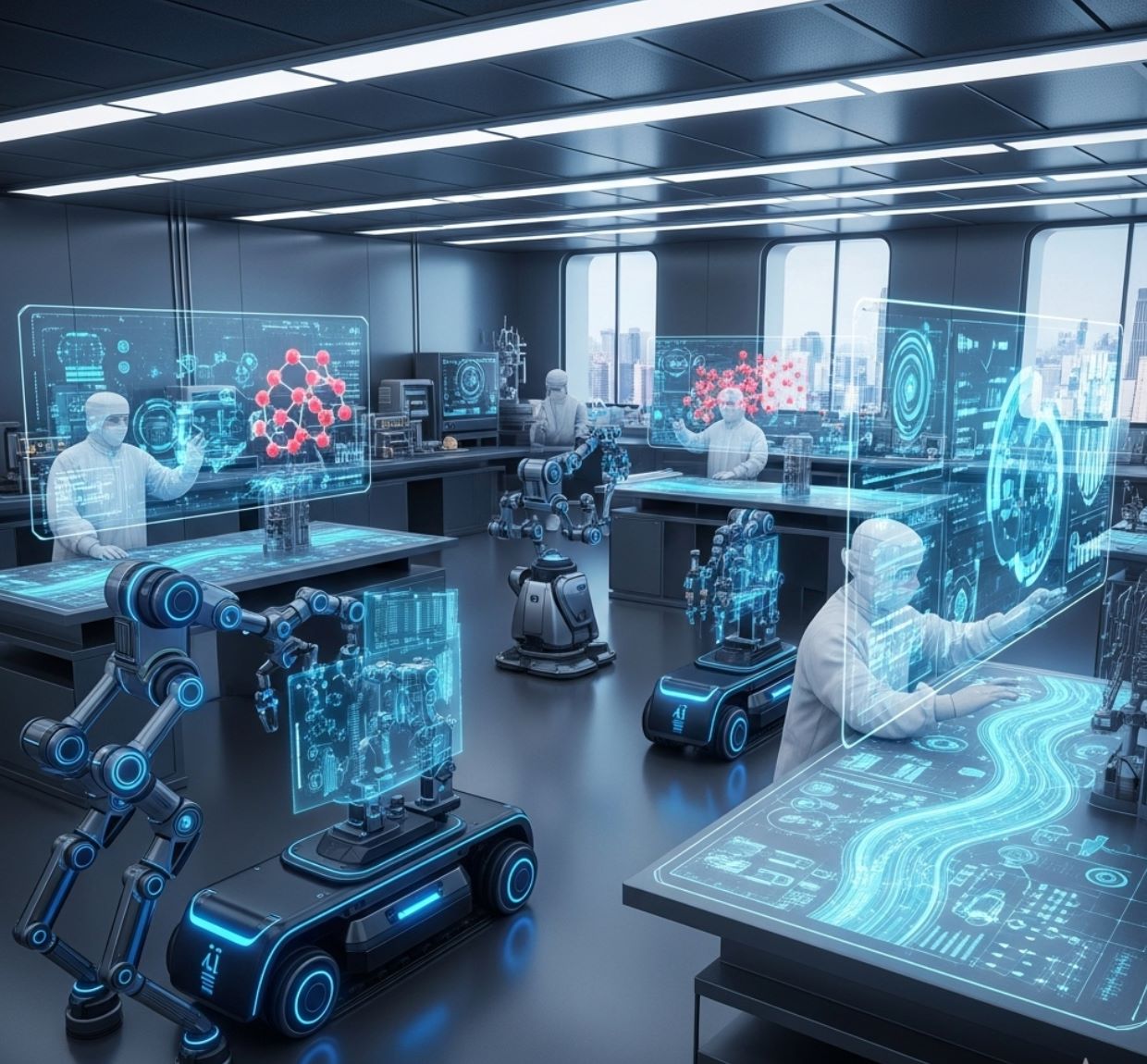

Modern research labs are using AI to process experimental results at unprecedented speed. By integrating AI with automated instruments and supercomputers, scientists can analyze vast datasets in real time, spot patterns as the data arrive, and even predict some outcomes without running slow traditional experiments. This capability is already transforming fields from materials science to biology.

Below we explore key ways AI makes lab data analysis much faster:

Four Revolutionary AI Applications in Laboratory Analysis

Automated "Self-Driving" Laboratories

AI-guided robots run experiments continuously and choose which samples to test, cutting down idle time and redundant measurements.

Real-Time Data Processing

Data streamed from instruments are fed straight into AI-driven computing systems for analysis. Researchers can adjust experiments on the fly because results come back in minutes instead of days.

Predictive Machine Learning Models

Once trained, AI models can simulate experiments computationally. For example, they can generate thousands of molecular structures or gene-expression profiles in minutes, work that lab techniques would take weeks or months to complete.
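The core of a "virtual experiment" can be sketched in a few lines of Python under deliberately simple assumptions: a cheap surrogate model (here an ordinary least-squares line) is fitted to a handful of measured results and then evaluated on thousands of untested candidates almost instantly. The single descriptor, the `measured_x`/`measured_y` values, and the candidate pool are hypothetical placeholders; real predictive models are large neural networks trained on far richer data.

```python
import random

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Hypothetical training data: one descriptor per sample and its measured property.
measured_x = [0.1, 0.4, 0.5, 0.9, 1.3, 1.6]
measured_y = [1.2, 2.0, 2.4, 3.5, 4.6, 5.3]

slope, intercept = fit_line(measured_x, measured_y)

# "Virtual experiments": score 10,000 untested candidates computationally.
rng = random.Random(0)
candidates = [rng.uniform(0.0, 2.0) for _ in range(10_000)]
predictions = [slope * x + intercept for x in candidates]

# Shortlist the five most promising candidates for real lab validation.
top5 = sorted(zip(predictions, candidates), reverse=True)[:5]
for pred, x in top5:
    print(f"descriptor={x:.3f}  predicted property={pred:.2f}")
```

Only the shortlisted candidates would go on to the lab, which is where the time savings come from.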

End-to-End Research Automation

Broad AI platforms (such as FutureHouse) are being built to handle entire workflows, from literature review and data gathering to experimental design and analysis, automating many critical research steps.

AI-Driven Automation in Laboratories

Researchers are building autonomous labs that run experiments with minimal human intervention. For instance, Lawrence Berkeley Lab's A-Lab facility pairs AI algorithms with robotic arms: the AI suggests new materials to try, and robots mix and test them in rapid succession. This tight loop of "robot scientists" means promising compounds are validated much more quickly than in manual studies.
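The suggest, test, and learn cycle can be sketched as a small active-learning loop. This is a toy under stated assumptions, not A-Lab's actual software: `run_experiment` is a hypothetical stand-in for the robotic synthesis-and-measurement step, the "model" is a crude nearest-neighbour estimate, and each new candidate is chosen with an optimistic, upper-confidence-bound-style score that favours both high predicted quality and unexplored regions.

```python
import math

def run_experiment(x):
    """Hypothetical stand-in for a robotic synthesis-and-measurement step.
    Returns a 'quality' score for composition x (it peaks at x = 0.62)."""
    return math.exp(-((x - 0.62) ** 2) / 0.01)

def acquisition(x, observations, explore=0.5):
    """Optimistic score: the value of the nearest tested point (a crude prediction)
    plus a bonus proportional to the distance from it (a crude uncertainty)."""
    nearest_x, nearest_y = min(observations, key=lambda obs: abs(obs[0] - x))
    return nearest_y + explore * abs(nearest_x - x)

# Candidate compositions the AI may propose (a 1D grid for simplicity).
candidates = [i / 100 for i in range(101)]

# Seed the loop with experiments at both ends of the range.
observations = [(0.0, run_experiment(0.0)), (1.0, run_experiment(1.0))]
tested = {0.0, 1.0}

for step in range(15):
    # 1. The model suggests the untested candidate with the best score.
    untested = [x for x in candidates if x not in tested]
    next_x = max(untested, key=lambda x: acquisition(x, observations))
    # 2. The "robot" runs the experiment.
    y = run_experiment(next_x)
    # 3. The result is fed back so the next suggestion is better informed.
    observations.append((next_x, y))
    tested.add(next_x)

best_x, best_y = max(observations, key=lambda obs: obs[1])
print(f"best composition: {best_x:.2f} (score {best_y:.3f}) after {len(observations)} experiments")
```

The loop tests 17 of the 101 candidates yet homes in on the best region, which is the same reason closed-loop labs avoid redundant measurements.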

Similarly, FutureHouse is developing AI agents to handle tasks such as literature search, experiment planning, and data analysis, so scientists can focus on discovery rather than routine work.

Self-Driving Microscope

Intelligent Scanning

An especially striking example is Argonne National Laboratory's self-driving microscope. In this system, an AI algorithm starts by scanning a few random points on a sample, then predicts where the next interesting features might be.

On-the-fly AI control eliminates the need for human intervention and dramatically expedites the experiment.

— Argonne National Laboratory Scientists

By focusing only on data-rich regions and skipping uniform areas, the microscope collects useful images far faster than a traditional point-by-point scan. In practice, this means much more efficient use of time on high-demand instruments: researchers can run multiple high-resolution scans in the same amount of time that manual methods would take for just one.
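The scanning strategy above can be illustrated with a simplified one-dimensional stand-in. This is not Argonne's actual algorithm; it is a hypothetical heuristic that, after a few random probes, repeatedly measures the midpoint of the neighbouring pair of measurements whose values differ most. Measurements therefore pile up around feature edges and largely skip uniform regions. `sample_signal`, `budget`, and `min_gap` are illustrative assumptions.

```python
import random

def sample_signal(x):
    """Hypothetical sample: flat background with one bright feature between x = 0.30 and 0.55."""
    return 1.0 if 0.30 <= x <= 0.55 else 0.0

def adaptive_scan(n_initial=5, budget=40, min_gap=0.002, seed=1):
    """Return {position: measured value} for points chosen adaptively along a line scan."""
    rng = random.Random(seed)
    # Begin with the two ends of the scan line plus a few random probe points.
    points = {0.0: sample_signal(0.0), 1.0: sample_signal(1.0)}
    for _ in range(n_initial):
        x = rng.random()
        points[x] = sample_signal(x)

    while len(points) < budget:
        xs = sorted(points)
        # Score each gap between neighbouring measurements: first by how much the
        # signal changes across it, then by its width. Gaps narrower than
        # min_gap are treated as already resolved.
        gaps = [
            (abs(points[right] - points[left]), right - left, left, right)
            for left, right in zip(xs, xs[1:])
            if right - left > min_gap
        ]
        if not gaps:
            break
        _, _, left, right = max(gaps)
        # Measure next in the middle of the most informative gap.
        mid = (left + right) / 2
        points[mid] = sample_signal(mid)
    return points

points = adaptive_scan()
print(f"{len(points)} measurements")
```

With at most 40 measurements, both edges of the feature end up located to within about 0.002 of the scan length, whereas a uniform 40-point raster would only resolve them to roughly 0.025.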

Real-Time Data Processing in Research Facilities

Large research facilities are using AI to analyze data as it is produced. At Berkeley Lab, raw data from microscopes and telescopes is streamed directly to a supercomputer.

Distiller Platform

Machine-learning workflows then process the data within minutes. A new platform called Distiller, for example, sends electron-microscope images to the NERSC supercomputer while imaging is still under way; the results come back within minutes, allowing scientists to refine the experiment on the spot.
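A minimal sketch of the "analyze while acquiring" pattern, assuming frames arrive one at a time from an instrument. The generator pipeline below is hypothetical and unrelated to Distiller's real interfaces: `acquire_frames` simulates a detector whose signal degrades during the run, and `analyze_frame` stands in for the machine-learning step that would run on the supercomputer. Because each frame is analyzed as soon as it arrives, a problem can be acted on mid-run rather than after the whole dataset has been collected.

```python
import random
from typing import Iterator, List

def acquire_frames(n_frames: int, seed: int = 0) -> Iterator[List[float]]:
    """Hypothetical detector: yields frames (lists of pixel intensities) one at a time."""
    rng = random.Random(seed)
    for i in range(n_frames):
        # Signal strength drops by one unit per frame, e.g. as the sample degrades.
        level = 10.0 - i
        yield [level + rng.gauss(0.0, 1.0) for _ in range(256)]

def analyze_frame(frame: List[float]) -> float:
    """Stand-in for the ML analysis step: here just the mean intensity."""
    return sum(frame) / len(frame)

def stream_and_steer(frames: Iterator[List[float]], threshold: float = 5.5) -> List[float]:
    """Analyze each frame as it arrives and stop as soon as quality drops too low."""
    results = []
    for index, frame in enumerate(frames):
        score = analyze_frame(frame)
        results.append(score)
        if score < threshold:
            # Feedback on the spot: adjust the experiment now instead of
            # discovering the problem after the run is over.
            print(f"frame {index}: score {score:.2f} below {threshold}, adjusting experiment")
            break
    return results

results = stream_and_steer(acquire_frames(n_frames=20))
print(f"analyzed {len(results)} frames before intervening")
```

Here the run is interrupted after 6 of the 20 frames, as soon as the signal falls below the threshold.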

Even complex instruments benefit: at the BELLA laser accelerator, deep-learning models continuously tune laser and electron beams for optimal stability, slashing the time scientists spend on manual calibrations.

24/7 Monitoring

Other national labs use AI for live quality control. Brookhaven's NSLS-II synchrotron now employs AI agents to watch beamline experiments 24/7.

If a sample shifts or data look "off," the system flags it immediately. This kind of anomaly detection saves huge amounts of time—scientists can fix problems in real time instead of discovering them after hours of lost beamtime.
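One simple way to flag data that "look off" is a rolling z-score: compare each new reading with the mean and standard deviation of a recent window, and raise a flag when it deviates by more than a few standard deviations. This is a generic textbook technique shown with assumed parameters (`window`, `z_threshold`) and invented readings; the source does not describe the method NSLS-II's agents actually use.

```python
from collections import deque
from statistics import mean, stdev

def detect_anomalies(readings, window=10, z_threshold=4.0):
    """Return indices of readings that deviate strongly from the recent window."""
    recent = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu = mean(recent)
            sigma = stdev(recent)
            if sigma > 0 and abs(value - mu) / sigma > z_threshold:
                flagged.append(i)
                continue  # keep the outlier out of the baseline window
        recent.append(value)
    return flagged

# Hypothetical beamline intensity readings with a sudden drop at index 15
# (for example, a sample that has shifted out of the beam).
readings = [100.0, 101.2, 99.5, 100.4, 100.9, 99.8, 100.1, 100.6, 99.7, 100.3,
            100.2, 99.9, 100.8, 100.0, 100.5, 62.0, 100.1, 99.6]
print(detect_anomalies(readings))
```

Only the reading at index 15 is flagged; normal fluctuations of about half a unit stay well below the threshold.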

Particle Physics

CERN's Large Hadron Collider uses "fast ML" algorithms built into its trigger hardware: custom AI models running on FPGAs analyze collision signals as they occur, calculating particle energies in real time and outperforming older signal filters.
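FPGA implementations of such networks typically replace floating-point arithmetic with low-bit-width fixed-point integers, because integer multiply-accumulate logic is cheaper and faster in hardware. The Python sketch below shows that arithmetic for a single neuron, with made-up inputs and weights and an assumed 8 fractional bits. It illustrates the idea only; it is neither CERN's trigger code nor a hardware description.

```python
FRAC_BITS = 8            # assumed number of fractional bits in the fixed-point format
SCALE = 1 << FRAC_BITS   # 256

def to_fixed(x: float) -> int:
    """Quantize a real number to an integer with FRAC_BITS fractional bits."""
    return round(x * SCALE)

def neuron_float(inputs, weights, bias):
    """Reference floating-point neuron with ReLU activation."""
    return max(0.0, sum(x * w for x, w in zip(inputs, weights)) + bias)

def neuron_fixed(inputs, weights, bias):
    """The same neuron using only integer arithmetic, as FPGA logic would."""
    # Each product carries a scale of SCALE**2.
    acc = sum(to_fixed(x) * to_fixed(w) for x, w in zip(inputs, weights))
    # Shift back to a scale of SCALE, then add the quantized bias.
    acc = (acc >> FRAC_BITS) + to_fixed(bias)
    return max(0, acc)  # ReLU on the integer value

# Made-up detector inputs (e.g. calorimeter cell energies) and weights.
inputs = [0.75, 1.50, 0.20, 2.10]
weights = [0.40, -0.25, 0.90, 0.30]
bias = 0.05

exact = neuron_float(inputs, weights, bias)
approx = neuron_fixed(inputs, weights, bias) / SCALE
print(f"float: {exact:.4f}  fixed-point: {approx:.4f}")
```

The integer result (201/256 ≈ 0.7852) differs from the floating-point value (0.785) by less than 0.001, which is the kind of trade-off that makes nanosecond-scale hardware inference practical.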

Traditional Approach: Collect Everything, Then Analyze Later

- Hours or days of data collection
- Manual analysis after experiments
- Problems discovered too late
- Limited real-time adjustments

AI-Driven Approach: Analyze on the Fly

- Instant data processing
- Real-time experiment refinement
- Immediate problem detection
- Continuous optimization

Predictive Models for Rapid Insights

AI isn't just speeding up existing experiments; it's also replacing some slow lab work with virtual experiments. In genomics, for example, MIT chemists have developed ChromoGen, a generative AI model that learns the "grammar" of DNA folding.

ChromoGen AI

Given a DNA sequence, ChromoGen can "quickly analyze" the sequence and generate thousands of possible 3D chromatin structures in minutes. This is vastly faster than traditional lab methods: while a Hi-C experiment might take days or weeks to map the genome for one cell type, ChromoGen produced 1,000 predicted structures in just 20 minutes on a single GPU.

Gene Expression Prediction

Similarly, a team at Columbia University trained a "foundation model" on data from over a million cells to forecast gene activity. Their AI can predict which genes are switched on in a given cell type, essentially simulating what a large gene-expression experiment would show. As the researchers note, these predictive models enable "fast and accurate" large-scale computational experiments that guide and complement wet-lab work.

In tests, the AI's gene expression predictions for new cell types agreed very closely with actual experimental measurements.
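Agreement between predicted and measured expression is commonly summarized with a correlation coefficient. The sketch below computes Pearson's r for a handful of genes. The numbers are invented placeholders, not results from the Columbia study, and the source does not say which metric the researchers reported.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Invented example: predicted vs. measured expression (log scale) for six genes
# in a cell type the model did not see during training.
predicted = [2.1, 0.4, 5.3, 3.8, 1.2, 4.6]
measured = [2.4, 0.3, 5.0, 3.5, 1.6, 4.9]

print(f"Pearson r = {pearson_r(predicted, measured):.3f}")
```

A value close to 1 means the model ranks and scales gene activity much as the experiment does; values near 0 would mean the predictions carry little information.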

Impact and Future Outlook

The integration of AI into the experimental workflow is transforming science. By automating data analysis and even decision-making during experiments, AI turns what used to be a bottleneck into a turbocharged process.

Researchers can focus on discovery while machines handle repetitive tasks and real-time analysis of massive datasets.

— Research Scientists

In other words, scientists can run more experiments and draw conclusions faster than ever before.

Automating experiments with AI will significantly accelerate scientific progress.

— Argonne National Laboratory Physicists

- Current state: AI-driven tools in select labs
- Near future: more self-driving instruments
- Long term: widespread adoption of AI analysis

Looking ahead, we can expect AI's role to grow: more labs will use self-driving instruments, and more fields will rely on rapid AI analysis and prediction.

This means that the cycle of hypothesis, experiment, and result will shrink—from years to months or even days.
